Among the many strengths of WordPress is the massive number of available plugins. There are tens of thousands, and those are just the free offerings. They handle all sorts of functionality, from security to image galleries to forms. Just about everything you could possibly want for your website is only a download away.

But it is the rare plugin that is so well-crafted and useful that it inspires a number of companion offerings to use alongside it. In many cases, these base plugins are among the most popular out there. So popular and well-liked, in fact, that they have developed their very own ecosystems.

Today, we’ll take a look at the concept of WordPress plugin ecosystems. Along the way, we’ll show you some examples and discuss the advantages (and disadvantages) that come with adopting them into your website.

Prime Examples

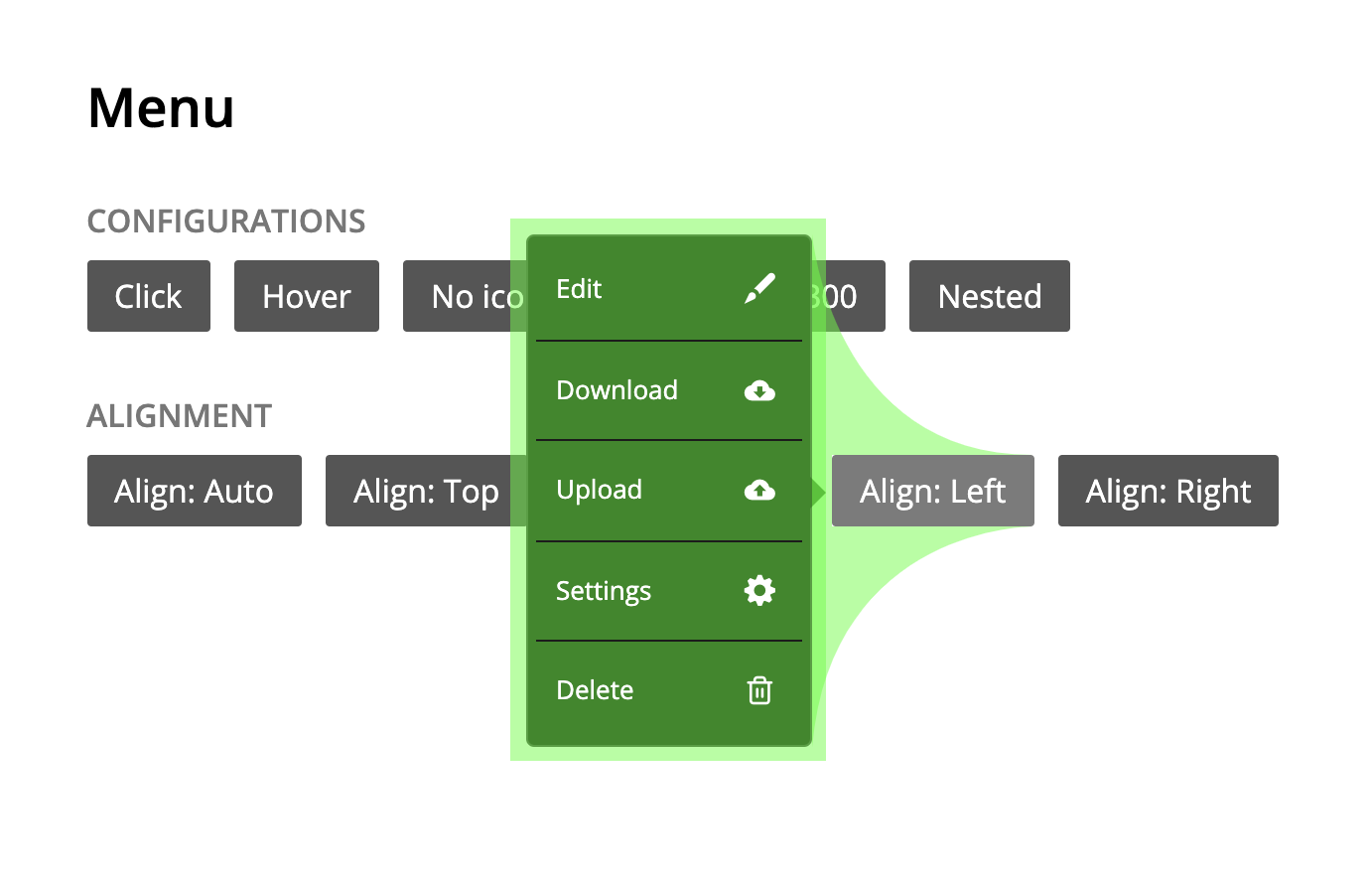

Before we dig too deeply into the pros and cons, let’s see what a plugin ecosystem looks like. For our purposes, we’ll define it as follows:

- A “base” or “core” plugin that works on its own, but also has multiple add-on plugins available;

- Add-ons that may be created by the original author, or by outside developers within the WordPress community;

- Plugins that can be free, commercial or any combination thereof.

In short, this means that the term “ecosystem” is rather flexible. It might be that a plugin’s author has created the base and all add-ons themselves. Or, other developers out there may have decided to build their own extensions. Either way, we have a group of related plugins that can scale up functionality based on need.

Here are a few prime examples we can use to better illustrate the concept:

WooCommerce

Perhaps the best-known plugin ecosystem, WooCommerce turns your website into an online store. The core plugin adds shopping cart functionality, along with related features such as shipping and accepting payments. However, it is capable of so much more.

Through the use of add-ons (WooCommerce refers to them as “extensions”), you can leverage the cart for all sorts of niche functionality. Among the more basic features are the ability to work with a wider variety of payment gateways and shipping providers. But you can also add some advanced capabilities such as selling membership subscriptions or event tickets.

Gravity Forms

Here’s a great example of a plugin whose ecosystem has taken a core concept and expanded it immensely. Gravity Forms is a form-building plugin, which already includes a lot of advanced functionality. Yet add-ons allow it to perform tasks well beyond what you’d expect from your standard contact form.

Through a community that both includes and goes beyond the plugin’s original author, add-ons allow for a number of advanced tasks. You can accept payments, run polls or surveys, connect with third-party service providers, view and manipulate entry data and a whole lot more. It may be one of the best examples of how an ecosystem provides nearly endless flexibility.

Something to Build On

One of the biggest advantages to buying into one of these plugin ecosystems is that you can add what you need, when you need it. Think of it as a building. The base plugin provides you with a solid foundation (and maybe a floor or two). Then, you can add as many floors as it takes to fulfill your needs.

Sometimes, that first core plugin is all you need. But even then, you still have the blueprints to build upon should you want to expand later.

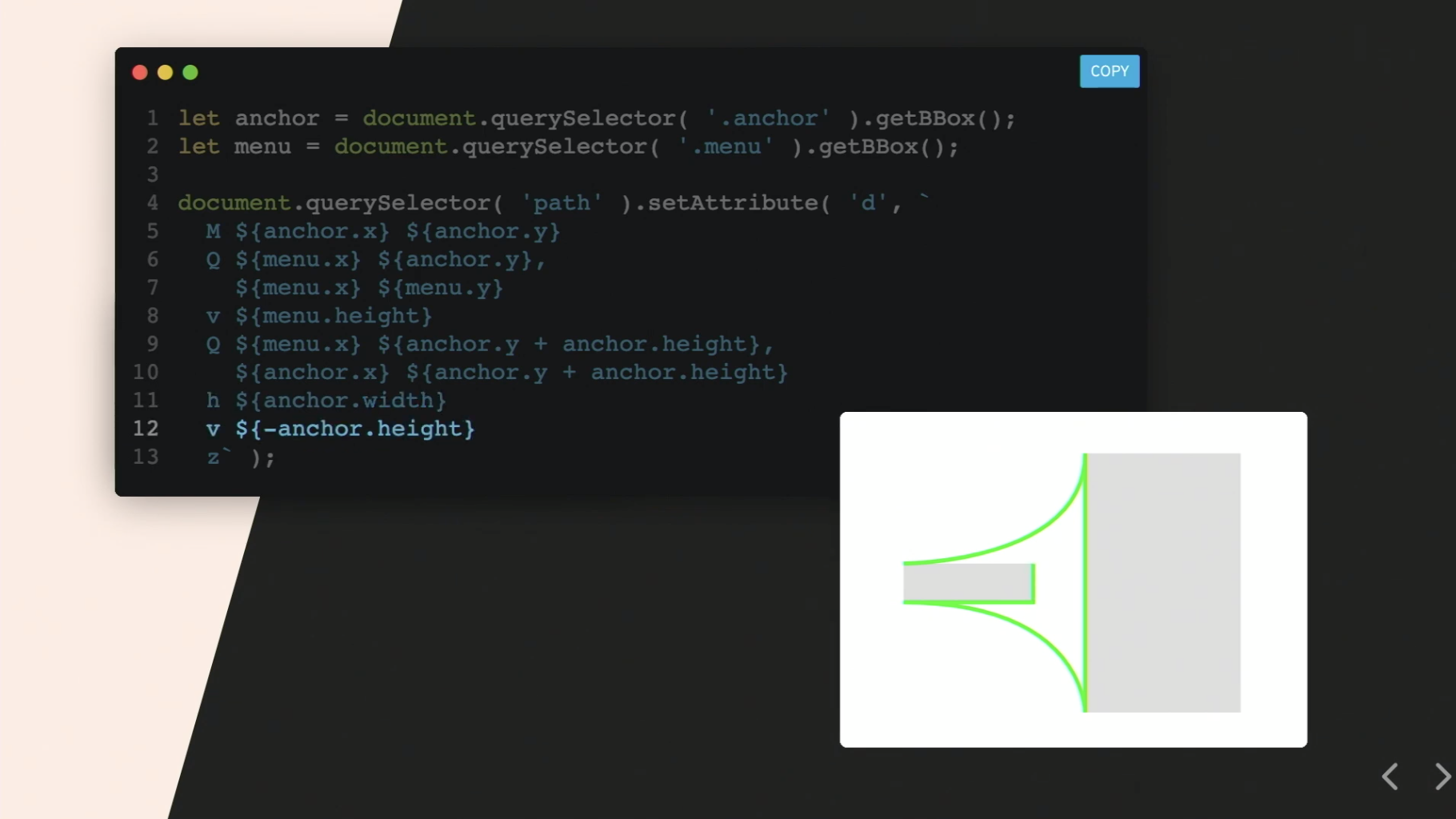

Another potential benefit is that these plugins tend to have been built with expansion in mind. That means that you don’t necessarily have to rely on official or even community-based add-ons. If you have some programming knowledge, you might be able to add functionality by building it yourself.
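To make "built with expansion in mind" a little more concrete: WordPress plugins are written in PHP and commonly expose extension points through hooks, using functions such as `add_filter()` and `apply_filters()`. The sketch below imitates that pattern in Python purely for illustration. The hook name `shipping_rate`, the flat rate and the free-shipping rule are all hypothetical and are not taken from any real plugin.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Tuple

# Hypothetical filter registry: maps a hook name to (priority, callback) pairs.
_filters: DefaultDict[str, List[Tuple[int, Callable]]] = defaultdict(list)


def add_filter(hook: str, callback: Callable, priority: int = 10) -> None:
    """Register a callback that may modify a value passed through `hook`."""
    _filters[hook].append((priority, callback))


def apply_filters(hook: str, value, *args):
    """Pass `value` through every callback on `hook`, lowest priority first."""
    for _, callback in sorted(_filters[hook], key=lambda pair: pair[0]):
        value = callback(value, *args)
    return value


# --- Base plugin: computes a shipping rate and exposes an extension point ---
def base_shipping_rate(order_total: float) -> float:
    rate = 5.00  # flat rate, chosen for illustration only
    return apply_filters("shipping_rate", rate, order_total)


# --- Add-on: free shipping above a threshold, without editing the base code ---
def free_shipping_over_50(rate: float, order_total: float) -> float:
    return 0.0 if order_total >= 50 else rate


add_filter("shipping_rate", free_shipping_over_50)

print(base_shipping_rate(20.0))  # 5.0
print(base_shipping_rate(80.0))  # 0.0
```

The point of the design is that the add-on never touches the base plugin's code. It only registers a callback on a hook the base plugin chose to expose, which is why the base plugin can be updated without the customization being overwritten.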

Plus, by utilizing a related set of plugins, you can avoid one of the more frustrating parts of WordPress site development. So often, we attempt to bring many disparate pieces together to form some sort of cohesively functioning website.

This often means using plugins that were never meant to work together, which can lead to problems when attempting to make them all run seamlessly. In theory, this shouldn’t be an issue when you tap into an ecosystem.

Potential Drawbacks

Despite the many advantages to using a set of related plugins, there are some possible downsides to consider. Among the most common:

It Can Get Expensive

For plugins with commercial add-ons, you may find yourself being nickeled and dimed for every piece of functionality you’d like to add. WooCommerce is a classic example, where each official add-on requires a yearly investment. That’s not to say it’s not worth the cost – it very well may be. Rather, it is a potential obstacle for the budget-conscious.

Not Everything You Want Is Available

This is something you’ll want to check before making any decisions as to how you’ll build your site. It may be that a base plugin and a selection of add-ons will get you 90% of the functionality you need. However, that missing 10% could be a big deal.

If a companion plugin doesn’t cover this, you might have to either look elsewhere or build it yourself. That could lead to some unexpected issues when it comes to both compatibility and cost. Short of those options, you could be left waiting a long time in hopes that the missing functionality is added at a later date.

Unofficial Add-Ons May Not Keep Pace

Plugins are updated with new features and bug fixes all the time. Sometimes, those updates can be major – and that poses a risk when using unofficial add-ons built by community members. Updating the base plugin could force you to abandon a particular add-on that hasn’t kept up.

One way to avoid this potential issue is to stick with official add-ons only. If you do utilize those from unofficial sources, look for plugins that are frequently updated. They are more likely to adapt to any major upgrades.
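As a rough illustration of that kind of due diligence, the sketch below flags add-ons that have not been tested against the version of the base plugin you plan to install. It assumes each add-on publishes the newest base-plugin version it was tested against. Where that value comes from is left open, and the add-on names and version numbers are hypothetical.

```python
from typing import Dict, List, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '8.2.1' into (8, 2, 1); assumes numeric, dot-separated parts."""
    return tuple(int(part) for part in text.strip().split("."))


def covers(tested_up_to: str, base_version: str) -> bool:
    """True if the add-on was tested against this major.minor release or newer."""
    return parse_version(tested_up_to)[:2] >= parse_version(base_version)[:2]


def untested_addons(addons: Dict[str, str], base_version: str) -> List[str]:
    """Return the names of add-ons whose tested version lags the base plugin."""
    return sorted(
        name for name, tested in addons.items()
        if not covers(tested, base_version)
    )


# Hypothetical add-ons mapped to the base-plugin version they were tested with.
installed = {
    "event-tickets-addon": "8.2",
    "legacy-gateway-addon": "7.9",
    "survey-addon": "8.3.1",
}

print(untested_addons(installed, "8.2.1"))  # ['legacy-gateway-addon']
```

Only the major and minor parts are compared, on the assumption that patch releases rarely break add-ons. That is a judgment call, not a rule, so adjust it to match how the base plugin actually versions its releases.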

A Compelling Option

In the right situation, a WordPress plugin with its own ecosystem can be your best option. This is especially true when a single plugin fulfills the core part of your website’s mission.

For instance, an eCommerce site will want to use a shopping cart that can be expanded to meet the specific requirements of the store. This provides the best opportunity for future growth and will help you avoid a costly switch later on.

Of course, there are some potential negatives to consider. But with some due diligence, you may just find a collection of plugins that will successfully power your WordPress website for years to come.