Webmail is a robust IMAP-based email service and the latest exciting addition to WPMU DEV’s all-in-one WordPress management platform product suite.

In this comprehensive guide, we show you how to get started with Webmail, how to use its features, and how to resell professional business email to clients. We also provide information on the benefits of offering IMAP-based email services for WPMU DEV platform users and resellers.

Read the full article to learn all about Webmail or click on one of the links below to jump to any section:

- Overview of Webmail

- Getting Started with Webmail

- Managing Your Emails

- Additional Email Management Features

- Reseller Integration

- Email Protocols – Quick Primer

- Professional Business Email For Your Clients

Overview of Webmail

In addition to our current email hosting offerings, Webmail is a standalone service for Agency plan members that allows for greater flexibility in email account creation.

WPMU DEV’s Webmail:

- Is affordably priced.

- Offers a superior email service with high standards of quality and reliability.

- Does not require a third-party app to work.

- Lets you set up email accounts on any domain you own or manage, whether it’s a root domain like mydomain.com or a subdomain such as store.mydomain.com.

- Lets you provide clients with professional business email no matter where their domain is hosted, and whether or not the domain is associated with a site in your Hub.

- Can be accessed from any device, even directly from your web browser.

- Can be white labeled and resold under your own brand with Reseller.

Read more about the benefits of using Webmail.

Let’s show you now how to set your clients up with email accounts and a fully-functional mailbox in just a few clicks, using any domain, and no matter where their domain is hosted.

Getting Started With Webmail

Webmail is very quick and easy to set up.

If you’re an Agency member, just head on over to The Hub.

Now, all you need to do is get acquainted with the latest powerful tool in your complete WordPress site management toolbox…

Webmail Manager

The Hub lets you create, manage, and access IMAP email accounts for any domain you own from one central location, even domains that are not directly associated with a site in your Hub.

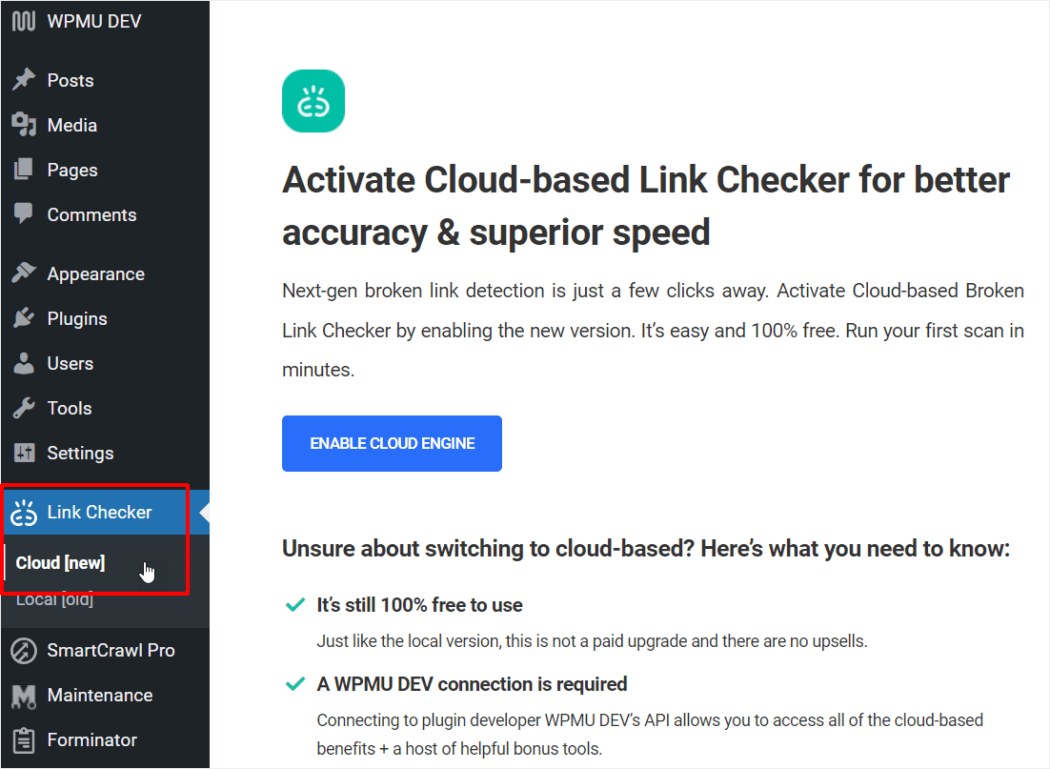

Click on Webmail on the main menu at the top of the screen…

This will bring you to the Webmail Overview screen.

If you haven’t set up an email account yet, you’ll see the screen below. Click on the “Create New Email” button to get started.

As mentioned earlier, Webmail gives you the choice of creating an email account from a domain you manage in The Hub, or a domain managed elsewhere.

For this tutorial, we’ll select a domain being managed in The Hub.

Select the domain you want to associate your email account with from the dropdown menu and click the arrow to continue.

Next, create your email address, choose a strong password, and click on the blue arrow button to continue.

You will see a payment screen displaying the cost of your new email address and billing start date. Click the button to make the payment and create your new email account.

Your new email account will be automatically created after payment has been successfully processed.

The last step to make your email work correctly is to add the correct DNS records.

Fortunately, if your site or domain is hosted with WPMU DEV, Webmail Manager can add these records for you automatically.

Note: If your domain is managed elsewhere, you will need to copy and manually add the DNS records at your registrar or DNS manager (e.g. Cloudflare).

Click on the View DNS Records button to continue.

This will bring up the DNS Records screen.

As our example site is hosted with WPMU DEV, all you need to do is click on the Add DNS Records button and the records will be created and added to the domain's DNS automatically.

After completing this step, wait for the DNS records to propagate successfully before verifying the DNS.

You can use an online tool like https://dnschecker.org to check the DNS propagation status.

Note: DNS changes can take 24-48 hours to propagate across the internet, so allow some time for DNS propagation to occur, especially if the domain is hosted elsewhere.

Click the Verify DNS button to check if the DNS records have propagated.

If your DNS records have propagated successfully, you will see green ticks for all records under the DNS Status column.
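The verification step can be pictured as comparing the records you were asked to add with what public resolvers currently return. The sketch below only illustrates that idea and is not how Webmail Manager performs its check: the record values are placeholders, the resolver names are made up, and the resolver answers are passed in as plain data so the example runs without network access.

```python
from typing import Dict, Set, Tuple

# A record is identified by (type, name); the mapped value is the expected answer.
Record = Tuple[str, str]


def propagation_status(
    expected: Dict[Record, str],
    answers: Dict[str, Dict[Record, Set[str]]],
) -> Dict[Record, bool]:
    """Return True for each record that every listed resolver already serves."""
    status = {}
    for record, value in expected.items():
        status[record] = bool(answers) and all(
            value in resolver_answers.get(record, set())
            for resolver_answers in answers.values()
        )
    return status


# Hypothetical values for illustration only; copy the real ones
# from the DNS Records screen.
expected = {
    ("MX", "mydomain.com"): "10 mx.example-mail.invalid.",
    ("TXT", "mydomain.com"): "v=spf1 include:spf.example-mail.invalid ~all",
}

# Hypothetical answers from two resolvers; the second has not seen the TXT record yet.
answers = {
    "resolver-a": {
        ("MX", "mydomain.com"): {"10 mx.example-mail.invalid."},
        ("TXT", "mydomain.com"): {"v=spf1 include:spf.example-mail.invalid ~all"},
    },
    "resolver-b": {
        ("MX", "mydomain.com"): {"10 mx.example-mail.invalid."},
    },
}

status = propagation_status(expected, answers)
for record, ok in status.items():
    print(record, "propagated" if ok else "still pending")
```

A record counts as propagated here only when every resolver returns the expected value, which mirrors the idea of waiting for green ticks on all records before treating the account as ready.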

Your email account is now fully set up and ready to use.

Repeat the above process to create and add more emails.

Now that you know how to create a new email account, let’s look at how to manage your emails effectively.

Managing Your Emails

If you have set up one or more email accounts, navigate to the Webmail Manager screen any time to view a list of all connected domains, their status, number of email accounts associated with each domain, and additional options.

To manage your email accounts, click on a domain name or select Manage Domain Email from the Options dropdown menu (the vertical ellipsis icon).

This opens up the email management section for the selected domain.

The Email Accounts tab lists all the existing email accounts for that domain, status and creation date information, plus additional email management options that we’ll explore in a moment.

Email accounts can have the following statuses: active, suspended, or disabled.

Active accounts can send and receive emails, provided DNS records have been set up and propagated correctly.

An account is suspended if its email activity violates our webmail provider’s email sending policy.

Disabling an account (see further below) only turns off sending and receiving of emails and webmail access for that email account. It does not affect billing.

Note: Unless you delete the account, you will still be charged for a disabled email account.

Before we discuss managing individual email accounts, let’s look at other main features of Webmail Manager.

Email Forwarding

Email forwarding automatically redirects emails sent to one email address to another designated email address. It allows users to receive emails sent to a specific address without having to check multiple accounts. For example, emails sent to info@yourcompany.tld can be automatically forwarded to john@yourcompany.tld.

Every email account includes 10 email forwarders. This allows you to automatically forward emails to multiple addresses simultaneously (e.g. john@yourcompany.tld, accounts@yourcompany.tld, etc.).

To activate email forwarding, hover over the arrow icon and switch its status to On, then click on Manage Email Forwarding to set up email forwarders.

This will bring up the Email Forwarding tab. Here, you can easily add, delete, and edit email forwarders.

If no email forwarders exist for your email account, click the Create Email Forwarder button to create the first one.

In the Add Email Forwarder screen, enter the forwarding email address where you would like incoming email messages to redirect to and click Save.

As stated, you can add multiple forwarding email addresses to each email account (up to 10).
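To make the forwarding rules above concrete, here is a minimal sketch of one account's forwarders as a small data model. It is an illustration only, not Webmail's implementation: the class and method names are hypothetical, and the only facts taken from this guide are the On/Off status and the limit of 10 forwarders per account.

```python
from typing import List

MAX_FORWARDERS = 10  # per-account limit stated in this guide


class Forwarders:
    """Forwarding destinations for a single email account (hypothetical model)."""

    def __init__(self, address: str) -> None:
        self.address = address
        self.enabled = False  # corresponds to the On/Off status toggle
        self.targets: List[str] = []

    def add(self, target: str) -> None:
        """Add a forwarding address, ignoring duplicates and enforcing the limit."""
        target = target.strip().lower()
        if target in self.targets:
            return
        if len(self.targets) >= MAX_FORWARDERS:
            raise ValueError(f"{self.address} already has {MAX_FORWARDERS} forwarders")
        self.targets.append(target)

    def forward_targets(self) -> List[str]:
        """Addresses an incoming message is forwarded to right now."""
        return list(self.targets) if self.enabled else []


info = Forwarders("info@yourcompany.tld")
info.add("john@yourcompany.tld")
info.add("accounts@yourcompany.tld")
info.enabled = True
print(info.forward_targets())
```

With forwarding switched off, `forward_targets()` returns an empty list even though the forwarders remain saved on the account.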

Webmail Login

With Webmail, all emails are stored on our servers, so in addition to being able to access and view emails on any device, every webmail account includes a mailbox that can be accessed online directly via Webmail’s web browser interface.

There are several ways to log in and view emails.

Access Webmail From The Hub

To log into webmail directly via The Hub, you can go to the Email Account Management > Email Accounts screen of your domain, click the envelope icon next to the email account, and click on the Webmail Login link…

Or, if you are working inside an individual email account, just click on the Webmail Login link displayed in all of the account’s management screens…

This will log you directly into the webmail interface for that email account.

The Webmail interface should look familiar and feel intuitive to most users. If help using any of Webmail’s features is required, click the Help icon on the menu sidebar to access detailed help documentation.

Let’s look at other ways to access Webmail.

Access Webmail From The Hub Client

If you have set up your own branded client portal using The Hub Client plugin, your team members and clients can access and manage emails via Webmail, provided their user roles grant the necessary access permissions and SSO (Single Sign-On) is enabled.

This allows users to seamlessly log into an email account from your client portal without having to enter login credentials.

Direct Access URL

Another way to log into Webmail is via Direct Access URL.

To access webmail directly from your web browser for any email account, enter the following URL into your browser exactly as shown here: https://webmail.yourwpsite.email/, then enter the email address and password, and click “Login.”

Note: The above example uses our white labeled URL address webmail.yourwpsite.email to log into Webmail via a web browser. However, you can also brand your webmail accounts with your own domain so users can access their email from a URL like webmail.your-own-domain.tld.

For more details on how to set up your own branded domain URL, see our Webmail documentation.

Email Aliases

An email alias is a virtual email address that redirects emails to a primary email account. It serves as an alternative name for a single mailbox, enabling users to create multiple email addresses that all direct messages to the same inbox.

For instance, the following could all be aliases for the primary email address john@mysite.tld:

- sales@mysite.tld

- support@mysite.tld

- info@mysite.tld

Webmail lets you create up to 10 email aliases per email account.

To create an alias for an email account, click on the vertical ellipsis icon and select Add Alias.

Enter the alias username(s) you would like to create in the Add Alias modal and click Save.

Emails sent to any of these aliases will be delivered to your current email account.
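The way aliases resolve to a single mailbox can be sketched as a simple lookup. This is a minimal illustration under our own assumptions, not Webmail's internals: the function names are hypothetical, and we assume aliases live on the same domain as the primary address, since the Add Alias modal asks only for a username.

```python
from typing import Dict, Iterable

MAX_ALIASES = 10  # per-account limit stated in this guide


def build_alias_map(primary: str, alias_usernames: Iterable[str]) -> Dict[str, str]:
    """Map each alias address to the primary mailbox it delivers to."""
    usernames = list(alias_usernames)
    if len(usernames) > MAX_ALIASES:
        raise ValueError(f"at most {MAX_ALIASES} aliases per email account")
    domain = primary.split("@", 1)[1]
    return {f"{name.lower()}@{domain}": primary for name in usernames}


def resolve(recipient: str, alias_map: Dict[str, str]) -> str:
    """Return the mailbox that receives mail addressed to `recipient`."""
    return alias_map.get(recipient.lower(), recipient)


alias_map = build_alias_map("john@mysite.tld", ["sales", "support", "info"])
print(resolve("support@mysite.tld", alias_map))
```

Unlike a forwarder, an alias has no mailbox of its own in this model: every alias address simply points at the same primary inbox.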

Additional Email Management Features

In addition to the features and options found in the Email Accounts tab that we have just discussed, Webmail lets you manage various options and settings for each individual email account.

Let’s take a brief look at some of these options and settings.

Email Information

To manage an individual email account:

- Click on The Hub > Webmail to access the Email Accounts tab.

- Click on the domain you have set up to use Webmail.

- Click on the specific email account (i.e. the email address) you wish to manage.

Click on the Webmail management screens to access and manage individual email accounts.

The Email Information tab lets you edit your current email account and password and displays important information, such as status, creation date (this is the date your billing starts for this email account), storage used, and current email send limit.

In addition to the Email Information tab, you can click on the Email Forwarding tab to manage your email forwarders and the Email Aliases tab to manage your email aliases for your email account.

Note: Newly created accounts have send limits set up to prevent potential spamming and account suspension. These limits gradually increase over a two-week period, allowing email accounts to send up to 500 emails every 24 hours.

Coming soon, you will also be able to add more storage to your email accounts if additional space is required.

Now that we have drilled down and looked at all the management tabs for an individual email account, let’s explore some additional features of the Webmail Manager.

Go back to The Hub > Webmail and click on one of the email domains you have set up.

DNS Records

Click on the DNS Records tab to view the DNS Records of your email domain.

Note: The DNS Records tab is available to team members and client custom roles, so team members and clients can access these if you give them permission.

Configurations

Click on the Configurations tab to view and download configuration settings that allow you to set up email accounts in applications other than Webmail.

The Configurations tab is also available for both team member and client custom roles.

Client Association

If you want to allow clients to manage their own email accounts, you will need to set up your client account first, assign permissions to allow the client to view Webmail, then link the client account with the email domain in the Client Association tab.

After setting up your client in The Hub, navigate to the Client Association tab (The Hub > Webmail > Email Domain) and click on Add Client.

Select the client from the dropdown menu and click Add.

Notes:

- When you associate a client with an email domain, SSO for the email domain is disabled in The Hub. However, your client will be able to access Webmail login via The Hub Client plugin.

- The Client Association tab is only made available for team member custom roles.

Reseller Integration

We’re currently working on bringing full auto-provisioning of emails to our Reseller platform. Until this feature is released, you can manually resell emails to clients and bill them using the Clients & Billing tool.

Once Webmail has been fully integrated with our Reseller platform, you will be able to rebrand Webmail as your own and resell everything under one roof: hosting, domains, templates, plugins, expert support…and now business emails!

If you need help with Reseller, check out our Reseller documentation.

Congratulations! Now you know how to set up, manage, and resell Webmail in your business as part of your digital services.

Email Protocols – Quick Primer

WPMU DEV offers the convenience of using both IMAP and POP3 email.

Not sure what IMAP is, how it works, or how IMAP differs from POP3? Then read below for a quick primer on these email protocols.

What is IMAP?

IMAP (Internet Message Access Protocol) is a standard protocol used to retrieve emails from a mail server. It allows users to access their emails from multiple devices like a phone, laptop, or tablet, because it stores emails on the server, rather than downloading them to a single device.

Since emails are managed and stored on the server, this reduces the need for extensive local storage and allows for easy backup and recovery.

Additional points about IMAP:

- Users can organize emails into folders, flag them for priority, and save drafts on the server.

- It supports multiple email clients syncing with the server, ensuring consistent message status across devices.

- IMAP operates as an intermediary between the email server and client, enabling remote access from any device.

- When users read emails via IMAP, they’re viewing them directly from the server without downloading them locally.

- IMAP downloads messages only upon user request, enhancing efficiency compared to other protocols like POP3.

- Messages persist on the server unless deleted by the user.

- IMAP uses port 143, while IMAP over SSL/TLS uses port 993 for secure communication.
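As a rough illustration of the points above, the sketch below uses Python's standard `imaplib` module to open a TLS-protected IMAP connection on port 993 and count unread messages while leaving them on the server. The function name and the host in the usage comment are placeholders of our own; use the server details shown in your account's Configurations tab.

```python
import imaplib


def count_unseen(host: str, user: str, password: str,
                 port: int = imaplib.IMAP4_SSL_PORT) -> int:
    """Count unread messages in INBOX without downloading or changing them."""
    # IMAP4_SSL wraps the connection in TLS; its default port is 993.
    with imaplib.IMAP4_SSL(host, port) as conn:
        conn.login(user, password)
        # readonly=True keeps flags such as "seen" untouched on the server.
        conn.select("INBOX", readonly=True)
        status, data = conn.search(None, "UNSEEN")
        if status != "OK":
            raise RuntimeError("IMAP search failed")
        # data[0] is a space-separated byte string of message numbers.
        return len(data[0].split())


# Example call (placeholder host and credentials, not real settings):
# count_unseen("imap.example.invalid", "john@mysite.tld", "app-password")
```

Because the search runs on the server, only the list of matching message numbers travels over the connection, which reflects the "download only on request" behaviour described above.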

The advantages of using IMAP include the following:

- Multi-Device Access: IMAP supports multiple logins, allowing users to connect to the email server from various devices simultaneously.

- Flexibility: Unlike POP3, IMAP enables users to access their emails from different devices, making it ideal for users who travel frequently or need access from multiple locations.

- Shared Mailbox: A single IMAP mailbox can be shared by multiple users, facilitating collaboration and communication within teams.

- Organizational Tools: Users can organize emails on the server by creating folders and subfolders, enhancing their efficiency in managing email correspondence.

- Email Functions Support: IMAP supports advanced email functions such as search and sort, improving user experience and productivity.

- Offline Access: IMAP can be used offline, allowing users to access previously downloaded emails even without an internet connection.

There are some challenges to setting up and running your own IMAP service, which is why using a solution like WPMU DEV’s Webmail is highly recommended:

- Hosting an IMAP service can be resource-intensive, requiring more server storage and bandwidth to manage multiple connections and the storage of emails.

- Running IMAP securely requires implementing SSL/TLS encryption for email communication.

- Smaller businesses might find it challenging to allocate the necessary IT resources for managing an IMAP server efficiently.

IMAP vs POP3: What’s The Difference?

IMAP and POP3 are both client-server email retrieval protocols, but they are two different methods for accessing email messages from a server.

IMAP is designed for modern email users. It allows users to access their email from multiple devices because it keeps their emails on the server. When users read, delete, or organize their emails, these changes are synchronized across all devices.

For example, if you read an email on your phone, it will show as being read on your laptop as well.

POP3, on the other hand, is simpler and downloads emails from the server to a single device, then usually deletes them from the server. This means if users access their emails from a different device, they won’t see the emails that were downloaded to the first device.

For instance, if you download an email via POP3 on your computer, that email may not be accessible on your phone later.

Here are some of the key differences between IMAP and POP3:

Storage Approach

- IMAP: Users can store emails on the server and access them from any device. It functions more like a remote file server.

- POP3: Emails are saved in a single mailbox on the server and downloaded to the user’s device when accessed.

Access Flexibility

- IMAP: Allows access from multiple devices, enabling users to view and manage emails consistently across various platforms.

- POP3: Emails are typically downloaded to one device and removed from the server.

Handling of Emails

- IMAP: Maintains emails on the server, allowing users to organize, flag, and manage them remotely.

- POP3: Operates as a “store-and-forward” service, where emails are retrieved and then removed from the server.

In practice, IMAP is better suited to users who want to manage their emails from multiple devices or locations, offering greater flexibility and synchronization. POP3 could be considered for situations where email access is primarily from a single device, or where there is a need to keep local copies of emails while removing them from the server to save space.

Essentially, IMAP prioritizes remote access and centralized email management on the server, while POP3 focuses on downloading and storing emails locally.
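The difference can be sketched with a toy in-memory model. This is a deliberate simplification rather than either protocol's real wire behaviour: the class names are our own, and the POP3 client models the common "download, then delete from the server" default described above.

```python
from typing import Dict, List, Set


class Server:
    """Toy mail server: message id -> set of flags such as "seen"."""

    def __init__(self, messages: List[str]) -> None:
        self.mailbox: Dict[str, Set[str]] = {m: set() for m in messages}


class ImapClient:
    """Reads and flags messages in place on the server."""

    def __init__(self, server: Server) -> None:
        self.server = server

    def read(self, msg_id: str) -> None:
        self.server.mailbox[msg_id].add("seen")  # state lives on the server

    def unread(self) -> List[str]:
        return sorted(m for m, flags in self.server.mailbox.items()
                      if "seen" not in flags)


class Pop3Client:
    """Downloads everything to local storage, then empties the server mailbox."""

    def __init__(self, server: Server) -> None:
        self.server = server
        self.local: Dict[str, Set[str]] = {}

    def download_all(self) -> None:
        self.local.update(self.server.mailbox)
        self.server.mailbox.clear()


# IMAP: reading on the phone is reflected on the laptop.
imap_server = Server(["m1", "m2"])
phone, laptop = ImapClient(imap_server), ImapClient(imap_server)
phone.read("m1")
print(laptop.unread())

# POP3: the first device to download takes the messages with it.
pop_server = Server(["m1", "m2"])
desktop, phone_pop = Pop3Client(pop_server), Pop3Client(pop_server)
desktop.download_all()
phone_pop.download_all()
print(sorted(desktop.local), sorted(phone_pop.local))
```

In the IMAP half, both clients share one source of truth on the server. In the POP3 half, the second device finds an empty mailbox because the first one already removed the messages.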

Professional Business Email For Your Clients

Integrating email hosting, particularly IMAP, with web hosting to create a seamless platform for managing client websites and emails under one roof is challenging, costly, and complex.

With WPMU DEV’s Webmail, you can enhance your email management capabilities and provide clients with affordable, professional business email that is easy to use, does not require a third-party app, and works no matter where their domain is hosted.

Note: If you don’t require the full features of IMAP email for a site hosted with WPMU DEV, we also offer the option to create POP3 email accounts with our hosted email. These accounts can be linked to any email client of your choice, ensuring flexibility and convenience.

If you’re yet to set up a WPMU DEV account, we encourage you to become an Agency member. It’s 100% risk-free and includes everything you need to manage your clients and resell services like hosting, domains, emails, and more, all under your own brand.

If you’re already an Agency member, then head over to your Hub and click on Webmail to get started. If you need any help, our support team is available 24×7 (or ask our AI assistant) and you can also check out our extensive webmail documentation.