1. Choice of Programming Language

- The available languages depend on the cluster mode. A cluster runs in one of two modes: Standard or High Concurrency. A High Concurrency cluster supports R, Python, and SQL, whereas a Standard cluster supports Scala, Java, SQL, Python, and R.

- Spark, the underlying processing engine of Databricks, is developed in Scala. Because Spark itself is written in Scala, Scala generally performs better than Python and SQL. Hence, for a Standard cluster, Scala is the recommended language for developing Spark jobs.

2. ADF for Invoking Databricks Notebooks

- Eliminate Hardcoding: In some scenarios, Databricks needs configuration information about other Azure services, such as the storage account name or the database server name. The ADF pipeline stores these configuration details in pipeline variables. When the pipeline invokes the Databricks notebook, the details are passed from the pipeline variables to Databricks widget variables, which eliminates hardcoding in the notebooks.

![Databricks Notebook Settings]()

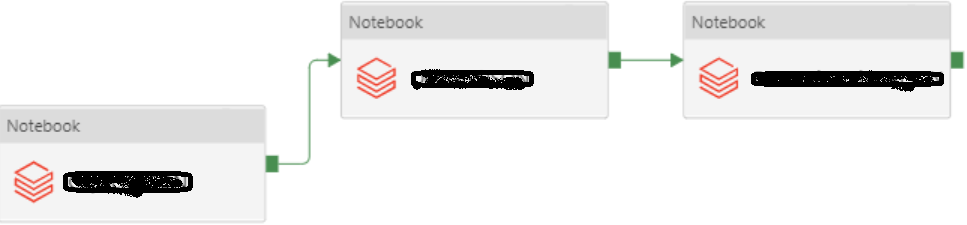

- Notebook Dependencies: It is easier to establish notebook dependencies in ADF than in Databricks itself. When a run fails, a series of notebook invocations in an ADF pipeline is also convenient to debug.

- Lower Cost: When a notebook is invoked through ADF, the ephemeral job cluster pattern is used to process the Spark job, because the lifecycle of the cluster is tied to the job lifecycle. These short-lived clusters cost less than clusters created through the Databricks UI.
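To illustrate the "Eliminate Hardcoding" point, here is a minimal Python sketch of how a notebook might collect the values that ADF passes in, rather than embedding them in the code. It assumes the values arrive as notebook widgets and are read with `dbutils.widgets.get`. The helper `read_config` and the widget names `storage_account_name` and `database_server_name` are hypothetical and would need to match the parameter names configured on the ADF Notebook activity.

```python
from typing import Callable, Dict, Iterable


def read_config(get_widget: Callable[[str], str], names: Iterable[str]) -> Dict[str, str]:
    """Collect configuration values supplied by the caller (e.g. ADF).

    `get_widget` is any function that maps a widget name to its string value.
    """
    config = {}
    for name in names:
        value = get_widget(name)
        if not value:
            # Fail early instead of silently running with an empty setting.
            raise ValueError(f"Missing configuration value for widget '{name}'")
        config[name] = value
    return config


# Hypothetical widget names (assumption: they mirror the ADF parameter names).
WIDGET_NAMES = ["storage_account_name", "database_server_name"]

# Inside a Databricks notebook, pass the real getter:
#   config = read_config(dbutils.widgets.get, WIDGET_NAMES)
# Outside Databricks, a plain dict lookup stands in for the widgets:
local_values = {
    "storage_account_name": "examplestorage",
    "database_server_name": "example-sql-server",
}
config = read_config(local_values.__getitem__, WIDGET_NAMES)
print(config["storage_account_name"])
```

Passing the getter in as an argument keeps the notebook logic free of environment-specific values and lets the same function be exercised locally with a dictionary.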

3. Using Widget Variables

The configuration details are made accessible to the Databricks code through widget variables. The configuration data is transferred from pipeline variables to widget variables when the notebook is invoked in the ADF pipeline. During development, to model the behavior of a notebook run by ADF, widget variables are created manually using the following line of code.