So why did we build our messaging service using Apache Pulsar?

At DataStax, our mission is to empower developers to build cloud-native distributed applications by making cloud-agnostic, high-performance messaging technology easily available to everyone. Developers want to write distributed applications or microservices but don’t want the hassle of managing complex message infrastructure or getting locked into a particular cloud vendor. They need a solution that just works. Everywhere.

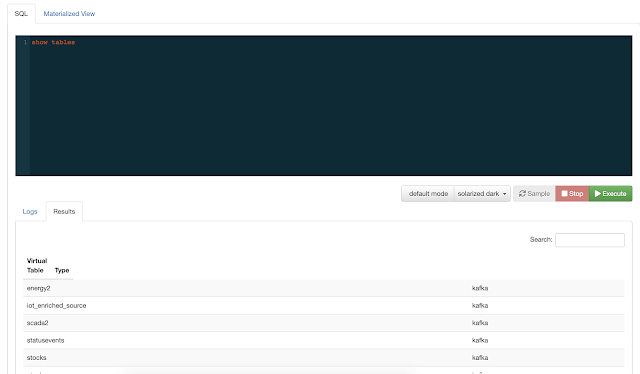

KSQL is a SQL streaming engine for Apache Kafka. It provides an easy-to-use, yet powerful interactive SQL interface for stream processing on Kafka, without the need to write code in a programming language like Java or Python. KSQL is scalable, elastic, and fault-tolerant. It supports a wide range of streaming operations, including data filtering, transformations, aggregations, joins, windowing, and sessionization.

KSQL is a SQL streaming engine for Apache Kafka. It provides an easy-to-use, yet powerful interactive SQL interface for stream processing on Kafka, without the need to write code in a programming language like Java or Python. KSQL is scalable, elastic, and fault-tolerant. It supports a wide range of streaming operations, including data filtering, transformations, aggregations, joins, windowing, and sessionization.