An Introduction to Graph Data

This article is an excerpt from the book Machine Learning with PyTorch and Scikit-Learn from the best-selling Python Machine Learning series, updated and expanded to cover PyTorch, transformers, and graph neural networks.

Broadly speaking, graphs are a way to describe and capture relationships in data. A graph is a particular kind of data structure that is nonlinear and abstract. Because graphs are abstract objects, a concrete representation must be defined before we can operate on them. Furthermore, graphs can be defined to have certain properties that may require different representations. Figure 1 summarizes the common types of graphs, which we will discuss in more detail in the following subsections.
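Two widely used concrete representations are the adjacency matrix and the adjacency list. The minimal Python sketch below builds both for a small hypothetical graph, in undirected and directed variants. The function names and the example edge list are illustrative assumptions, not code from the book.

```python
def adjacency_matrix(num_nodes, edges, directed=False):
    """Return a num_nodes x num_nodes 0/1 adjacency matrix as nested lists."""
    matrix = [[0] * num_nodes for _ in range(num_nodes)]
    for u, v in edges:
        matrix[u][v] = 1
        if not directed:
            matrix[v][u] = 1  # undirected edges are symmetric
    return matrix


def adjacency_list(num_nodes, edges, directed=False):
    """Return a dict mapping each node to a sorted list of its neighbors."""
    neighbors = {node: [] for node in range(num_nodes)}
    for u, v in edges:
        neighbors[u].append(v)
        if not directed:
            neighbors[v].append(u)
    return {node: sorted(adj) for node, adj in neighbors.items()}


# Hypothetical 4-node graph given as an edge list.
edges = [(0, 1), (1, 2), (2, 0), (2, 3)]
print(adjacency_matrix(4, edges))                 # [[0, 1, 1, 0], [1, 0, 1, 0], [1, 1, 0, 1], [0, 0, 1, 0]]
print(adjacency_list(4, edges))                   # {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
print(adjacency_matrix(4, edges, directed=True))  # [[0, 1, 0, 0], [0, 0, 1, 0], [1, 0, 0, 1], [0, 0, 0, 0]]
```

The matrix always needs memory proportional to the square of the node count. The list grows with the number of edges, which usually makes it the better choice for large sparse graphs.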

Modernizing Computer Vision With Deep Neural Networks

As discussed earlier, computer vision has been one of the most popular and well-researched automation topics in recent years. Alongside its advantages and uses, however, computer vision faces challenges in modern applications, many of which deep neural networks can help address efficiently.

1. Network Compression

With the soaring demand for computing power and storage, deploying deep neural network applications is challenging. Consequently, when implementing a neural network model for computer vision, considerable effort goes into increasing the model's accuracy while reducing its complexity.
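The paragraph does not name a specific compression method. As one illustrative example, the sketch below shows magnitude-based weight pruning: the smallest-magnitude weights in a layer are set to zero, on the assumption that they contribute least to the output. The function names and the weight values are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude entries set to 0.0.

    weights: matrix as a list of rows; sparsity: fraction in [0, 1) of entries to prune.
    Entries tied with the threshold are pruned too, so slightly more may be removed.
    """
    magnitudes = sorted(abs(w) for row in weights for w in row)
    k = int(len(magnitudes) * sparsity)  # number of entries to prune
    if k == 0:
        return [row[:] for row in weights]
    threshold = magnitudes[k - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


def density(weights):
    """Fraction of non-zero entries, i.e. how much of the matrix still has to be stored."""
    total = sum(len(row) for row in weights)
    nonzero = sum(1 for row in weights for w in row if w != 0.0)
    return nonzero / total


layer = [[0.5, -0.1, 0.03], [-0.8, 0.2, -0.05]]  # hypothetical trained weights
pruned = magnitude_prune(layer, sparsity=0.5)
print(pruned)           # [[0.5, 0.0, 0.0], [-0.8, 0.2, 0.0]]
print(density(pruned))  # 0.5
```

In practice, pruning is usually followed by fine-tuning to recover accuracy. The zeros only save memory or compute when the weights are stored or executed in a sparse format.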

Online Machine Learning: Into the Deep

Introduction

Online learning is a branch of machine learning that has attracted significant interest in recent years because its characteristics suit many kinds of tasks in today's world. Let's dive deeper into this topic.

What Exactly Is Online Learning?

In traditional machine learning, often called batch learning, the training data is first gathered in its entirety and then a chosen machine learning model is trained on said data: the resulting model is then deployed to make predictions on new unseen data.
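Online learning, by contrast, updates the model incrementally as each new sample arrives. A common way to do this is stochastic gradient descent, applied one example at a time. The sketch below is a minimal illustration under these assumptions: a linear model with squared loss, and a hypothetical noiseless data stream following y = 2x + 1. Each sample is used for one update and then discarded, so the full dataset never needs to be held in memory.

```python
def sgd_update(weights, bias, x, y, lr):
    """One online update of a linear model y_hat = w . x + b under squared loss."""
    y_hat = sum(w * xi for w, xi in zip(weights, x)) + bias
    error = y_hat - y  # derivative of 0.5 * (y_hat - y) ** 2 with respect to y_hat
    new_weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    new_bias = bias - lr * error
    return new_weights, new_bias


def stream():
    """Hypothetical data stream following y = 2x + 1, delivered one sample at a time."""
    for i in range(2000):
        x = (i % 10) / 10.0
        yield [x], 2.0 * x + 1.0


weights, bias = [0.0], 0.0
for x, y in stream():
    # Learn from the sample, then move on; nothing is stored.
    weights, bias = sgd_update(weights, bias, x, y, lr=0.1)
print(weights, bias)  # close to [2.0] and 1.0
```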

Graph-Based Recommendation System With Milvus

Background

A recommendation system (RS) can identify user preferences based on their historical data and suggest products or items to them accordingly. Companies can reap considerable economic benefits from a well-designed recommendation system.

A complete recommendation system has three elements: the user model, the object model, and the core element, the recommendation algorithm. Established algorithms currently include collaborative filtering, latent semantic modeling, graph-based modeling, combined recommendation, and more. In this article, we will provide brief instructions on how to use Milvus to build a graph-based recommendation system.
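To illustrate the general idea behind graph-based modeling, the sketch below treats users and items as nodes of a bipartite graph. It recommends unseen items that are reachable from a user through other users who share items with them, counting user → item → user → item paths. This sketch does not use Milvus or its API. It is a generic, hypothetical example with made-up interaction data.

```python
from collections import Counter, defaultdict


def build_graph(interactions):
    """Build a bipartite user-item graph as two adjacency dicts."""
    user_items = defaultdict(set)
    item_users = defaultdict(set)
    for user, item in interactions:
        user_items[user].add(item)
        item_users[item].add(user)
    return user_items, item_users


def recommend(user, user_items, item_users, top_k=3):
    """Score unseen items by counting user -> item -> user -> item paths."""
    seen = user_items[user]
    scores = Counter()
    for item in seen:
        for neighbor in item_users[item]:
            if neighbor == user:
                continue
            for candidate in user_items[neighbor]:
                if candidate not in seen:
                    scores[candidate] += 1
    # Sort by descending score, then by item name for a deterministic order.
    ranked = sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))
    return [item for item, _ in ranked[:top_k]]


# Hypothetical interaction log: (user, item) pairs.
interactions = [
    ("alice", "book"), ("alice", "lamp"),
    ("bob", "book"), ("bob", "pen"), ("bob", "mug"),
    ("carol", "lamp"), ("carol", "pen"),
    ("dave", "mug"),
]
user_items, item_users = build_graph(interactions)
print(recommend("alice", user_items, item_users))  # ['pen', 'mug']
```

Here "pen" ranks first for alice because two paths reach it, one through bob via "book" and one through carol via "lamp".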

How to Integrate HUAWEI ML Kit’s Image Super-Resolution Capability

Have you ever been sent compressed images that have poor definition? Even when you zoom in, the image is still blurry. I recently received a ZIP file of travel photos from a trip I went on with a friend. After opening it, I found to my dismay that each image was either too dark, too dim, or too blurry. How was I going to show off with such terrible photos? So, I sought help from the Internet, and luckily, I came across HUAWEI ML Kit's image super-resolution capability. The amazing thing is that this SDK is free of charge and can be used with all Android phones.

Background

ML Kit's image super-resolution capability is backed by a deep neural network and provides two super-resolution capabilities for mobile apps:

A Friendly Introduction to Graph Neural Networks

Graph Neural Networks Explained

Graph neural networks (GNNs) belong to a category of neural networks that operate naturally on data structured as graphs. Although the topic can seem confusing, GNNs can be distilled into just a handful of simple concepts.
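One of those core concepts is message passing: each node updates its representation by combining its own features with an aggregate of its neighbors' features. The minimal sketch below shows a single layer that uses mean aggregation followed by a ReLU. It is a simplified illustration, not a specific published architecture. The scalar weights are an assumption standing in for the learned weight matrices of a real GNN, and the graph and features are hypothetical.

```python
def message_passing_layer(features, neighbors, self_weight, neighbor_weight):
    """One simplified GNN layer with mean aggregation and a ReLU.

    For each node v: h_v' = relu(self_weight * h_v + neighbor_weight * mean(h_u for u in N(v))).
    features: dict node -> feature vector (list of floats).
    neighbors: dict node -> list of neighboring nodes.
    The scalar weights stand in for learned weight matrices.
    """
    updated = {}
    for node, h in features.items():
        nbrs = neighbors.get(node, [])
        if nbrs:
            aggregated = [sum(features[u][i] for u in nbrs) / len(nbrs) for i in range(len(h))]
        else:
            aggregated = [0.0] * len(h)  # isolated node: no incoming messages
        updated[node] = [
            max(0.0, self_weight * h_i + neighbor_weight * a_i)
            for h_i, a_i in zip(h, aggregated)
        ]
    return updated


# Hypothetical path graph 0 - 1 - 2 with 2-dimensional node features.
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
neighbors = {0: [1], 1: [0, 2], 2: [1]}
out = message_passing_layer(features, neighbors, self_weight=0.5, neighbor_weight=1.0)
print(out)  # {0: [0.5, 1.0], 1: [1.0, 1.0], 2: [0.5, 1.5]}
```

Stacking k such layers lets information travel up to k hops across the graph.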

Starting With Recurrent Neural Networks (RNNs)

We'll pick a likely familiar starting point: recurrent neural networks. As you may recall, recurrent neural networks are well suited to data arranged in a sequence, such as time series data or language. The defining feature of a recurrent neural network is that its state depends not only on the current input but also on the network's previous hidden state. There have been many improvements to RNNs over the years, generally falling under the category of LSTM-style RNNs with a multiplicative gating function between the current and previous hidden state of the model. A review of LSTM variants and their relation to vanilla RNNs can be found here.
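The dependence on the previous hidden state can be made concrete with the standard vanilla RNN update, h_t = tanh(W_x x_t + W_h h_{t-1} + b). The sketch below implements it with plain Python lists. It has no LSTM gating, and the weight values are hypothetical.

```python
import math


def rnn_step(x, h_prev, W_x, W_h, b):
    """Vanilla RNN update: h_t = tanh(W_x x_t + W_h h_{t-1} + b), using plain lists."""
    h_new = []
    for i in range(len(b)):
        pre_activation = b[i]
        pre_activation += sum(W_x[i][j] * x[j] for j in range(len(x)))
        pre_activation += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_new.append(math.tanh(pre_activation))
    return h_new


def run_rnn(sequence, W_x, W_h, b):
    """Process a sequence step by step, carrying the hidden state forward."""
    h = [0.0] * len(b)  # initial hidden state
    for x in sequence:
        h = rnn_step(x, h, W_x, W_h, b)
    return h


# Hypothetical weights: 1 input feature, 2 hidden units.
W_x = [[0.5], [-0.3]]
W_h = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.1]

# Both sequences end with the same input, but the final states differ
# because each state also depends on the previous hidden state.
h_a = run_rnn([[1.0], [0.0]], W_x, W_h, b)
h_b = run_rnn([[-1.0], [0.0]], W_x, W_h, b)
print(h_a, h_b)
```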

What Can You Do With the OpenAI GPT-3 Language Model?

Exploring GPT-3: A New Breakthrough in Language Generation

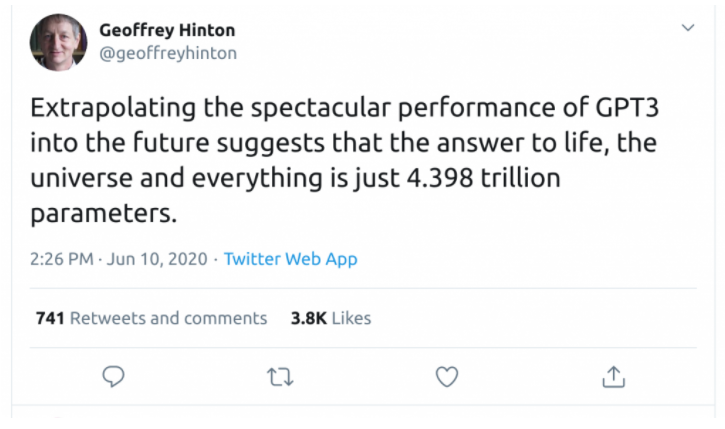

Substantial enthusiasm surrounds OpenAI’s GPT-3 language model, recently made accessible to beta users of the “OpenAI API.”

Forward and Back-Propagation Programming Technique/Steps to Train an Artificial Neural Net

This write-up is especially for those who want to try their hand at coding an artificial neural net. The mathematics behind it doesn't need explaining by someone like me, a programmer rather than a scientist or researcher, and there are numerous training videos you can learn from. I went through Prof. Patrick Winston's class on MIT OpenCourseWare and came to understand how the feed-forward and back-propagation technique works.

In this article, I will explain the steps you need to follow to build a fully configurable ANN program, one that supports any number of input features, hidden layers, neurons in each hidden layer, and output neurons. I encourage you to write your own custom program following these steps. As long as we follow programming best practices and test the program's efficiency and performance, we are good to go.
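As a starting point for such a program, here is a minimal sketch of a configurable feed-forward network trained by back-propagation. The sketch makes several assumptions: sigmoid activations in every layer, a squared-error loss, plain per-sample gradient descent, and standard-library Python only. The class and method names are hypothetical and are not the author's implementation.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class NeuralNet:
    """Fully connected feed-forward net with sigmoid activations in every layer.

    layer_sizes, e.g. [2, 4, 3, 1]: input features, any number of hidden layers, outputs.
    """

    def __init__(self, layer_sizes, seed=0):
        rng = random.Random(seed)
        # weights[l][j][i] connects neuron i in layer l to neuron j in layer l + 1.
        self.weights = [
            [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
        ]
        self.biases = [[0.0] * n_out for n_out in layer_sizes[1:]]

    def forward(self, x):
        """Feed-forward pass; returns the activations of every layer, input included."""
        activations = [list(x)]
        for W, b in zip(self.weights, self.biases):
            prev = activations[-1]
            activations.append([
                sigmoid(sum(w * a for w, a in zip(row, prev)) + bias)
                for row, bias in zip(W, b)
            ])
        return activations

    def train_step(self, x, target, lr=0.5):
        """Forward pass plus back-propagation of 0.5 * sum((y - t) ** 2); returns the pre-update loss."""
        acts = self.forward(x)
        # Output-layer deltas: dE/dz = (y - t) * y * (1 - y) for sigmoid outputs.
        deltas = [(y - t) * y * (1 - y) for y, t in zip(acts[-1], target)]
        for layer in reversed(range(len(self.weights))):
            prev = acts[layer]
            W = self.weights[layer]
            if layer > 0:
                # Propagate deltas backward using the weights *before* this layer is updated.
                prev_deltas = [
                    sum(W[j][i] * deltas[j] for j in range(len(deltas))) * prev[i] * (1 - prev[i])
                    for i in range(len(prev))
                ]
            for j, delta in enumerate(deltas):
                for i, a in enumerate(prev):
                    W[j][i] -= lr * delta * a
                self.biases[layer][j] -= lr * delta
            if layer > 0:
                deltas = prev_deltas
        return 0.5 * sum((y - t) ** 2 for y, t in zip(acts[-1], target))


net = NeuralNet([2, 4, 3, 1])  # 2 inputs, two hidden layers (4 and 3 neurons), 1 output
first_loss = net.train_step([1.0, 0.0], [1.0])
for _ in range(200):
    last_loss = net.train_step([1.0, 0.0], [1.0])
print(first_loss, last_loss)  # the loss should shrink as training proceeds
```

Changing the `layer_sizes` list is all it takes to reconfigure the number of inputs, hidden layers, neurons per layer, and outputs.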

How AI Is Capable of Defeating Biometric Authentication Systems

Biometric authentication systems and artificial intelligence (AI) are among the most powerful weapons for fighting fraud in the payment card and e-commerce industries. That was an important point from a recent presentation by MasterCard's vice president for big data consulting.

In his recent virtual presentation, "Unlock the Power of Data," at Dell Technologies, Nick offered an inside look at how MasterCard combines machine learning and artificial intelligence with biometric technology to make card transactions smooth and frictionless while maintaining robust end-to-end security.

5 Types of LSTM Recurrent Neural Networks and What to Do With Them

The Primordial Soup of Vanilla RNNs and Reservoir Computing

Using past experience for improved future performance is a cornerstone of deep learning and of machine learning in general. One definition of machine learning lays out the importance of improving with experience explicitly:

A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.

Transfer Learning Made Easy: Coding a Powerful Technique

Artificial Intelligence for the Average User

Artificial intelligence (AI) is shaping up to be the most powerful and transformative technology to sweep the globe, touching all facets of life (economics, healthcare, finance, industry, socio-cultural interactions, and more) in an unprecedented manner. Advances in transfer learning and other machine learning capabilities only strengthen this trend.


We already use AI technologies daily, and they affect our lives and choices whether we are conscious of it or not. AI is behind Google search and navigation, Netflix movie recommendations, Amazon purchase suggestions, voice assistants such as Siri or Alexa, Facebook community building, medical diagnoses, credit score calculations, and mortgage decisions, and its adoption is only going to grow.

Build Your First Neural Network With Eclipse Deeplearning4j

In the previous article, we introduced deep learning and neural networks. Here, we will explore how to design a network for the task we want to solve.

There is an enormous number of parameters and topology choices to deal with when working with neural networks. How many hidden layers should I set up? What activation function should they use? What are good values for the learning rate? Should I use a classical multilayer neural network, a convolutional neural network (CNN), a recurrent neural network (RNN), or one of the other available architectures? These questions are just the tip of the iceberg when deciding to approach a problem with these techniques.
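To see why these questions are only the tip of the iceberg, note that independent design choices multiply. The short sketch below enumerates a small hypothetical search space covering the four questions above. It is plain Python, not Deeplearning4j code, and the candidate values are illustrative rather than recommendations.

```python
from itertools import product

# Hypothetical search space; the values are illustrative, not recommendations.
search_space = {
    "architecture": ["MLP", "CNN", "RNN"],
    "hidden_layers": [1, 2, 3],
    "activation": ["relu", "tanh", "sigmoid"],
    "learning_rate": [0.1, 0.01, 0.001],
}


def configurations(space):
    """Yield every combination of the choices as a dict (a full grid)."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


configs = list(configurations(search_space))
print(len(configs))  # 3 * 3 * 3 * 3 = 81
print(configs[0])    # {'architecture': 'MLP', 'hidden_layers': 1, 'activation': 'relu', 'learning_rate': 0.1}
```

Adding a single further choice with three options would triple the grid to 243 candidate networks. This growth is why exhaustive search quickly becomes impractical.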

Positive Impact of Graph Technology and Neural Networks on Cybersecurity

Breaches on the Rise

The Equifax security breach was among the worst ever in terms of the number of people affected and the type of information breached. Information such as names, SSNs, birth dates, and addresses is considered the Holy Grail of personal data, because it allows hackers to gain access to anyone's personal, financial, and health records.

While frequent security breaches have caused considerable anxiety in corporate America, it is the complexity of managing cybersecurity, and the many questions that remain unanswered, that really make enterprises nervous.

Deep Learning and the Human Brain: Inspiration, Not Imitation

Artificial intelligence is the future. It is often perceived almost as a single entity influencing every technology. Machine learning is one of the sciences behind this entity, and deep learning is the engine that propels that science.

Deep learning can process volumes of data far beyond human capacity. With the rush of data and the advent of faster GPUs and TPUs, deep learning is making giant strides in image analysis, facial recognition, autonomous driving, and more.