4 Pitfalls To Be Aware of When Adopting a Hybrid Cloud

Companies making a beeline for cloud services is old news. The Flexera State of the Cloud Report 2021 revealed that the average company’s IT infrastructure setup already runs five different cloud mechanisms on which their data transfer, computing, and networking take place.

This means companies are already exposed to a combination of on-premises servers, public and private clouds. Cloud industry leaders, on being asked how they pulled off their move to the hybrid cloud in a survey by 451 Research, shared some interesting information:

Cloud Observability – Understand the Complex State of Your System

Cloud-based systemic optimization is central to the agility of a business in today’s post-COVID world. While the accelerating trend to embrace the cloud is good, the key focus should lie in identifying and outmaneuvering any future uncertainties. Nobody expected to be forced to work from home, nor did some industries expect such volatile growth in traffic and demand. For example, Netflix saw a sharp spike of 16 million new subscribers, highlighting the unprecedented growth in demand and the need for systems that can handle it.

With the growing scale and complexity of systems architecture and the new challenges this brings, IT professionals are under high pressure to identify anomalies, derive solutions for them, and ensure that they don’t arise again. To achieve that, enterprises are looking at Observability.

Efficient Model Training in the Cloud with Kubernetes, TensorFlow, and Alluxio

Alibaba Cloud Container Service Team Case Study

This article presents the collaboration of Alibaba, Alluxio, and Nanjing University in tackling the problem of Deep Learning model training in the cloud. Various performance bottlenecks are analyzed with detailed optimizations of each component in the architecture. Our goal was to reduce the cost and complexity of data access for Deep Learning training in a hybrid environment, which resulted in an over 40% reduction in training time and cost.

1. New Trends in AI: Kubernetes-Based Deep Learning in The Cloud

Background

Artificial neural networks are trained with increasingly massive amounts of data, driving innovative solutions to improve data processing. Distributed Deep Learning (DL) model training can take advantage of multiple technologies, such as:

Cloud factory – Common architectural elements

In our previous article from this series we introduced a use case for a cloud factory, deploying multiple private clouds based on one code base using the principles of Infrastructure as Code.

We laid out how we approached the use case and how portfolio solutions form the basis for researching a generic architecture.

What Is Cloud Testing: Everything You Need To Know

Several years back, virtualization became a buzzword in the industry that flourished, evolved, and became famously known as Cloud computing. It involved sharing computing resources on different platforms, acted as a tool to improve scalability, and enabled effective IT administration and cost reduction. In other words, it includes sharing services like programming, infrastructure, platforms, and software on-demand on the cloud via the internet.

To verify the quality of everything that is rendered in the cloud environment, Cloud testing is performed by running manual testing, automated testing, or both. The entire process of Cloud Testing is operated online with the help of the required infrastructure. This primarily helps QA teams deal with challenges like the limited availability of devices, browsers, and operating systems. It also removes geographical limitations, large infrastructure setups, and process maintenance, making testing on the cloud easier, faster, and more manageable.

Building Hybrid Multi-Cloud Event Mesh With Apache Camel and Kubernetes

Part 1 || Part 2 || Part 3

This blog is part two of my three blogs on how to build a hybrid multi-cloud event mesh with Camel. In this part, I go over the more technical aspects and walk through how I set up the event mesh demo.

Why You Should Care About Service Meshes

Many developers wonder why they should care about service meshes. It's a question I'm asked often in my presentations at developer meetups, conferences, and hands-on workshops about microservices development with cloud-native architecture. My answer is always the same: "As long as you want to simplify your microservices architecture, it should be running on Kubernetes."

Concerning simplification, you probably also wonder why distributed microservices must be designed in such a complex way to run on Kubernetes clusters. As this article explains, many developers address the complexity of a microservices architecture with a service mesh and gain additional benefits by adopting a service mesh in production.

Top Trends in Cloud Computing in 2021

The popularity of cloud computing has transformed how companies do business. This transformation is inevitable. Industries around the world are deploying the latest technological innovations to help their businesses succeed. Since these solutions provide more flexibility and better data management options, companies are now realizing the significance of cloud computing for enterprise software development. According to Cisco, cloud data centers now process almost 94% of all workloads.

Top tech trends such as AI and IoT will continue to rise, but the most significant predictions concern how businesses will overcome challenges in cloud computing. The future of cloud computing seems bright: even industry experts believe that cloud computing is the "next big thing" at the forefront of all trending technologies. Total spending on the cloud is projected to grow at a 16% CAGR through 2026, which suggests that cloud architecture is here to stay and will continue to help businesses achieve major goals.

Introduction To Google Anthos

Introduction

Google has put over a decade’s worth of work into formulating the newly released Anthos. Anthos is the bold culmination and expansion of many admired container products, including Linux Containers, Kubernetes, GKE (Google Kubernetes Engine), and GKE On-Prem. It has been over a year since the general availability of Anthos was announced, and the platform marks Google’s official step into enterprise data center management.

Anthos is the first next-gen tech multi-cloud platform supporter designed by a mainstream cloud provider. The platform’s unique selling point lies in application deployment capability across multiple environments, whether on-premises data centers, Google cloud, other clouds, or even existing Kubernetes clusters.

Reducing Data Latency With Geographically Distributed Databases

Introduction

Do you ever have those moments where you know you’re thinking faster than the app you’re using? You click something and have time to think “what’s taking so long?” It’s frustrating, to say the least, but it’s an all-too-common problem in modern applications. A driving factor of this delay is latency, caused by offloading processing from the app to an external server. More often than not, that external server is a monolithic database residing in a single cloud region. This article will dig into some of the existing architectures that cause this issue and provide solutions on how to resolve them.

Latency Defined

Before we get ahead of ourselves, let’s define “latency.” In a general sense, latency measures the duration between an action and a response. In user-facing applications, that can be narrowed down to the delay between when a user makes a request and when the application responds to a request. As a user, I don’t really care what is causing the delay resulting in a poor user experience; I just want it to go away. In a typical cloud application architecture, latency is caused by the internet and the time it takes to make requests back and forth from the user’s device and the cloud, referred to as internet latency. There is also processing time to consider; the time it takes to actually execute the request, which is referred to as operational latency. This article will focus on internet latency with a hint of operational latency. If you’re interested in other types of latency, TechTarget has a good deep dive into specifics of the term.
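The split between internet latency and operational latency can be made concrete with a small timing sketch. This is only an illustration under stated assumptions: `handle_request` and `timed_call` are hypothetical names, and the network hops are simulated with `time.sleep` rather than real requests.

```python
import time


def handle_request(work_seconds):
    """Hypothetical server handler; returns how long processing took."""
    start = time.perf_counter()
    time.sleep(work_seconds)  # stand-in for executing the request
    return time.perf_counter() - start


def timed_call(network_delay_seconds, work_seconds):
    """Simulate one round trip and split the delay the user experiences.

    The one-way network delay is an assumed, simulated value.
    """
    start = time.perf_counter()
    time.sleep(network_delay_seconds)           # request travels to the cloud
    operational = handle_request(work_seconds)  # operational latency
    time.sleep(network_delay_seconds)           # response travels back
    total = time.perf_counter() - start
    return {
        "total": total,
        "operational": operational,
        "internet": total - operational,  # everything that was not processing
    }


if __name__ == "__main__":
    result = timed_call(network_delay_seconds=0.05, work_seconds=0.01)
    for name, seconds in result.items():
        print(f"{name}: {seconds * 1000:.1f} ms")
```

In this simulated setup the internet portion dominates the total, which is the situation a database closer to the user is meant to improve.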

Geo-Distributed Data Lakes Explained

Geo-Distributed Data Lake is quite the mouthful. It’s a pretty interesting topic and I think you will agree after finishing this breakdown. There is a lot to say about how awesome it is to combine the flexibility of a data lake with the power of a distributed architecture, but I’ll get more into the benefits of both as a joint solution later. To start, I want to look at geo-distributed data lakes in two parts before we marry them together. For my non-developer brain, that made the most sense! No time to waste, let’s kick things off with the one and only… data lakes.

It’s a Data LAKE, Not Warehouse!

It shouldn’t be a shock to the system to point out that we are living in a data-driven world going into 2021. Because of this, 'data lake' is a fitting term for the amount of data companies are collecting. In my opinion, we could probably start calling them data oceans, expansive and seemingly never-ending. So what is a data lake exactly?

Oops, We’re Multi-Cloud: A Hitchhiker’s Guide to Surviving

Over the last few years, enterprises have adopted multi-cloud strategies in an effort to increase flexibility and choice and reduce vendor lock-in. According to Flexera's 2020 State of the Cloud Report, most companies embrace multi-cloud, with 93% of enterprises having a multi-cloud strategy. In a recent Gartner survey of public cloud users, 81% of respondents said they are working with two or more providers. Multi-cloud makes so many things more complicated that you need a damn good reason to justify it. At Humanitec, we see hundreds of ops and platform teams a year, and I am often surprised that there are several valid reasons to go multi-cloud. I also observe that the teams that succeed are those that take the remodeling of workflows and tooling setups seriously.

What Is Multi-Cloud Computing?

Put simply, multi-cloud means that an application, or several parts of it, runs on different cloud providers. These may be public or private, but typically include at least one public provider. It may mean that data storage or specific services run on one cloud provider and others on another. Your entire setup can also run on different cloud providers in parallel. This is distinct from hybrid cloud services, where one component runs on-premises and other parts of your application run in the cloud.

What Does Being Cloud-Native Mean for a Database? [Webinar Sign-up]

Different cloud-native technologies and methodologies – including containers, Kubernetes, microservices, and service meshes – are all being used by organizations today, particularly enterprise developers and DevOps teams, 90% of which say cloud-native is somewhat or very important. As development and DevOps start to become "cloud-native," the database should too. But, what does being cloud-native mean for a database?

Learn how Couchbase maximizes cloud-native technologies' advantages by natively integrating using the Couchbase Autonomous Operator to deliver a geo-distributed database platform, providing a hybrid, multi-cloud database solution for organizations. Couchbase Autonomous Operator enables developers and DevOps teams to run Couchbase as a stateful database application next to their microservices applications on a Kubernetes platform, which frees organizations from cloud vendor lock-in and supports hybrid and multi-cloud strategies.

Why Is a Hybrid Cloud Approach the Best for Cloud?

A hybrid approach to cloud incorporates the benefits of public cloud services, enterprise-controlled private clouds, and the once-dominant dedicated hosting services. Hybrid approaches have been all the rage in the last couple of years, as enterprises can take advantage of each service while minimizing risk levels. With a hybrid cloud, an enterprise’s data and resources are split between the three forms of storage.

Security has been one of the biggest concerns for businesses that are contemplating a switch to hybrid cloud. After all, the path to public cloud computing can be quite scary for enterprises that are worried about potential threats in a public network spilling over to their own network. Concerns over the security of public clouds have led to the rise in popularity of hybrid cloud models.

Hybrid Cloud Implementation for Enterprises

As part of the digital transformation and technology modernization, many large enterprises are looking at embarking on a transformation roadmap to enable a hybrid cloud framework to address the following:

- Modernize legacy applications and coexist with cloud-native applications.

- Automate technology operations to enable agility and speed of delivery.

- Optimize TCO to address increased workloads due to business demand.

- Improve service quality.

This article focuses on key enablers that will be required for building a hybrid-cloud architecture and operating model to support the enterprise goals and transformation strategy, especially with the accelerated and increased adoption of digital services in a post COVID world.

Webinar – Hybrid cloud for financial services series features payments architecture (slides)

Previously, I shared that I would be presenting in an upcoming webinar on how to leverage hybrid cloud for deploying unified business applications in the banking domain.

As it's a two-part series, the first session was on November 17th, and the second was presented by me today.

Lessons Learned from the November AWS Outage

Context, Analysis, and Impact

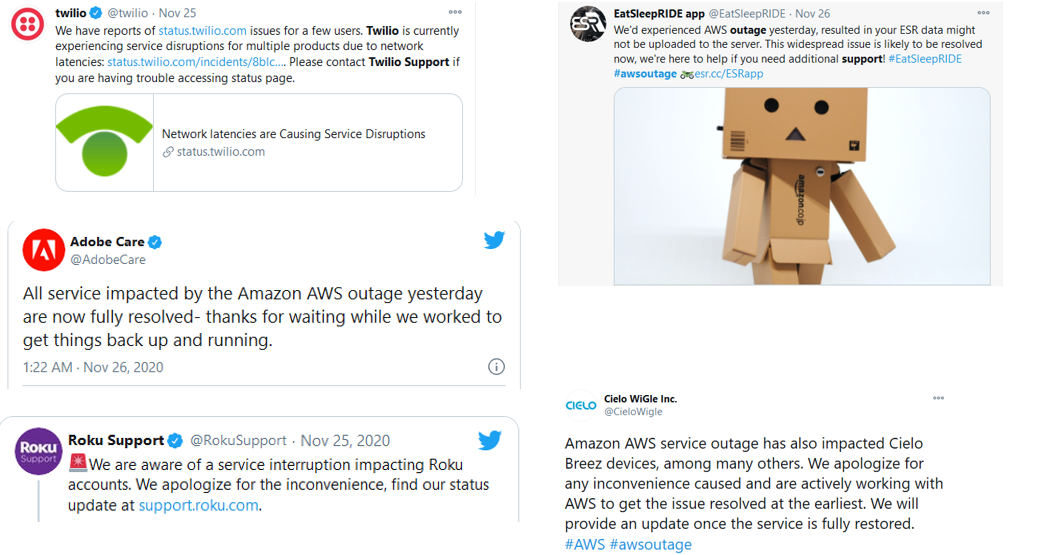

- Amazon’s internet infrastructure service experienced a multi-hour outage on Wednesday, November 25th, that affected a large portion of the internet.

- More than 50 companies were impacted, including Roku, Adobe, Flickr, Twilio, Tribune Publishing, and Amazon’s smart security division, Ring, in its region covering the eastern U.S.

- Business impacts, as reported by The Washington Post, included:

  - New account activation and the mobile app for streaming media service Roku were hampered.

  - Target-owned Shipt delivery service could receive and process some orders, though it stated that it was taking steps to manage capacity because of the outage.

  - Photo storage service Flickr tweeted that customers couldn’t log in or create an account because of the AWS outage.

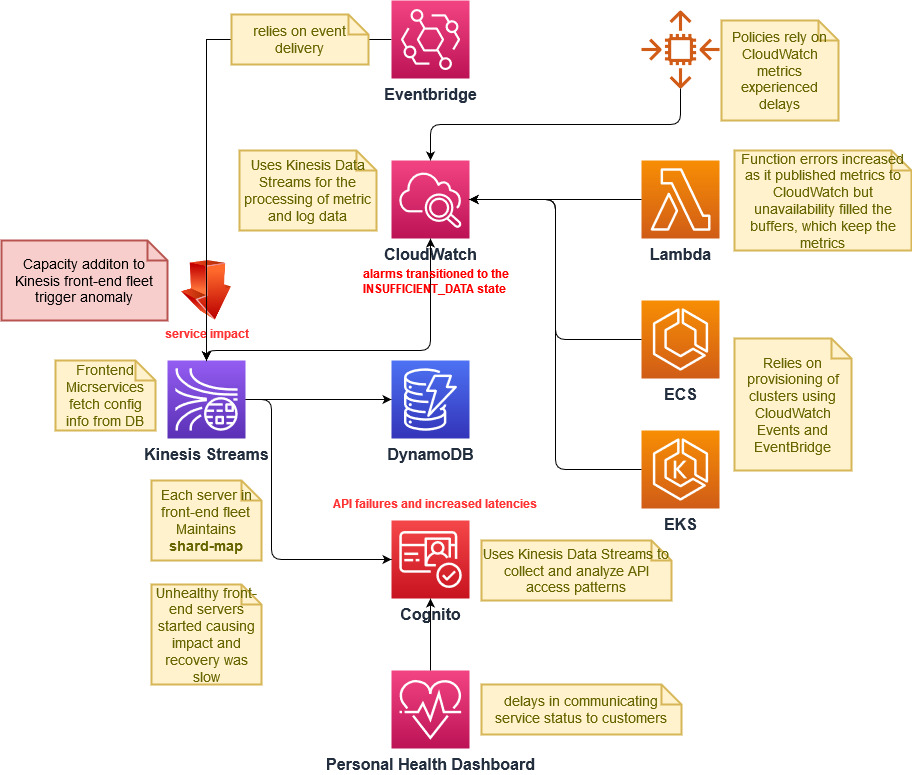

- Root Cause Analysis by AWS: The outage started with Amazon Kinesis and then spread to a long list of other services. You can read the RCA document by AWS, which is also summarized below:

![Flowchart of AWS impact spread.]()

Lessons Learned

#1: Don't Put All Your Eggs in One Basket

- Using a single Cloud Service Provider can be counter-productive in these scenarios.

- Think about and plan for a hybrid-cloud, private-cloud, or multi-cloud strategy, particularly during peak season.

#2: Hope for the Best and Plan for the Worst

- Don't just rely on a cloud provider's availability and multi-region fail-over strategy; build your own resiliency and disaster recovery approach.

- Practice disaster recovery in production or similar systems by using innovative approaches in active-active setup across the multi-cloud or hybrid-cloud scenarios.

#3: Monitoring and Observability Are Not Static

- Be innovative in exploring monitoring and observability patterns. For example, if AWS is reporting an outage on their status page, your monitoring system should get into action and inform the incident resolution team to start analyzing the impact.

- Keep the service dependency graph ready. Although tools mostly maintain it for you, keep it dynamic so that you can assess the impact when an outage happens and map it to business functionalities, allowing you to report accurately to your business team.
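As a rough illustration of the dependency-graph point above, here is a minimal sketch that walks a graph to find everything affected by one failed service and maps the result to business functions. The service names, the graph, and the business mapping are all hypothetical; in practice this data would come from your own tooling.

```python
from collections import deque

# Hypothetical graph: each service maps to the services it depends on.
DEPENDS_ON = {
    "checkout": ["payments", "event-stream"],
    "payments": ["database"],
    "login": ["auth"],
    "auth": ["event-stream"],
    "event-stream": [],
    "database": [],
}

# Hypothetical mapping from user-facing services to business functions.
BUSINESS_FUNCTION = {
    "checkout": "Order placement",
    "login": "Customer sign-in",
}


def impacted_services(failed, depends_on):
    """Return every service that directly or transitively depends on `failed`."""
    # Invert the graph so we can walk from a dependency to its dependents.
    dependents = {}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(service)

    impacted = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for service in dependents.get(current, ()):
            if service not in impacted:
                impacted.add(service)
                queue.append(service)
    return impacted


def impacted_functions(failed, depends_on, business_function):
    """Map the impacted services to a sorted list of business functions."""
    services = impacted_services(failed, depends_on)
    return sorted(business_function[s] for s in services if s in business_function)


if __name__ == "__main__":
    print(impacted_functions("event-stream", DEPENDS_ON, BUSINESS_FUNCTION))
```

Keeping the graph as plain data makes it easy to refresh from whatever discovery tool you use, while the traversal itself stays unchanged.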

#4: Invest in Emerging Techniques, like Chaos Engineering

- This failure indicates that even internet giants like AWS are still maturing in implementing practices like chaos engineering. So, start putting chaos engineering practices into the roadmap.

- For example, if a bulkhead pattern could have been utilized in the AWS outage scenario, the outage would have been limited to Kinesis services only.
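For readers unfamiliar with the bulkhead pattern mentioned above, here is a minimal sketch of the idea: each dependency gets its own bounded pool of concurrent calls, so a saturated dependency rejects new work instead of exhausting resources shared with others. The `Bulkhead` class and `BulkheadFullError` are illustrative names, not part of any library, and this is not a description of how AWS services are built.

```python
import threading


class BulkheadFullError(RuntimeError):
    """Raised when a bulkhead has no free slots (hypothetical error type)."""


class Bulkhead:
    """Limit concurrent calls to one dependency so it cannot starve others."""

    def __init__(self, name, max_concurrent):
        self.name = name
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def call(self, func, *args, **kwargs):
        # Fail fast instead of queueing when the compartment is full.
        if not self._slots.acquire(blocking=False):
            raise BulkheadFullError(f"bulkhead '{self.name}' is full")
        try:
            return func(*args, **kwargs)
        finally:
            self._slots.release()


if __name__ == "__main__":
    # Separate compartments: a saturated stream client leaves auth untouched.
    stream = Bulkhead("event-stream", max_concurrent=1)
    auth = Bulkhead("auth", max_concurrent=1)

    def while_stream_is_busy():
        try:
            stream.call(lambda: "stream ok")
        except BulkheadFullError as err:
            print(err)
        return auth.call(lambda: "auth ok")

    print(stream.call(while_stream_is_busy))
```

Rejecting immediately rather than waiting is one design choice among several; a real system might add a short timeout or a fallback response instead.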

To conclude, being proactive when outages occur, having a response team equipped for unplanned outages, and improving continuously from lessons learned along the way are essential techniques to help keep the impact limited. Also, having a multi-cloud or hybrid-cloud strategy is food for thought to keep the business running.