Before I career jumped into development, I did a bunch of motion graphics work in After Effects. But even with that background, I still found animating on the web pretty baffling.

Video graphics are designed within a specific ratio and then exported out. Done! But there aren’t any “export settings” on the web. We just push the code out into the world and our animations have to adapt to whatever device they land on.

So let’s talk responsive animation! How do we best approach animating on the wild wild web? We’re going to cover some general approaches, some GSAP-specific tips and some motion principles. Let’s start off with some framing…

How will this animation be used?

Zach Saucier’s article on responsive animation recommends taking a step back to think about the final result before jumping into code.

Will the animation be a module that is repeated across multiple parts of your application? Does it need to scale at all? Keeping this in mind can help determine the method in which an animation should be scaled and keep you from wasting effort.

This is great advice. A huge part of designing responsive animation is knowing if and how that animation needs to scale, and then choosing the right approach from the start.

Most animations fall into the following categories:

- Fixed: Animations for things like icons or loaders that retain the same size and aspect ratio across all devices. Nothing to worry about here! Hard-code some pixel values in there and get on with your day.

- Fluid: Animations that need to adapt fluidly across different devices. Most layout animations fall into this category.

- Targeted: Animations that are specific to a certain device or screen size, or change substantially at a certain breakpoint, such as desktop-only animations or interactions that rely on device-specific interaction, like touch or hover.

Fluid and targeted animations call for different ways of thinking and different solutions. Let’s take a look…

Fluid animation

As Andy Bell says: Be the browser’s mentor, not its micromanager — give the browser some solid rules and hints, then let it make the right decisions for the people that visit it.

(Here are the slides from that presentation.)

Fluid animation is all about letting the browser do the hard work. A lot of animations can easily adjust to different contexts just by using the right units from the start. If you resize this pen you can see that the animation using viewport units scales fluidly as the browser adjusts:

The purple box even changes width at different breakpoints, but as we’re using percentages to move it, the animation scales along with it too.

Animating layout properties like left and top can cause layout reflows and jittery ‘janky’ animation, so where possible stick to transforms and opacity.

We’re not just limited to these units though — let’s take a look at some other possibilities.

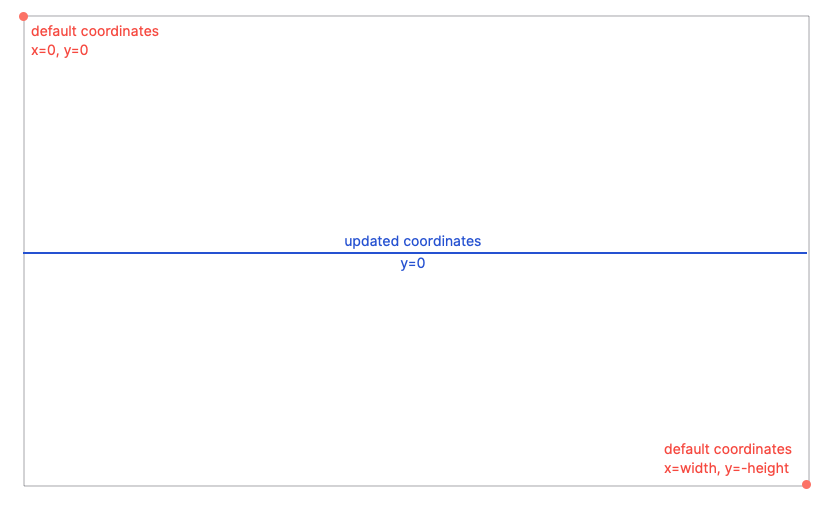

SVG units

One of the things I love about working with SVG is that we can use SVG user units for animation which are responsive out of the box. The clue’s in the name really — Scalable Vector Graphic. In SVG-land, all elements are plotted at specific coordinates. SVG space is like an infinite bit of graph paper where we can arrange elements. The viewBox defines the dimensions of the graph paper we can see.

viewBox="0 0 100 50"

In this next demo, our SVG viewBox is 100 units wide and 50 units tall. This means if we animate the element by 100 units along the x-axis, it will always move by the entire width of its parent SVG, no matter how big or small that SVG is! Give the demo a resize to see.
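To make that concrete, here’s a tiny sketch of the maths the browser is doing for us. `userUnitsToPixels` is a made-up helper rather than an SVG API, and it assumes the SVG element has the same aspect ratio as its viewBox, so the scale is the same on both axes.

```javascript
// Hypothetical helper: converts a distance in SVG user units to on-screen
// pixels, assuming the SVG element shares its viewBox's aspect ratio.
function userUnitsToPixels(units, viewBoxWidth, renderedWidth) {
  return units * (renderedWidth / viewBoxWidth);
}

// With viewBox="0 0 100 50", moving 100 units always spans the full width.
console.log(userUnitsToPixels(100, 100, 300));  // 300
console.log(userUnitsToPixels(100, 100, 1200)); // 1200
```

We never have to run this ourselves. Animate by 100 user units and the browser handles the conversion at whatever size the SVG ends up.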

Animating a child element based on a parent container’s width is a little trickier in HTML-land. Up until now, we’ve had to grab the parent’s width with JavaScript, which is easy enough when you’re animating from a transformed position, but a little fiddlier when you’re animating to somewhere, as you can see in the following demo. If your end-point is a transformed position and you resize the screen, you’ll have to manually adjust that position. Messy… 🤔

If you do adjust values on resize, remember to debounce, or even fire the function after the browser is finished resizing. Resize listeners fire a ton of events every second, so updating properties on each event is a lot of work for the browser.
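Here’s a minimal debounce sketch to show the idea. The 200ms wait is an arbitrary choice, and `updateEndPosition` is a placeholder for whatever recalculates your animation’s end point from the parent’s width.

```javascript
// Minimal debounce: runs `fn` once, `wait` ms after the most recent call.
function debounce(fn, wait = 200) {
  let timeoutId;
  return function debounced(...args) {
    clearTimeout(timeoutId); // cancel the pending run, if there is one
    timeoutId = setTimeout(() => fn.apply(this, args), wait);
  };
}

// Placeholder: recalculate and re-apply the animation's end position here.
function updateEndPosition() {}

// Only attach the listener where there is a window to listen to.
if (typeof window !== "undefined") {
  window.addEventListener("resize", debounce(updateEndPosition, 200));
}
```

However many resize events fire while the user drags the window, the update only runs once things have been quiet for the wait period.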

But, this animation speed-bump is soon going to be a thing of the past! Drum roll please… 🥁

Container Units! Lovely stuff. At the time I’m writing this, they only work in Chrome and Safari — but maybe by the time you read this, we’ll have Firefox too. Check them out in action in this next demo. Look at those little lads go! Isn’t that exciting, animation that’s relative to the parent elements!

This browser support data is from Caniuse, which has more detail. A number indicates that browser supports the feature at that version and up.

Desktop

| Chrome | Firefox | IE | Edge | Safari |
|---|---|---|---|---|
| 105 | No | No | 105 | 16.0 |

Mobile / Tablet

| Android Chrome | Android Firefox | Android | iOS Safari |
|---|---|---|---|
| 106 | No | 106 | 16.0 |

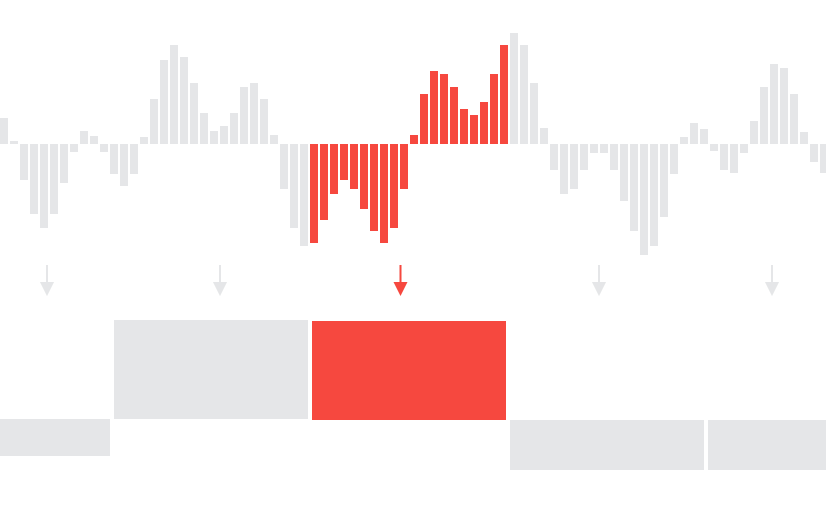

Fluid layout transitions with FLIP

As we mentioned earlier, in SVG-land every element is neatly placed on one grid and really easy to move around responsively. Over in HTML-land it’s much more complex. In order to build responsive layouts, we make use of a bunch of different positioning methods and layout systems. One of the main difficulties of animating on the web is that a lot of changes to layout are impossible to animate. Maybe an element needs to move from position relative to fixed, or some children of a flex container need to be smoothly shuffled around the viewport. Maybe an element even needs to be re-parented and moved to an entirely new position in the DOM.

Tricky, huh?

Well. The FLIP technique is here to save the day; it allows us to easily animate these impossible things. The basic premise is:

- First: Grab the initial position of the elements involved in the transition.

- Last: Move the elements and grab the final position.

- Invert: Work out the changes between the first and last state and apply transforms to invert the elements back to their original position. This makes it look like the elements are still in the first position but they’re actually not.

- Play: Remove the inverted transforms and animate the elements from their faked first state to the last state.

Here’s a demo using GSAP’s FLIP plugin which does all the heavy lifting for you!

If you want to understand a little more about the vanilla implementation, head over to Paul Lewis’s blog post — he’s the brain behind the FLIP technique.
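For a rough feel of the Invert step, here’s a small sketch of the arithmetic. It isn’t the GSAP plugin’s implementation. `getInvertTransform` is a hypothetical helper, the rectangles stand in for what `getBoundingClientRect()` would return, and it assumes the element’s transform-origin is its top-left corner.

```javascript
// `first` and `last` are position snapshots taken before and after the
// layout change. Returns the transform that makes an element sitting in its
// LAST position look as if it never left its FIRST position.
function getInvertTransform(first, last) {
  return {
    x: first.left - last.left,
    y: first.top - last.top,
    scaleX: first.width / last.width,
    scaleY: first.height / last.height,
  };
}

const first = { left: 20, top: 40, width: 100, height: 100 };
const last = { left: 220, top: 140, width: 200, height: 50 };

console.log(getInvertTransform(first, last));
// { x: -200, y: -100, scaleX: 0.5, scaleY: 2 }
```

The Play step is then just animating from that transform back to no transform at all.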

Fluidly scaling SVG

You got me… this isn’t really an animation tip. But setting the stage correctly is imperative for good animation! SVG scales super nicely by default, but we can control how it scales even further with preserveAspectRatio, which is mega handy when the SVG element’s aspect ratio and the viewBox aspect ratio are different. It works much in the same way as the background-position and background-size properties in CSS. The declaration is made up of an alignment value (background-position) and a Meet or Slice reference (background-size).

As for those Meet and Slice references: slice is like background-size: cover, and meet is like background-size: contain.

- preserveAspectRatio="xMidYMax slice": Align to the middle of the x-axis, the bottom of the y-axis, and scale up to cover the entire viewport.

- preserveAspectRatio="xMinYMin meet": Align to the left of the x-axis, the top of the y-axis, and scale up while keeping the entire viewBox visible.
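If the cover and contain comparison feels a bit abstract, this sketch shows the scale factor each keyword ends up with. `viewBoxScale` is a made-up helper for illustration, and the sizes are arbitrary.

```javascript
// Scale factor applied to the viewBox for each keyword:
// "meet" uses the smaller ratio so everything fits (like contain),
// "slice" uses the larger ratio so the viewport is filled (like cover).
function viewBoxScale(viewBox, viewport, mode = "meet") {
  const scaleX = viewport.width / viewBox.width;
  const scaleY = viewport.height / viewBox.height;
  return mode === "slice" ? Math.max(scaleX, scaleY) : Math.min(scaleX, scaleY);
}

const viewBox = { width: 100, height: 50 };   // viewBox="0 0 100 50"
const viewport = { width: 400, height: 400 }; // a square SVG element

console.log(viewBoxScale(viewBox, viewport, "meet"));  // 4
console.log(viewBoxScale(viewBox, viewport, "slice")); // 8
```

With meet, the 100 by 50 viewBox renders at 400 by 200 and leaves spare room vertically. With slice, it renders at 800 by 400 and gets cropped horizontally. The alignment value decides where that spare room or cropping goes.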

Tom Miller takes this a step further by using overflow: visible in CSS and a containing element to reveal “stage left” and “stage right” while keeping the height restricted:

For responsive SVG animations, it can be handy to make use of the SVG viewBox to create a view that crops and scales beneath a certain browser width, while also revealing more of the SVG animation to the right and left when the browser is wider than that threshold. We can achieve this by adding overflow visible on the SVG and teaming it up with a max-height wrapper to prevent the SVG from scaling too much vertically.

Fluidly scaling canvas

Canvas is much more performant for complex animations with lots of moving parts than animating SVG or HTML DOM, but it’s inherently more complex too. You have to work for those performance gains! Unlike SVG, which has lovely responsive units and scaling out of the box, <canvas> has to be bossed around and micromanaged a bit.

I like setting up my <canvas> so that it works much in the same way as SVG (I may be biased) with a lovely unit system to work within and a fixed aspect ratio. <canvas> also needs to be redrawn every time something changes, so remember to delay the redraw until the browser is finished resizing, or debounce!
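Here’s one way that set-up could look, as a sketch rather than the definitive approach. The 100 by 50 stage size and both helper names are assumptions for illustration. It also assumes the canvas’s on-screen size is handled in CSS, and that you pass in `window.devicePixelRatio` if you want crisp output on high-density screens.

```javascript
// Hypothetical stage size in "user units", much like an SVG viewBox.
const STAGE = { width: 100, height: 50 };

// Works out the backing-store size and drawing scale for a given CSS width.
function getCanvasMetrics(cssWidth, pixelRatio = 1) {
  const cssHeight = cssWidth * (STAGE.height / STAGE.width);
  return {
    width: Math.round(cssWidth * pixelRatio),
    height: Math.round(cssHeight * pixelRatio),
    scale: (cssWidth * pixelRatio) / STAGE.width,
  };
}

// Applies the metrics so that draw calls can use stage units.
function resizeCanvas(canvas, cssWidth, pixelRatio = 1) {
  const { width, height, scale } = getCanvasMetrics(cssWidth, pixelRatio);
  canvas.width = width; // setting width or height also clears the canvas
  canvas.height = height;
  const ctx = canvas.getContext("2d");
  ctx.setTransform(scale, 0, 0, scale, 0, 0);
  return ctx;
}
```

After a call to `resizeCanvas`, something like `ctx.fillRect(0, 0, 100, 50)` fills the whole canvas whatever its size, so the drawing code can think in stage units. Because resizing clears the canvas, a redraw has to follow.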

George Francis also put together this lovely little library which allows you to define a Canvas viewBox attribute and preserveAspectRatio — exactly like SVG!

Targeted animation

You may sometimes need to take a less fluid and more directed approach to your animation. Mobile devices have a lot less real estate, and less animation-juice performance-wise than a desktop machine. So it makes sense to serve reduced animation to mobile users, potentially even no animation:

Sometimes the best responsive animation for mobile is no animation at all! For mobile UX, prioritize letting the user quickly consume content versus waiting for animations to finish. Mobile animations should enhance content, navigation, and interactions rather than delay it. (Eric van Holtz)

In order to do this, we can make use of media queries to target specific viewport sizes just like we do when we’re styling with CSS! Here’s a simple demo showing a CSS animation being handled using media queries and a GSAP animation being handled with gsap.matchMedia():

The simplicity of this demo is hiding a bunch of magic! JavaScript animations require a bit more setup and clean-up in order to correctly work at only one specific screen size. I’ve seen horrors in the past where people have just hidden the animation from view in CSS with opacity: 0, but the animation’s still chugging away in the background using up resources. 😱

If the screen size doesn’t match anymore, the animation needs to be killed and released for garbage collection, and the elements affected by the animation need to be cleared of any motion-introduced inline styles in order to prevent conflicts with other styling. Up until gsap.matchMedia(), this was a fiddly process. We had to keep track of each animation and manage all this manually.

gsap.matchMedia() instead lets you easily tuck your animation code into a function that only executes when a particular media query matches. Then, when it no longer matches, all the GSAP animations and ScrollTriggers in that function get reverted automatically. The media query that the animations are popped into does all the hard work for you. It’s in GSAP 3.11.0 and it’s a game changer!
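A minimal sketch of that pattern looks something like this. The `.box` selector, the 800px breakpoint and the wrapper function name are placeholders. `gsap` is passed in as an argument so the snippet doesn’t assume how GSAP is loaded on your page.

```javascript
// `gsap` is passed in rather than imported, so this works however GSAP is
// loaded. ".box" and the 800px breakpoint are placeholders.
function setupDesktopAnimation(gsap) {
  const mm = gsap.matchMedia();

  mm.add("(min-width: 800px)", () => {
    // Only created while the media query matches...
    gsap.to(".box", { rotation: 360, repeat: -1, duration: 2, ease: "none" });

    // ...and this optional returned function runs when it stops matching.
    return () => {
      // custom clean-up (event listeners and so on) goes here
    };
  });

  return mm; // call mm.revert() to tear everything down manually
}
```

Anything GSAP-related created inside the function is collected and reverted automatically, so the returned clean-up function is only needed for your own extras.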

We aren’t just constrained to screen sizes either. There are a ton of media features out there to hook into!

(prefers-reduced-motion) /* find out if the user would prefer less animation */
(orientation: portrait) /* check the user's device orientation */
(max-resolution: 300dpi) /* check the pixel density of the device */

In the following demo we’ve added a check for prefers-reduced-motion so that any users who find animation disorienting won’t be bothered by things whizzing around.
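One way to wire that up is gsap.matchMedia()’s conditions syntax, where you pass an object of named queries and read the results from `context.conditions`. This is a sketch with placeholder names, breakpoints and values, not the code from the demo.

```javascript
// `gsap` is passed in; ".box", the breakpoints and the values are placeholders.
function setupMotionPreferences(gsap) {
  const mm = gsap.matchMedia();

  mm.add(
    {
      isDesktop: "(min-width: 800px)",
      isMobile: "(max-width: 799px)",
      reduceMotion: "(prefers-reduced-motion: reduce)",
    },
    (context) => {
      // Each named query comes back as a boolean.
      const { isDesktop, reduceMotion } = context.conditions;

      gsap.to(".box", {
        rotation: isDesktop ? 360 : 180,
        // A zero duration jumps straight to the end state.
        duration: reduceMotion ? 0 : 2,
      });
    }
  );

  return mm;
}
```

The function re-runs whenever any of the conditions changes, so the animation gets rebuilt with the right settings if the user flips their motion preference or resizes across the breakpoint.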

And check out Tom Miller’s other fun demo where he’s using the device’s aspect ratio to adjust the animation:

Thinking outside of the box, beyond screen sizes

There’s more to thinking about responsive animation than just screen sizes. Different devices allow for different interactions, and it’s easy to get in a bit of a tangle when you don’t consider that. If you’re creating hover states in CSS, you can use the hover media feature to test whether the user’s primary input mechanism can hover over elements.

@media (hover: hover) {
  /* CSS hover state here */
}

Some advice from Jake Whiteley:

A lot of the time we base our animations on browser width, making the naive assumption that desktop users want hover states. I’ve personally had a lot of issues in the past where I would switch to desktop layout >1024px, but might do touch detection in JS – leading to a mismatch where the layout was for desktops, but the JS was for mobiles. These days I lean on hover and pointer to ensure parity and handle ipad Pros or windows surfaces (which can change the pointer type depending on whether the cover is down or not)

/* any touch device: */
(hover: none) and (pointer: coarse)

/* iPad Pro */
(hover: none) and (pointer: coarse) and (min-width: 1024px)

I’ll then marry up my CSS layout queries and my JavaScript queries so I’m considering the input device as the primary factor supported by width, rather than the opposite.
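On the JavaScript side, that could look something like the sketch below, which feeds the same query strings to `window.matchMedia()`. `INPUT_QUERIES` and `getInputType` are made-up names, and the "mouse" query is my assumption about what the desktop counterpart would be.

```javascript
// The same queries the CSS uses, so layout and behaviour can't drift apart.
const INPUT_QUERIES = {
  touch: "(hover: none) and (pointer: coarse)",
  mouse: "(hover: hover) and (pointer: fine)", // assumed desktop counterpart
};

// `matchMedia` defaults to the browser's; pass a stub to run this elsewhere.
function getInputType(matchMedia = (query) => window.matchMedia(query)) {
  if (matchMedia(INPUT_QUERIES.touch).matches) return "touch";
  if (matchMedia(INPUT_QUERIES.mouse).matches) return "mouse";
  return "unknown";
}
```

Branching on `getInputType()` instead of on width alone keeps the hover-dependent JavaScript in step with the hover-dependent CSS.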

ScrollTrigger tips

If you’re using GSAP’s ScrollTrigger plugin, there’s a handy little utility you can hook into to easily discern the touch capabilities of the device: ScrollTrigger.isTouch.

- 0: no touch (pointer/mouse only)

- 1: touch-only device (like a phone)

- 2: device can accept touch input and mouse/pointer (like Windows tablets)

if (ScrollTrigger.isTouch) {
  // any touch-capable device...
}

// or get more specific:
if (ScrollTrigger.isTouch === 1) {
  // touch-only device
}

Another tip for responsive scroll-triggered animation…

The following demo moves an image gallery horizontally, but the width changes depending on screen size. If you resize the screen when you’re halfway through a scrubbed animation, you can end up with broken animations and stale values. This is a common speedbump, but one that’s easily solved! Pop the calculation that’s dependent on screen size into a functional value and set invalidateOnRefresh:true. That way, ScrollTrigger will re-calculate that value for you when the browser resizes.
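Here’s a sketch of what that can look like. It isn’t the demo’s exact code. `createGalleryScroll` is a made-up wrapper, `gallery` is whichever element you’re moving, and it assumes ScrollTrigger has already been registered with the `gsap` instance you pass in.

```javascript
// `gsap` (with ScrollTrigger registered) and the `gallery` element are
// passed in, so nothing here depends on how the page is set up.
function createGalleryScroll(gsap, gallery) {
  // Measured every time it's called, so the value never goes stale.
  const getScrollDistance = () => gallery.scrollWidth - window.innerWidth;

  return gsap.to(gallery, {
    x: () => -getScrollDistance(), // functional value instead of a fixed number
    ease: "none",
    scrollTrigger: {
      trigger: gallery,
      pin: true,
      scrub: true,
      end: () => "+=" + getScrollDistance(),
      invalidateOnRefresh: true, // re-run the functional values on refresh
    },
  });
}
```

Without `invalidateOnRefresh: true`, the tween keeps the start and end values it recorded the first time it rendered, which is where the stale values come from.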

Bonus GSAP nerd tip!

On mobile devices, the browser address bar usually shows and hides as you scroll. This counts as a resize event and will fire off a ScrollTrigger.refresh(). This might not be ideal as it can cause jumps in your animation. GSAP 3.10 added ignoreMobileResize. It doesn’t affect how the browser bar behaves, but it prevents ScrollTrigger.refresh() from firing for small vertical resizes on touch-only devices.

ScrollTrigger.config({
  ignoreMobileResize: true
});

Motion principles

I thought I’d leave you with some best practices to consider when working with motion on the web.

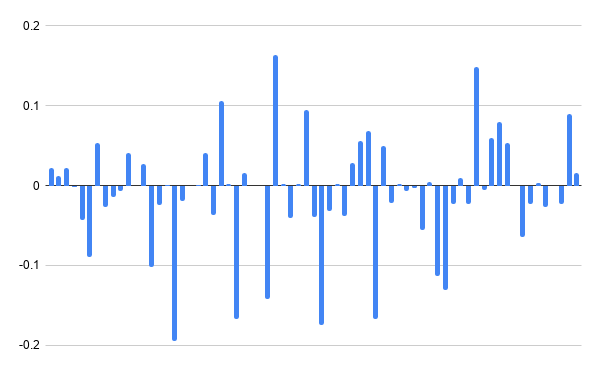

Distance and easing

A small but important thing that’s easy to forget with responsive animation is the relationship between speed, momentum, and distance! Good animation should mimic the real world to feel believable, and it takes longer in the real world to cover a larger distance. Pay attention to the distance your animation is traveling, and make sure that the duration and easing used make sense in context with other animations.

You can also often apply more dramatic easing to elements with further to travel to show the increased momentum:

For certain use cases it may be helpful to adjust the duration more dynamically based on screen width. In this next demo we’re making use of gsap.utils to clamp the value we get back from the current window.innerWidth into a reasonable range, then we’re mapping that number to a duration.
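The idea boils down to something like this sketch. `clamp` and `mapRange` here are plain-JavaScript stand-ins that take their arguments in the same order as gsap.utils.clamp() and gsap.utils.mapRange(), so the snippet runs without GSAP. The width and duration ranges are made-up numbers.

```javascript
// Plain-JS stand-ins for gsap.utils.clamp() and gsap.utils.mapRange().
const clamp = (min, max, value) => Math.min(max, Math.max(min, value));
const mapRange = (inMin, inMax, outMin, outMax, value) =>
  outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);

// Hypothetical ranges: widths from 320px to 1920px map to 0.5s to 1.5s.
function durationForWidth(width) {
  const clamped = clamp(320, 1920, width);
  return mapRange(320, 1920, 0.5, 1.5, clamped);
}

console.log(durationForWidth(320));  // 0.5
console.log(durationForWidth(1120)); // 1
console.log(durationForWidth(4000)); // 1.5
```

In the browser you’d pass in `window.innerWidth` and hand the result to your tween’s duration. The clamp stops ultra-wide screens from getting painfully slow animations.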

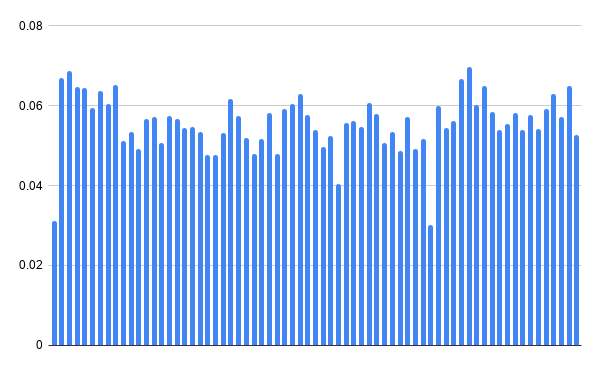

Spacing and quantity

Another thing to keep in mind is the spacing and quantity of elements at different screen sizes. Quoting Steven Shaw:

If you have some kind of environmental animation (parallax, clouds, trees, confetti, decorations, etc) that are spaced around the window, make sure that they scale and/or adjust the quantity depending on screen size. Large screens probably need more elements spread throughout, while small screens only need a few for the same effect.
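As a rough sketch of that idea, you could base the element count on the viewport’s area. Everything here is an assumption to tune by eye: the helper name, the density of one element per 40,000 square pixels, and the minimum and maximum counts.

```javascript
// Hypothetical density: roughly one decorative element per 40,000 square
// pixels, clamped so small screens still get a few and huge screens
// don't get flooded.
function elementCountFor(width, height, options = {}) {
  const { areaPerElement = 40000, min = 5, max = 60 } = options;
  const count = Math.round((width * height) / areaPerElement);
  return Math.min(max, Math.max(min, count));
}

console.log(elementCountFor(375, 667));   // 6
console.log(elementCountFor(1920, 1080)); // 52
```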

I love how Opher Vishnia thinks about animation as a stage. Adding and removing elements doesn’t just have to be a formality, it can be part of the overall choreography.

When designing responsive animations, the challenge is not how to cram the same content into the viewport so that it “fits”, but rather how to curate the set of existing content so it communicates the same intention. That means making a conscious choice of which pieces of content to add, and which to remove. Usually in the world of animation things don’t just pop in or out of the frame. It makes sense to think of elements as entering or exiting the “stage”, animating that transition in a way that makes visual and thematic sense.

And that’s the lot. If you have any more responsive animation tips, pop them in the comment section. If there’s anything super helpful, I’ll add them to this compendium of information!

Addendum

One more note from Tom Miller as I was prepping this article:

I’m probably too late with this tip for your responsive animations article, but I highly recommend “finalize all the animations before building”. I’m currently retrofitting some site animations with “mobile versions”. Thank goodness for gsap.matchMedia… but I sure wish we’d known there’d be separate mobile layouts/animations from the beginning.

I think we all appreciate that this tip to “plan ahead” came at the absolute last minute. Thanks, Tom, and best of luck with those retrofits.

Responsive Animations for Every Screen Size and Device originally published on CSS-Tricks, which is part of the DigitalOcean family.