Azure Synapse Analytics is a new product in the Microsoft Azure portfolio. It adds a whole new control-plane layer over well-known services such as Azure SQL Data Warehouse (rebranded as SQL Provisioned Pool), integrated Data Factory pipelines, and Azure Data Lake Storage, and it introduces new components such as Serverless SQL and Spark Pools. The integrated Azure Synapse Workspace lets you handle data security and protection in one place for all data lake, data analytics, and warehousing needs, but it also requires learning some new concepts. At GFT, working with financial institutions all over the world, we pay particular attention to the security aspects of the solutions we provide to our customers, and Synapse Analytics is a welcome new tool in this area.

The New Workspace Portal

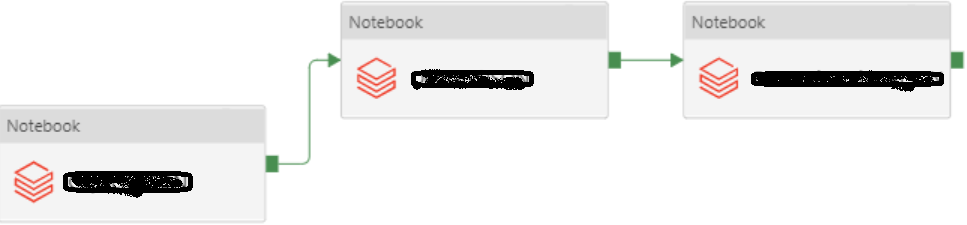

The first visible difference compared to other services is that Synapse Analytics has a separate Workspace portal, https://web.azuresynapse.net/, which provides access to code, notebooks, SQL, pipelines, monitoring, and management panels. The portal is available on the public Internet, and Azure AD access controls govern access to any Synapse Analytics instance in any tenant we have access to. However, Synapse Analytics also introduces a new way to connect to the portal from Internet-isolated on-premises networks and offices: Private Link Hubs. Whereas Private Links protect access to services and databases, a Private Link Hub routes traffic to the web portal itself. Combined with Azure AD Conditional Access policies, this means the new Synapse Analytics Workspace can be protected by both network and authentication policies.
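A practical way to confirm that portal traffic from an office or on-premises network really goes through a Private Link Hub is to check what the portal hostname resolves to from that network. The sketch below is a minimal illustration, not an official Azure tool. It assumes that a Private Link Hub private endpoint with matching private DNS makes `web.azuresynapse.net` resolve to an address inside your virtual network, while without it the name resolves to a public Azure address. The helper names `resolve_ipv4` and `routes_privately` are hypothetical and use only the Python standard library.

```python
import ipaddress
import socket

# Hostname of the Synapse Analytics web portal, as given in the article.
PORTAL_HOST = "web.azuresynapse.net"


def resolve_ipv4(host: str) -> list[str]:
    """Return the distinct IPv4 addresses the local resolver gives for host.

    Hypothetical helper: it simply asks the OS resolver, so the result
    reflects the DNS configuration of the machine it runs on.
    """
    infos = socket.getaddrinfo(host, 443, family=socket.AF_INET, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def routes_privately(addresses: list[str]) -> bool:
    """Return True only if every resolved address is in a private range.

    Assumption: with a Private Link Hub private endpoint and private DNS in
    place, the portal hostname resolves to a private (e.g. 10.x.x.x) address.
    An empty result is treated as "not private".
    """
    if not addresses:
        return False
    return all(ipaddress.ip_address(addr).is_private for addr in addresses)
```

Run from a machine on the isolated network, `routes_privately(resolve_ipv4(PORTAL_HOST))` should return `True` if the private route is in effect. A `False` result suggests the name still resolves to a public address, so portal traffic would leave through the Internet instead. This DNS check complements, rather than replaces, Azure AD Conditional Access.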