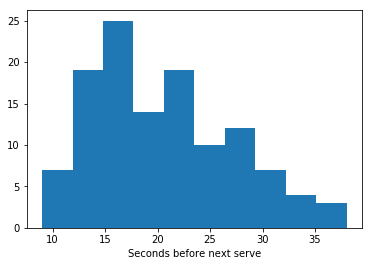

Let's say that we want to study the time between the end of a point and the next serve in a tennis match. After gathering our data, the first thing we can do is draw a histogram of the variable we are interested in:

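```python
import pandas as pd
import matplotlib.pyplot as plt

# Serve-time data published by FiveThirtyEight
url = 'https://raw.githubusercontent.com/fivethirtyeight'
url += '/data/master/tennis-time/serve_times.csv'
event = pd.read_csv(url)

# Histogram of the seconds elapsed before the next serve, split into 10 bins
plt.hist(event.seconds_before_next_point, bins=10)
plt.xlabel('Seconds before next serve')
plt.ylabel('Count')
plt.show()
```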
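To see what `bins=10` actually does, here is a minimal sketch that counts values into ten equal-width bins by hand and checks the result against `numpy.histogram`, which `plt.hist` uses to compute its bar heights. The helper `equal_width_counts` and the `sample` values are hypothetical and made up for illustration; they are not taken from the tennis dataset.

```python
import numpy as np

def equal_width_counts(values, n_bins=10):
    """Hypothetical helper: count values into n_bins equal-width bins over [min, max]."""
    values = np.asarray(values, dtype=float)
    # n_bins + 1 evenly spaced edges from the smallest to the largest value
    edges = np.linspace(values.min(), values.max(), n_bins + 1)
    # Bin i holds values with edges[i] <= v < edges[i + 1] ...
    idx = np.searchsorted(edges, values, side='right') - 1
    # ... except the last bin, which is closed so the maximum value lands in it
    idx = np.clip(idx, 0, n_bins - 1)
    counts = np.bincount(idx, minlength=n_bins)
    return counts, edges

# Made-up "seconds before next serve" values, for illustration only
sample = [10, 12, 15, 18, 20, 22, 25, 30, 35, 60]
counts, edges = equal_width_counts(sample, n_bins=10)
print(counts.tolist())  # [2, 2, 2, 1, 1, 1, 0, 0, 0, 1]
```

With a minimum of 10 and a maximum of 60, each bin is 5 seconds wide, and the single long wait of 60 seconds ends up alone in the last bin, separated from the others by three empty bins. A long right tail like this is exactly the kind of shape a histogram makes easy to spot.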