Last time we met, I introduced you to the View Transitions API. We started with a simple default crossfade transition and applied it to different use cases involving elements on a page transitioning between two states. One of those examples took the basic idea of adding products to a shopping cart on an e-commerce site and creating a visual transition that indicates an item added to the cart.

The View Transitions API is still considered an experimental feature and, at the time I’m writing this, is supported only in Chrome, so I’m providing that demo below along with a video in case your browser doesn’t support the API.

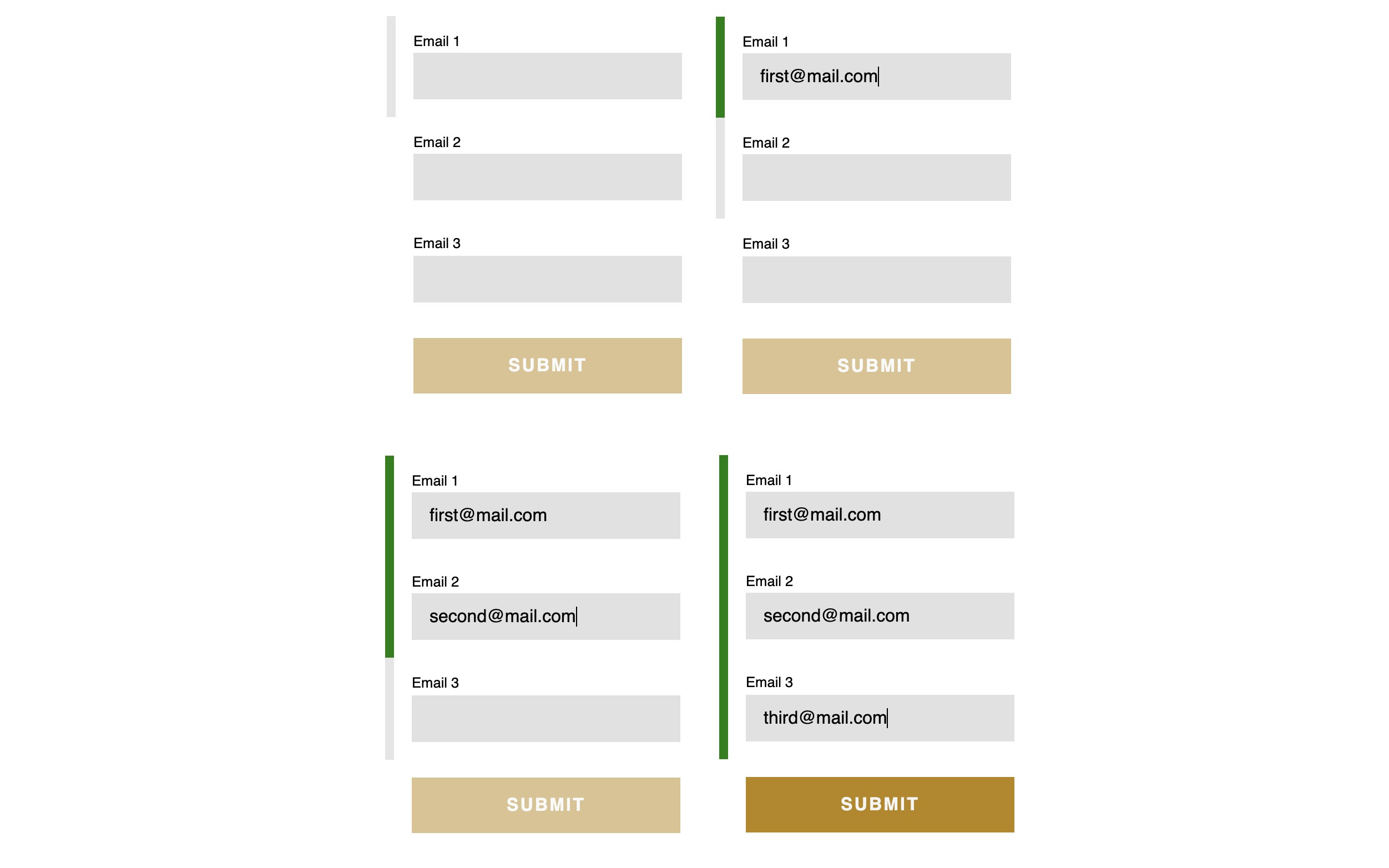

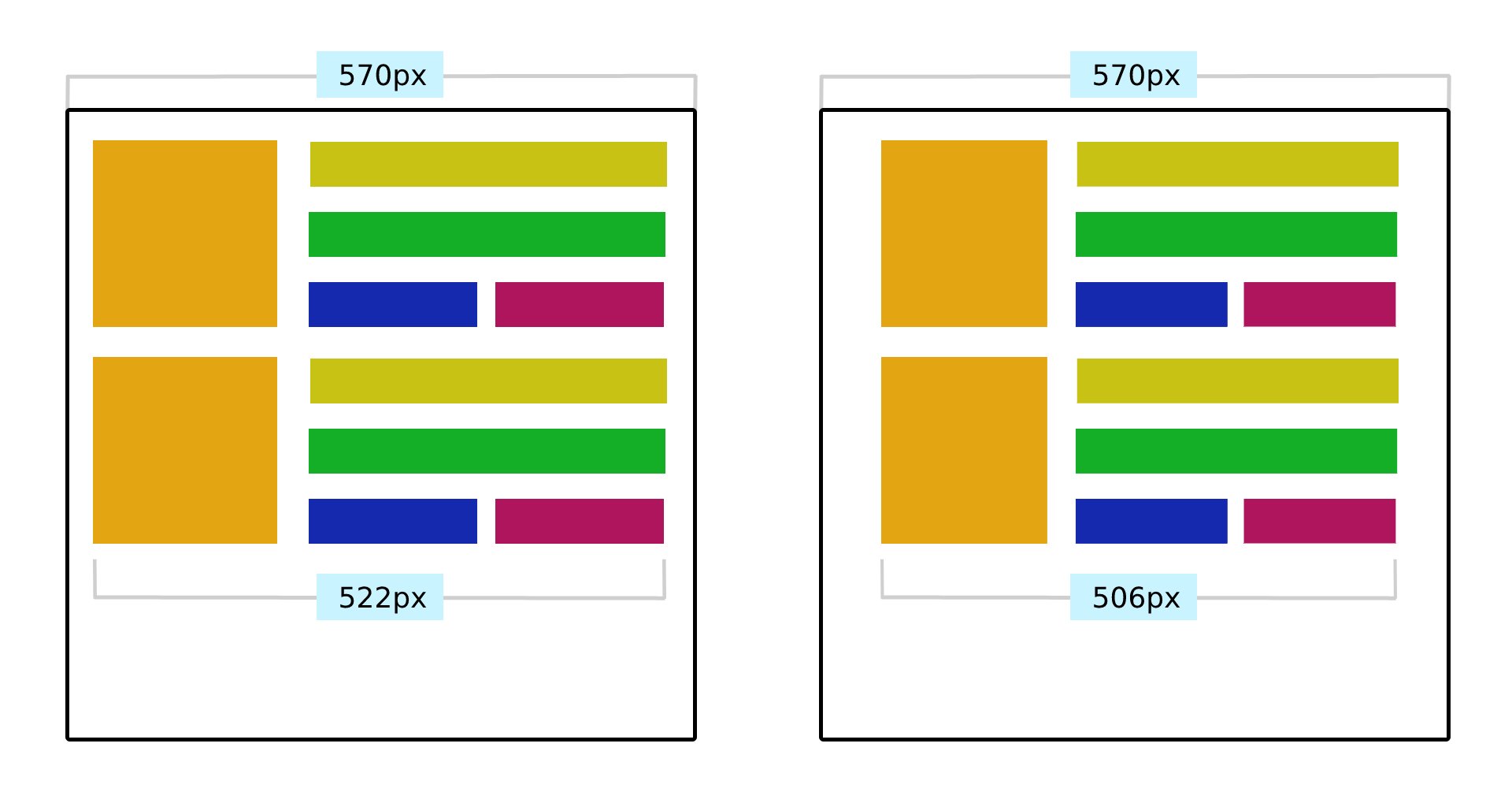

Those diagrams illustrate (1) the origin page, (2) the destination page, (3) the type of transition, and (4) the transition elements. The following is a closer look at the transition elements, i.e., the elements that receive the transition and are tracked by the API.

So, we’re working with two transition elements: a header and a card component. We will configure them one at a time.

Header Transition Elements

The default crossfade transition between the pages has already been set, so let’s start by registering the header as a transition element by assigning it a view-transition-name. First, let’s take a peek at the HTML:

<div class="header__wrapper">

<!-- Link back arrow -->

<a class="header__link header__link--dynamic" href="/">

<svg ...><!-- ... --></svg>

</a>

<!-- Page title -->

<h1 class="header__title">

<a href="/" class="header__link-logo">

<span class="header__logo--deco">Vinyl</span>Emporium </a>

</h1>

<!-- ... -->

</div>

When the user navigates between the homepage and an item details page, the arrow in the header appears and disappears — depending on which direction we’re moving — while the title moves slightly to the right. We can use display: none to handle the visibility.

/* Hide back arrow on the homepage */

.home .header__link--dynamic {

display: none;

}

We’re actually registering two transition elements within the header: the arrow (.header__link--dynamic) and the title (.header__title). We use the view-transition-name property on both of them to define the names we want to call those elements in the transition:

@supports (view-transition-name: none) {

.header__link--dynamic {

view-transition-name: header-link;

}

.header__title {

view-transition-name: header-title;

}

}

Note how we’re wrapping all of this in a CSS @supports query so it is scoped to browsers that actually support the View Transitions API. So far, so good!
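The @supports query has a JavaScript counterpart in CSS.supports(), which can help when script logic (rather than a stylesheet) needs to know whether the browser understands view-transition-name. The sketch below also checks for document.startViewTransition, the script entry point for same-document transitions. The function name and the injectable `cssApi`/`doc` parameters are my own assumptions, added so the check can run outside a browser.

```javascript
// Hypothetical helper mirroring `@supports (view-transition-name: none)`.
// `cssApi` and `doc` default to the browser globals but can be stubbed.
function supportsViewTransitions(cssApi = globalThis.CSS, doc = globalThis.document) {
  // Same property/value pair the stylesheet tests with @supports.
  const cssSupport =
    !!cssApi &&
    typeof cssApi.supports === "function" &&
    cssApi.supports("view-transition-name", "none");

  // The script API used to start same-document view transitions.
  const jsSupport = !!doc && typeof doc.startViewTransition === "function";

  return cssSupport && jsSupport;
}
```

Outside a browser (in Node, for example) neither global exists, so the function simply returns false.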

Let’s start by defining our transition elements and assigning transition names to the two elements involved in this transition: the product image (.product__image--deco) and the product disc behind the image (.product__media::before).

@supports (view-transition-name: none) {

.product__image--deco {

view-transition-name: product-lp;

}

.product__media::before {

view-transition-name: flap;

}

::view-transition-group(product-lp) {

animation-duration: 0.25s;

animation-timing-function: ease-in;

}

::view-transition-old(product-lp),

::view-transition-new(product-lp) {

/* Removed the crossfade animation */

mix-blend-mode: normal;

animation: none;

}

}

Notice how we had to remove the crossfade animation from the product image’s old (::view-transition-old(product-lp)) and new (::view-transition-new(product-lp)) states. So, for now, at least, the album disc changes instantly the moment it’s positioned back behind the album image.

But doing this messed up the transition between our global header navigation and product details pages. Navigating from the item details page back to the homepage results in the album disc remaining visible until the view transition finishes rather than running when we need it to.

Let’s configure the router to match that structure. Each route gets a loader function to handle page data.

import { createBrowserRouter, RouterProvider } from "react-router-dom";

import Category, { loader as categoryLoader } from "./pages/Category";

import Details, { loader as detailsLoader } from "./pages/Details";

import Layout from "./components/Layout";

/* Other imports */

const router = createBrowserRouter([

{

/* Shared layout for all routes */

element: <Layout />,

children: [

{

/* Homepage is going to load a default (first) category */

path: "/",

element: <Category />,

loader: categoryLoader,

},

{

/* Other categories */

path: "/:category",

element: <Category />,

loader: categoryLoader,

},

{

/* Item details page */

path: "/:category/product/:slug",

element: <Details />,

loader: detailsLoader,

},

],

},

]);

const root = ReactDOM.createRoot(document.getElementById("root"));

root.render(

<React.StrictMode>

<RouterProvider router={router} />

</React.StrictMode>

);

With this, we have established the routing structure for the app:

- Homepage (/);

- Category page (/:category);

- Product details page (/:category/product/:slug).

Every route renders inside the shared Layout component, and the matched child route determines whether the Category or Details component is displayed within it. That’s all we need as far as setting up the routes that we’ll use to transition between views. Now, we can start working on our first transition: between two category pages.
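The loader functions referenced in the router config are not shown in this article. As a minimal sketch of their shape: React Router calls a loader with an object that includes the matched URL `params`, and whatever the loader returns is what `useLoaderData()` hands to the component. The `categories` and `products` arrays, their sample values, and the object returned for category pages are hypothetical stand-ins for the app’s real data.

```javascript
// Hypothetical sample data standing in for the app's real catalog.
const categories = ["category-a", "category-b"];
const products = [
  { id: 1, category: "category-a", slug: "item-one", title: "Item One", author: "Author A" },
  { id: 2, category: "category-b", slug: "item-two", title: "Item Two", author: "Author B" },
];

// "/" has no :category param, so fall back to the first category,
// matching the "default (first) category" comment in the router config.
async function categoryLoader({ params }) {
  const category = params.category ?? categories[0];
  return {
    category,
    items: products.filter((p) => p.category === category),
  };
}

// Look up a single item from the :category and :slug params.
async function detailsLoader({ params }) {
  const item = products.find(
    (p) => p.category === params.category && p.slug === params.slug
  );
  if (!item) {
    // A React Router app would more likely throw a 404 Response here so an
    // error element can render; a plain Error keeps this sketch self-contained.
    throw new Error(`Product not found: ${params.category}/${params.slug}`);
  }
  return item;
}
```

The details item carries the `id`, `category`, `title`, and `author` fields that the Details component destructures from `useLoaderData()`.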

Transition Between Category Pages

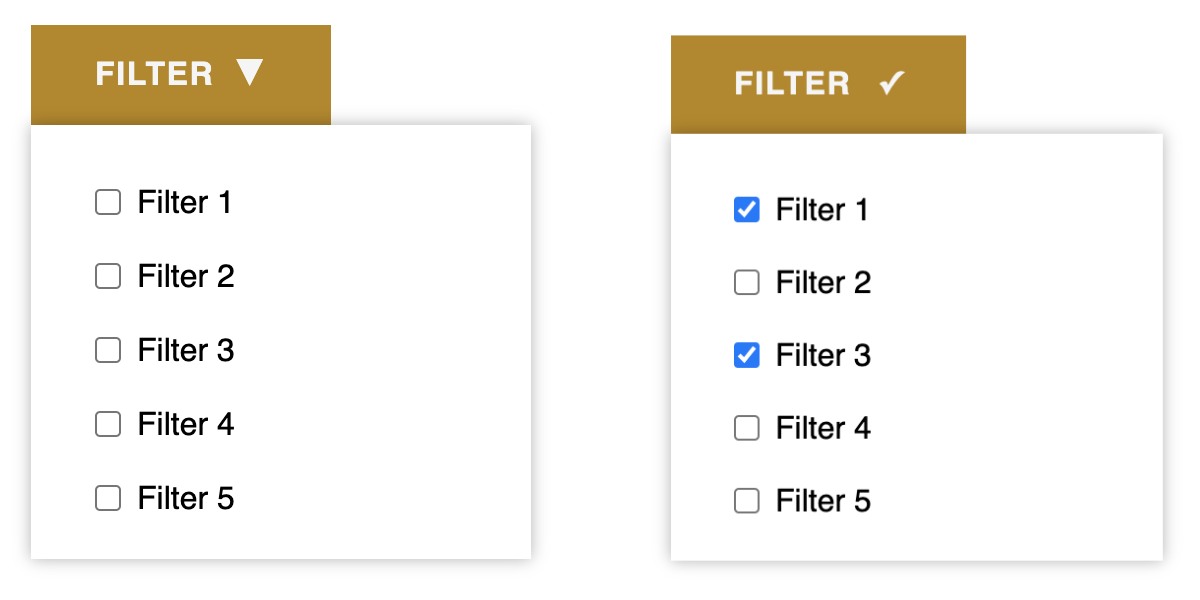

We’ll start by implementing the transition between category pages, which performs a crossfade animation between views. The only part of the UI that does not participate in the crossfade is the bottom border of the category filter menu. It visually indicates the active category filter and moves from the previously active filter to the newly active one, and we will eventually register it as a transition element of its own.

Since we’re using React Router, we work with its web package, react-router-dom, which provides the DOM bindings: the router components we need to keep the UI in sync with the current route, plus a component for navigation links. That’s also where we gain access to React Router’s View Transitions API integration.

Specifically, we will use the navigation link component (Link) with the unstable_viewTransition prop, which tells React Router to run the View Transitions API when switching page contents.

import { Link, useLocation } from "react-router-dom";

/* Other imports */

const NavLink = ({ slug, title, id }) => {

const { pathname } = useLocation();

/* Check if the current nav link is active */

const isMatch = slug === "/" ? pathname === "/" : pathname.includes(slug);

return (

<li key={id}>

<Link

className={isMatch ? "nav__link nav__link--current" : "nav__link"}

to={slug}

unstable_viewTransition

>

{title}

</Link>

</li>

);

};

const Nav = () => {

return (

<nav className={"nav"}>

<ul className="nav__list">

{categories.items.map((item) => (

/* The key belongs on the element returned from map() */

<NavLink key={item.id} {...item} />

))}

</ul>

</nav>

);

};

That is literally all we need to register and run the default crossfading view transition! That’s again because react-router-dom is giving us access to the View Transitions API and does the heavy lifting to abstract the process of setting transitions on elements and views.
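One caveat about the isMatch check in NavLink: pathname.includes(slug) matches substrings, so a category slug such as /rock (a hypothetical example) would also count as active on /rock-and-roll, or on /pop/product/rock-anthem. If your slugs can overlap like that, comparing the first path segment is stricter. A minimal sketch, assuming category slugs are single path segments such as "/rock"; the function name is my own:

```javascript
// Hypothetical stricter replacement for `pathname.includes(slug)`.
// Assumes category slugs are single segments such as "/rock" (leading slash optional).
function isActiveCategory(slug, pathname) {
  // The homepage link is active only on the homepage itself.
  if (slug === "/") return pathname === "/";

  const category = slug.replace(/^\/+/, "");

  // First non-empty segment of the current path, e.g. "rock" for "/rock/product/x".
  const [firstSegment = ""] = pathname.split("/").filter(Boolean);

  return firstSegment === category;
}
```

Inside NavLink, `const isMatch = isActiveCategory(slug, pathname);` would take the place of the original ternary.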

Creating The Transition Elements

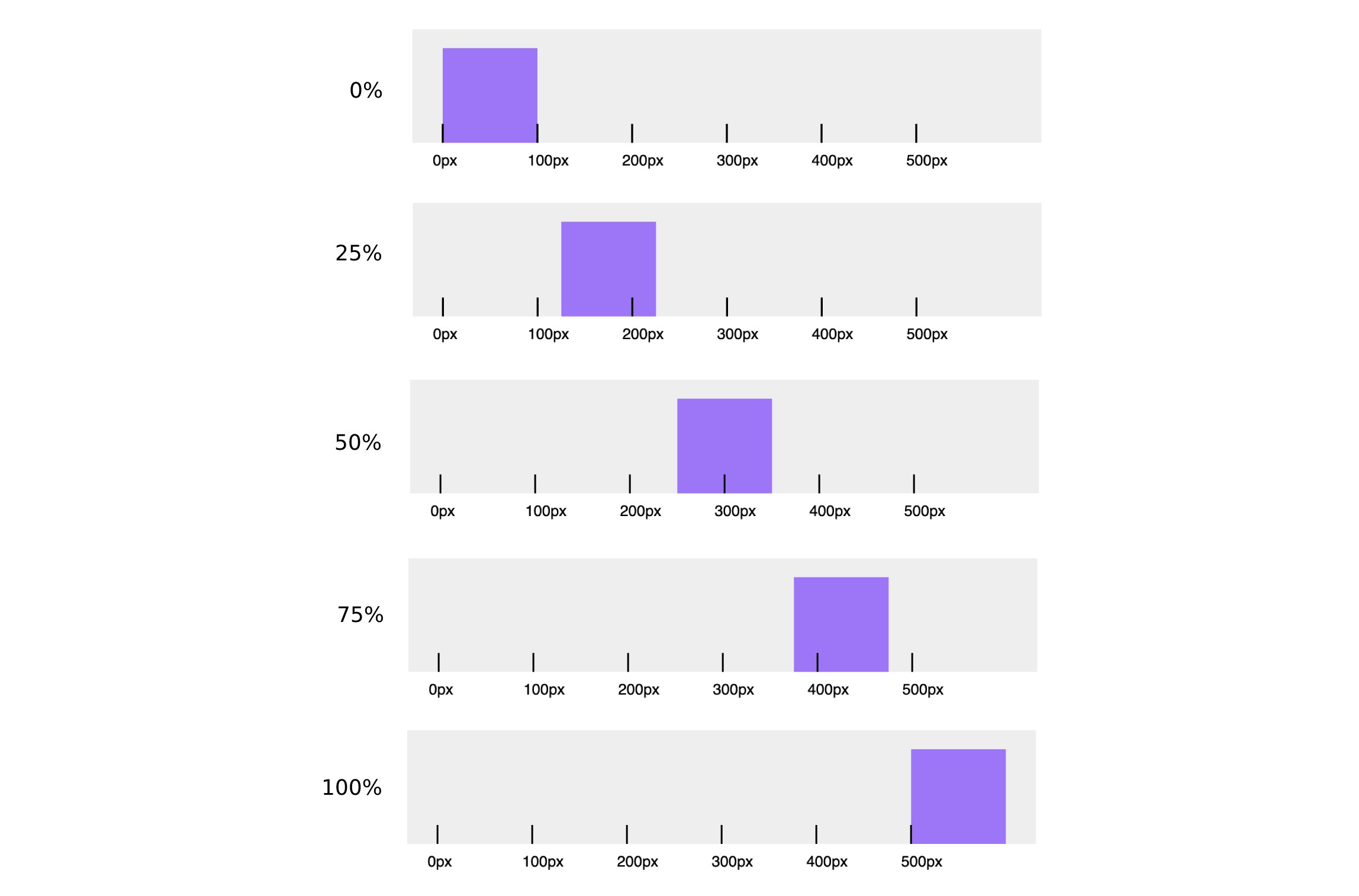

We only have one UI element that gets its own transition and a name for it, and that’s the visual indicator for the actively selected product category filter in the app’s navigation. While the app transitions between category views, it runs another transition on the active indicator that moves its position from the origin category to the destination category.

I know I described that visual indicator as a bottom border earlier, but we’re actually going to implement it as a standard HTML horizontal rule (<hr>) element and render it conditionally depending on the current route. So, the <hr> element is fully removed from the DOM when a view transition is triggered, and we re-render it under whichever NavLink component represents the current route.

We want this transition only to run if the navigation is visible, so we’ll use the react-intersection-observer helper to check if the element is visible and, if it is, assign it a viewTransitionName in an inline style.

import { useInView } from "react-intersection-observer";

/* Other imports */

const NavLink = ({ slug, title, id }) => {

const { pathname } = useLocation();

/* Track whether the nav link is visible in the viewport */

const { ref, inView } = useInView();

const isMatch = slug === "/" ? pathname === "/" : pathname.includes(slug);

return (

<li key={id}>

<Link

ref={ref}

className={isMatch ? "nav__link nav__link--current" : "nav__link"}

to={slug}

unstable_viewTransition

>

{title}

</Link>

{isMatch && (

<hr

style={{

viewTransitionName: inView ? "marker" : "",

}}

className="nav__marker"

/>

)}

</li>

);

};
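Rendering the <hr> only under the active link is what keeps the "marker" name unique. That matters because the View Transitions API expects each view-transition-name to belong to a single rendered element when the browser captures a snapshot; if two elements share a name, the transition is skipped. A hypothetical development-time check could look like this, assuming you have already collected the names yourself (for example, from each element’s computed view-transition-name):

```javascript
// Hypothetical dev-time check: given the view-transition-name values of the
// rendered elements, return every name that is used more than once.
// "none" and empty values mean "not a transition element" and are ignored.
function findDuplicateTransitionNames(names) {
  const seen = new Set();
  const duplicates = new Set();

  for (const raw of names) {
    const name = (raw ?? "").trim();
    if (name === "" || name === "none") continue;
    if (seen.has(name)) duplicates.add(name);
    seen.add(name);
  }

  return [...duplicates];
}
```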

First, let’s take a look at the Card component used in the category views. Once again, react-router-dom makes our job relatively easy, thanks to the unstable_useViewTransitionState hook. The hook accepts a URL string and returns true if there is an active page transition to that URL and the navigation was started with view transitions enabled (for example, via the unstable_viewTransition prop on Link).

That’s how we’ll make sure that our active image remains a transition element when navigating between a category view and a product view.

import { Link, unstable_useViewTransitionState } from "react-router-dom";

/* Other imports */

const Card = ({ author, category, slug, id, title }) => {

/* We'll use the same URL value for the Link and the hook */

const url = `/${category}/product/${slug}`;

/* Check if the transition is running for the item details page URL */

const isTransitioning = unstable_useViewTransitionState(url);

return (

<li className="card">

<Link unstable_viewTransition to={url} className="card__link">

<figure className="card__figure">

<img

className="card__image"

style={{

/* Apply the viewTransitionName if the card has been clicked on */

viewTransitionName: isTransitioning ? "item-image" : "",

}}

src={`/assets/${category}/${id}-min.jpg`}

alt=""

/>

{/* ... */}

</figure>

<div className="card__deco" />

</Link>

</li>

);

};

export default Card;

We know which image in the product view is the transition element, so we can apply the viewTransitionName directly to it rather than having to guess:

import {

Link,

useLoaderData,

unstable_useViewTransitionState,

} from "react-router-dom";

/* Other imports */

const Details = () => {

const data = useLoaderData();

const { id, category, title, author } = data;

return (

<>

<section className="item">

{/* ... */}

<article className="item__layout">

<div>

<img

style={{ viewTransitionName: "item-image" }}

className="item__image"

src={`/assets/${category}/${id}-min.jpg`}

alt=""

/>

</div>

{/* ... */}

</article>

</section>

</>

);

};

export default Details;
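Card and Details each build the same image path, and the Card’s url doubles as the value passed to unstable_useViewTransitionState, so the hook only reports a transition when it is given the same location the Link navigates to. One way to keep those strings from drifting apart is a tiny shared module; the helper names below are hypothetical and not part of the original code.

```javascript
// Hypothetical shared helpers so Card and Details build identical strings.

// URL of an item details page: used for <Link to={...}> and for
// unstable_useViewTransitionState(url) in Card.
function productUrl(category, slug) {
  return `/${category}/product/${slug}`;
}

// Image path used by both the card thumbnail and the details image.
function imageSrc(category, id) {
  return `/assets/${category}/${id}-min.jpg`;
}
```

In Card, `const url = productUrl(category, slug);` and `src={imageSrc(category, id)}` would replace the inline template strings, and Details could use the same `imageSrc` call.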

We’re on a good track but have two issues that we need to tackle before moving on to the final transitions.

One is that the Card component’s image (.card__image) has CSS that applies a fixed one-to-one aspect ratio and centering to keep its dimensions consistent no matter which image file is used. Once the user clicks on the Card, the .card__image in a category view becomes an .item__image in the product view and should transition into its original state, without those extra styles.

/* Card component image */

.card__image {

object-fit: cover;

object-position: 50% 50%;

aspect-ratio: 1;

/* ... */

}

/* Product view image */

.item__image {

/* No aspect-ratio applied */

/* ... */

}

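Jake Archibald has recommended using React’s flushSync function to make this work. The function forces React to apply the state updates inside the given callback synchronously, so the DOM is updated immediately. It’s meant to be used sparingly, but it’s a good fit here: the View Transitions API captures the new state after the startViewTransition callback completes, and without flushSync, React may not have applied the update to the DOM yet.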

// Assigns view-transition-name to the image before transition runs

const [isImageTransition, setIsImageTransition] = React.useState(false);

// Applies fixed-positioning and full-width image styles as transition runs

const [isFullImage, setIsFullImage] = React.useState(false);

/* ... */

// State update function, which triggers the DOM update we want to animate

const toggleImageState = () => setIsFullImage((state) => !state);

// Click handler function - toggles both states.

const handleZoom = async () => {

// Run API only if available.

if (document.startViewTransition) {

// Set image as a transition element.

setIsImageTransition(true);

const transition = document.startViewTransition(() => {

// Apply the state update and flush it to the DOM synchronously, so the

// View Transitions API captures the updated state.

flushSync(toggleImageState);

});

await transition.finished;

// Cleanup

setIsImageTransition(false);

} else {

// Fallback

toggleImageState();

}

};

/* ... */
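Stripped of React, handleZoom follows a reusable pattern: mark the element, run the update inside document.startViewTransition, wait for transition.finished, then clean up, with a plain update as the fallback. Here is a framework-agnostic sketch; the function name, the injectable `doc`, and the onStart/onFinish hooks are my own assumptions.

```javascript
// Hypothetical framework-agnostic version of the handleZoom pattern.
// `doc` defaults to the browser's document but can be stubbed for testing.
async function withViewTransition(update, { doc = globalThis.document, onStart, onFinish } = {}) {
  // Fallback: no API support, so apply the update immediately.
  if (!doc || typeof doc.startViewTransition !== "function") {
    update();
    return false;
  }

  // e.g. assign a view-transition-name before the old state is captured.
  if (onStart) onStart();

  // The callback must leave the DOM in its new state (in React, wrap the
  // state update in flushSync so it is applied synchronously).
  const transition = doc.startViewTransition(() => update());

  try {
    // Resolves once the transition animation has finished.
    await transition.finished;
  } finally {
    // e.g. remove the temporary view-transition-name.
    if (onFinish) onFinish();
  }
  return true;
}
```

Calling `withViewTransition(() => flushSync(toggleImageState), { onStart: () => setIsImageTransition(true), onFinish: () => setIsImageTransition(false) })` would follow the same steps as handleZoom.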

With this in place, all we really have to do now is toggle class names and view transition names depending on the state we defined in the previous code.

import React from "react";

import { flushSync } from "react-dom";

/* Other imports */

const Details = () => {

/* React state, click handlers, util functions... */

return (

<>

<section className="item">

{/* ... */}

<article className="item__layout">

<div>

<button onClick={handleZoom} className="item__toggle">

<img

style={{

viewTransitionName:

isTransitioning || isImageTransition ? "item-image" : "",

}}

className={

isFullImage ? "item__image item__image--active" : "item__image"

}

src={`/assets/${category}/${id}-min.jpg`}

alt=""

/>

</button>

</div>

{/* ... */}

</article>

</section>

<aside

className={

isFullImage ? "item__overlay item__overlay--active" : "item__overlay"

}

/>

</>

);

};

We are applying viewTransitionName directly on the image’s style attribute. We could have used boolean variables to toggle a CSS class and set a view-transition-name in CSS instead. The only reason I went with inline styles is to show both approaches in these examples. You can use whichever approach fits your project!
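For comparison, here is roughly what the class-based alternative could look like: a helper derives the class list from the same booleans, and the stylesheet (shown as a comment, since it isn’t JavaScript) assigns the name. The item__image--named modifier and the helper name are hypothetical.

```javascript
// Hypothetical class-based alternative to the inline viewTransitionName style.
// Assumed CSS, scoped like the rest of the demo:
//   @supports (view-transition-name: none) {
//     .item__image--named { view-transition-name: item-image; }
//   }
function itemImageClassName({ isFullImage, isTransitioning, isImageTransition }) {
  const classes = ["item__image"];

  if (isFullImage) classes.push("item__image--active");

  // Same condition the inline style uses to apply the "item-image" name.
  if (isTransitioning || isImageTransition) classes.push("item__image--named");

  return classes.join(" ");
}
```

The image would then use `className={itemImageClassName({ isFullImage, isTransitioning, isImageTransition })}` and drop the style attribute.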

Let’s round this out by refining styles for the overlay that sits behind the image when it is expanded:

.item__overlay--active {

z-index: 2;

display: block;

background: rgba(0, 0, 0, 0.5);

position: fixed;

top: 0;

left: 0;

width: 100vw;

height: 100vh;

}

.item__image--active {

cursor: zoom-out;

position: absolute;

z-index: 9;

top: 50%;

left: 50%;

transform: translate3d(-50%, -50%, 0);

max-width: calc(100vw - 4rem);

max-height: calc(100vh - 4rem);

}

Demo

The following demonstrates only the code that is directly relevant to the View Transitions API so that it is easier to inspect and use. If you want access to the full code, feel free to get it in this GitHub repo.

Conclusion

We did a lot of work with the View Transitions API in the second half of this brief two-part article series. Together, we implemented full-view transitions in two different contexts, one in a more traditional multi-page application (i.e., website) and another in a single-page application using React.

We started with transitions in an MPA because the process requires fewer dependencies than working with a framework in an SPA. We were able to set the default crossfade transition between two pages (a category page and a product page) and, in the process, we learned how to set view transition names on elements after the transition runs to prevent naming conflicts.

From there, we applied the same concept in a SPA, that is, an application that contains one page but many views. We took a React app for a “Museum of Digital Wonders” and applied transitions between full views, such as navigating between a category view and a product view. We got to see how react-router — and, by extension, react-router-dom — is used to define transitions bound to specific routes. We used it not only to set a crossfade transition between category views and between category and product views but also to set a view transition name on UI elements that also transition in the process.

The View Transitions API is powerful, and I hope you see that after reading this series and following along with the examples we covered together. What used to take a hefty amount of JavaScript is now a somewhat trivial task, and the result is a smoother user experience that irons out the process of moving from one page or view to another.

That said, the View Transitions API’s power and simplicity need the same level of care and consideration for accessibility as any other transition or animation on the web. That includes things like being mindful of user motion preferences and resisting the temptation to put transitions on everything. There’s a fine balance that comes with making accessible interfaces, and motion is certainly included.
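As one concrete way to respect motion preferences, a script can skip startViewTransition entirely when the user has asked for reduced motion and apply the DOM update instantly instead. This is a sketch under my own naming; window.matchMedia and the prefers-reduced-motion media feature are standard, while the `win`/`doc` parameters exist only so the logic can be exercised outside a browser. A CSS-only option is to shorten or disable the ::view-transition-* animations inside a @media (prefers-reduced-motion: reduce) block.

```javascript
// True when the user has requested reduced motion at the OS/browser level.
function prefersReducedMotion(win = globalThis.window) {
  if (!win || typeof win.matchMedia !== "function") return false;
  return win.matchMedia("(prefers-reduced-motion: reduce)").matches;
}

// Hypothetical wrapper: animate only when the API exists and motion is welcome.
// Returns the ViewTransition object, or null when the update was applied instantly.
function updateRespectingMotion(update, { win = globalThis.window, doc = globalThis.document } = {}) {
  const canTransition = !!doc && typeof doc.startViewTransition === "function";

  if (!canTransition || prefersReducedMotion(win)) {
    update();
    return null;
  }

  return doc.startViewTransition(update);
}
```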

References

- CSS View Transitions Module Level 1 Specification (W3C)

- View Transitions API Explainer (GitHub repo)

- View Transitions API (MDN)

- “Smooth And Simple Transitions With The View Transitions API,” Jake Archibald