Chris Mills brushes up his shorthand and shows how the MediaStream Recording API in modern browsers can be used to capture audio directly from the user’s device. Inching ever closer to the capabilities of native software, it truly is an exciting time to be a web developer.

The MediaStream Recording API makes it easy to record audio and/or video streams. When used with MediaDevices.getUserMedia(), it provides an easy way to record media from the user’s input devices and instantly use the result in web apps. This article shows how to use these technologies to create a fun dictaphone app.

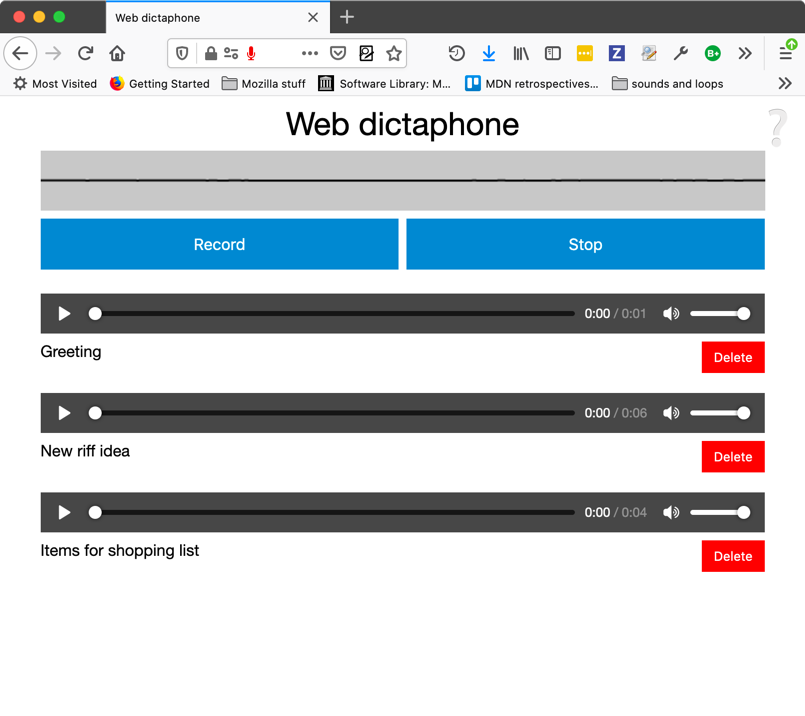

A sample application: Web Dictaphone

To demonstrate basic usage of the MediaRecorder API, we have built a web-based dictaphone. It allows you to record snippets of audio and then play them back. It even gives you a visualisation of your device’s sound input, using the Web Audio API. We’ll just concentrate on the recording and playback functionality in this article, for brevity’s sake.

You can see this demo running live, or grab the source code on GitHub. The MediaRecorder API is well supported in modern desktop browsers, but support in mobile browsers is currently patchy.

Basic app setup

To grab the media stream we want to capture, we use getUserMedia(). We then use the MediaRecorder API to record the stream, and output each recorded snippet into the source of a generated <audio> element so it can be played back.

We’ll first declare some variables for the record and stop buttons, and the <article> that will contain the generated audio players:

    const record = document.querySelector('.record');
    const stop = document.querySelector('.stop');
    const soundClips = document.querySelector('.sound-clips');

Next, we set up the basic getUserMedia structure:

    if (navigator.mediaDevices && navigator.mediaDevices.getUserMedia) {
      console.log('getUserMedia supported.');
      navigator.mediaDevices.getUserMedia(
        // constraints - only audio needed for this app
        {
          audio: true
        })
        // Success callback
        .then(function(stream) {

        })
        // Error callback
        .catch(function(err) {
          console.log('The following `getUserMedia` error occurred: ' + err);
        });
    } else {
      console.log('getUserMedia not supported on your browser!');
    }

The whole thing is wrapped in a test that checks whether getUserMedia is supported before running anything else. Next, we call getUserMedia() and define:

- The constraints: Only audio is to be captured for our dictaphone.

- The success callback: This code is run once the getUserMedia call has completed successfully.

- The error/failure callback: This code is run if the getUserMedia call fails for whatever reason.

Note: All of the code below is found inside the getUserMedia success callback in the finished version.
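
The error callback above simply logs the raw error. As a rough sketch of how it could report something friendlier, the helper below maps a few of the error names that getUserMedia is specified to reject with onto readable messages. The function name `describeGetUserMediaError` and the message wording are assumptions made for illustration; they are not part of the Web Dictaphone source.

```javascript
// Hypothetical helper (not in the original demo): turns a getUserMedia
// rejection into a human-readable message based on the error's name.
function describeGetUserMediaError(err) {
  switch (err && err.name) {
    case 'NotAllowedError':
      return 'Microphone access was denied by the user or by browser policy.';
    case 'NotFoundError':
      return 'No audio input device was found.';
    case 'NotReadableError':
      return 'The microphone is in use or could not be read.';
    default:
      // Fall back to whatever message the error carries
      return 'getUserMedia failed: ' + (err && err.message ? err.message : String(err));
  }
}

// Possible use inside the error callback (browser only):
// .catch(function(err) {
//   console.log(describeGetUserMediaError(err));
// });
```

Keeping the mapping in a plain function means it can be exercised without a microphone or a permissions prompt.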

Capturing the media stream

Once getUserMedia has created a media stream successfully, you create a new MediaRecorder instance with the MediaRecorder() constructor and pass it the stream directly. This is your entry point into using the MediaRecorder API: the stream is now ready to be captured into a Blob, in the default encoding format of your browser.

    const mediaRecorder = new MediaRecorder(stream);

The MediaRecorder interface has a number of methods that let you control recording of the media stream; in Web Dictaphone we use just two of them, and listen for some events. First of all, MediaRecorder.start() is used to start recording the stream once the record button is pressed:

    record.onclick = function() {
      mediaRecorder.start();
      console.log(mediaRecorder.state);
      console.log("recorder started");
      record.style.background = "red";
      record.style.color = "black";
    }

When the MediaRecorder is recording, the MediaRecorder.state property will return a value of “recording”.
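
Because start() throws an InvalidStateError when the recorder is not “inactive”, and stop() throws one when it is, it can be worth checking the state property before calling either. The following is a minimal sketch of that idea; `toggleRecording` is a hypothetical helper, not something the demo defines, and it only assumes an object that behaves like a MediaRecorder (a state property plus start() and stop() methods).

```javascript
// Hypothetical helper: starts or stops a recorder depending on its state.
// `recorder` is assumed to expose state, start() and stop() like MediaRecorder.
function toggleRecording(recorder) {
  if (recorder.state === 'inactive') {
    // start() is only valid while the recorder is inactive
    recorder.start();
  } else {
    // 'recording' or 'paused': stop() is valid in both states
    recorder.stop();
  }
  return recorder.state;
}

// Possible use with a single button (browser only):
// record.onclick = function() {
//   console.log(toggleRecording(mediaRecorder));
// };
```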

As recording progresses, we need to collect the audio data. We register an event handler to do this using mediaRecorder.ondataavailable:

    let chunks = [];

    mediaRecorder.ondataavailable = function(e) {
      chunks.push(e.data);
    }

Last, we use the MediaRecorder.stop() method to stop the recording when the stop button is pressed, and finalize the Blob ready for use somewhere else in our application.

    stop.onclick = function() {
      mediaRecorder.stop();
      console.log(mediaRecorder.state);
      console.log("recorder stopped");
      record.style.background = "";
      record.style.color = "";
    }

Note that the recording may also stop naturally if the media stream ends (e.g. if you were grabbing a song track and the track ended, or the user stopped sharing their microphone).
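
The chunk handling described above can be wrapped in a small object, which helps if you call start() with a timeslice argument (for example mediaRecorder.start(1000)) so that dataavailable fires repeatedly during a recording rather than once at the end. This is only a sketch under that assumption: `createChunkCollector` and its method names are invented for illustration and do not appear in the demo.

```javascript
// Hypothetical helper: collects the data delivered by dataavailable events.
function createChunkCollector() {
  let chunks = [];
  return {
    // Stores a chunk, skipping events that carry no data
    handle(event) {
      if (event.data && event.data.size > 0) {
        chunks.push(event.data);
      }
    },
    // Total number of bytes collected so far
    totalSize() {
      return chunks.reduce(function(sum, chunk) { return sum + chunk.size; }, 0);
    },
    // Returns the collected chunks and resets the collector for the next clip
    flush() {
      const collected = chunks;
      chunks = [];
      return collected;
    }
  };
}

// Possible use (browser only):
// const collector = createChunkCollector();
// mediaRecorder.ondataavailable = collector.handle;
// mediaRecorder.onstop = function() {
//   const blob = new Blob(collector.flush(), { type: mediaRecorder.mimeType });
// };
```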

Grabbing and using the blob

When recording has stopped, the state property returns a value of “inactive”, and a stop event is fired. We register an event handler for this using mediaRecorder.onstop, and construct our blob there from all the chunks we have received:

    mediaRecorder.onstop = function(e) {
      console.log("recorder stopped");

      const clipName = prompt('Enter a name for your sound clip');

      // Build the player, label and delete button for this clip
      const clipContainer = document.createElement('article');
      const clipLabel = document.createElement('p');
      const audio = document.createElement('audio');
      const deleteButton = document.createElement('button');

      clipContainer.classList.add('clip');
      audio.setAttribute('controls', '');
      // textContent keeps user-entered text from being parsed as HTML
      deleteButton.textContent = "Delete";
      clipLabel.textContent = clipName;

      clipContainer.appendChild(audio);
      clipContainer.appendChild(clipLabel);
      clipContainer.appendChild(deleteButton);
      soundClips.appendChild(clipContainer);

      // Combine the recorded chunks into one Blob, then reset for the next clip
      const blob = new Blob(chunks, { 'type' : 'audio/ogg; codecs=opus' });
      chunks = [];
      const audioURL = window.URL.createObjectURL(blob);
      audio.src = audioURL;

      deleteButton.onclick = function(e) {
        let evtTgt = e.target;
        evtTgt.parentNode.parentNode.removeChild(evtTgt.parentNode);
      }
    }

Let’s go through the above code and look at what’s happening.

First, we display a prompt asking the user to name their clip.

Next, we create an HTML structure like the following, inserting it into our clip container, which is an <article> element.

    <article class="clip">
      <audio controls></audio>
      <p>_your clip name_</p>
      <button>Delete</button>
    </article>

After that, we create a combined Blob out of the recorded audio chunks, and create an object URL pointing to it, using window.URL.createObjectURL(blob). We then set the value of the <audio> element’s src attribute to the object URL, so that when the play button is pressed on the audio player, it will play the Blob.
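
The Blob above is labelled as Ogg/Opus, but the container a browser actually records into varies. One way to handle this, sketched below, is to ask MediaRecorder.isTypeSupported() which format is available and then label the Blob with the recorder’s own mimeType property. The helper name `pickMimeType` and the candidate list are assumptions chosen for illustration; the support check is passed in as a function so the selection logic does not depend on a browser.

```javascript
// Hypothetical helper: returns the first candidate type accepted by the
// supplied support check, or an empty string if none is accepted.
function pickMimeType(candidates, isSupported) {
  return candidates.find(function(type) { return isSupported(type); }) || '';
}

// Possible use (browser only):
// const mimeType = pickMimeType(
//   ['audio/webm;codecs=opus', 'audio/ogg;codecs=opus', 'audio/mp4'],
//   function(type) { return MediaRecorder.isTypeSupported(type); }
// );
// const mediaRecorder = new MediaRecorder(stream, mimeType ? { mimeType: mimeType } : undefined);
// ...
// const blob = new Blob(chunks, { type: mediaRecorder.mimeType });
```

An empty result means none of the candidates is supported, in which case the sketch falls back to the browser’s default format.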

Finally, we set an onclick handler on the delete button to be a function that deletes the whole clip HTML structure.
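
Deleting the clip’s HTML does not by itself release the object URL created for it; the browser keeps the Blob reachable until URL.revokeObjectURL() is called or the page is unloaded. As a hedged sketch of how the delete handler could also free that memory, the hypothetical `createClipUrl` helper below pairs each URL with a release function. The URL API is passed in as a parameter (window.URL in a browser), which is a choice made here for illustration rather than something the demo does.

```javascript
// Hypothetical helper: creates an object URL for a blob and returns it
// together with a function that revokes it at most once.
function createClipUrl(blob, urlApi) {
  const url = urlApi.createObjectURL(blob);
  let released = false;
  return {
    url: url,
    release: function() {
      if (!released) {
        urlApi.revokeObjectURL(url);
        released = true;
      }
    }
  };
}

// Possible use inside the onstop handler (browser only):
// const clip = createClipUrl(blob, window.URL);
// audio.src = clip.url;
// deleteButton.onclick = function() {
//   clipContainer.remove();
//   clip.release();
// };
```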

So that’s basically it — we have a rough and ready dictaphone. Have fun recording those Christmas jingles! As a reminder, you can find the source code, and see it running live, on the MDN GitHub.

This article is based on Using the MediaStream Recording API by Mozilla Contributors, and is licensed under CC-BY-SA 2.5.

About the author

Chris Mills manages the MDN web docs writers’ team at Mozilla, which involves spreadsheets, meetings, writing docs and demos about open web technologies, and occasional tech talks at conferences and universities. He used to work for Opera and W3C, and enjoys playing heavy metal drums and drinking good beer.