File this under stuff you don’t need to know just yet, but I think the :has CSS selector is going to have a big impact on how we write CSS in the future. In fact, if it ever ships in browsers, I think it would break my mental model for how CSS fundamentally works, because it would be the first example of a parent selector in CSS.

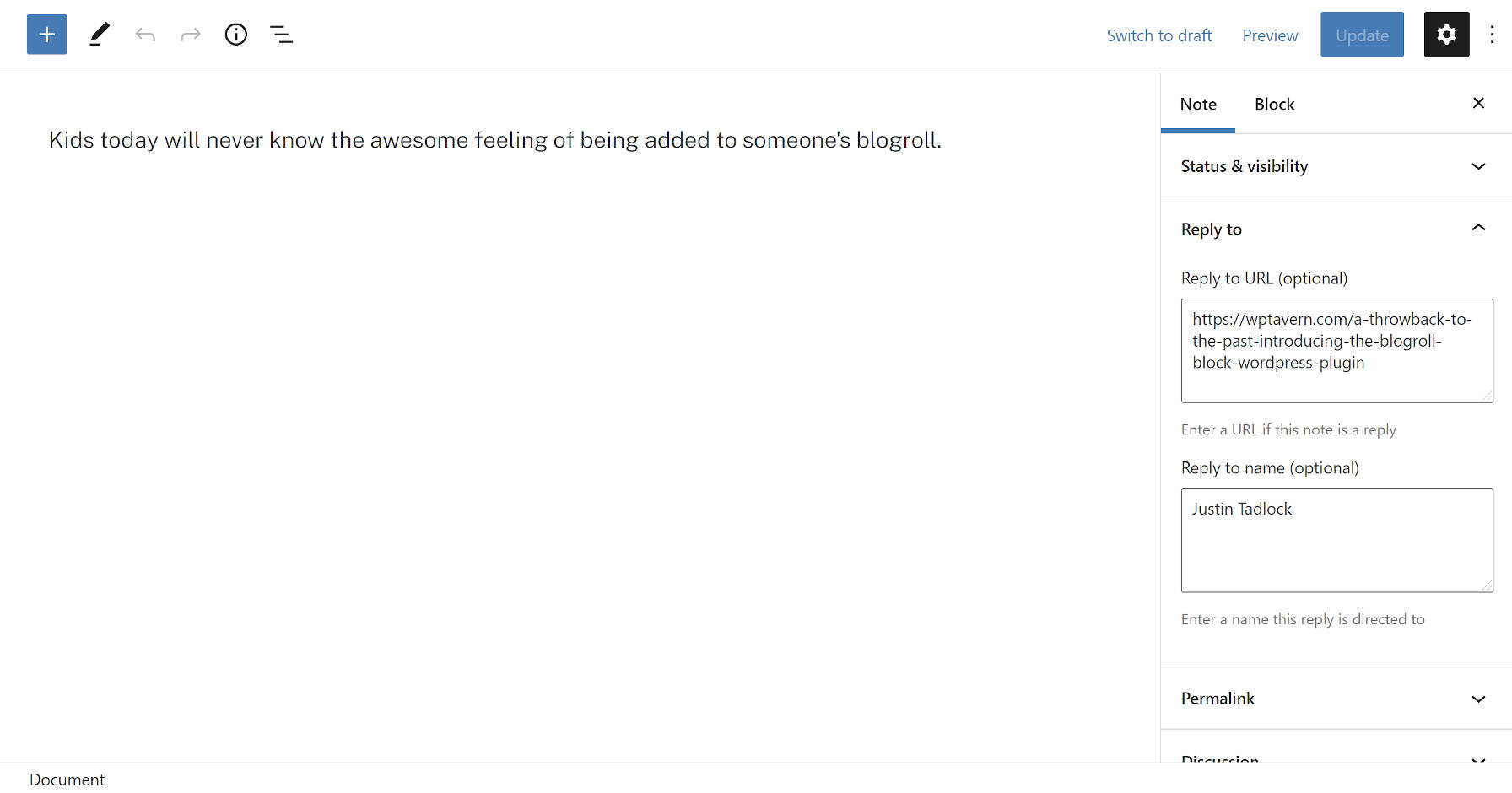

Before I explain all that, let’s look at an example:

```css
div:has(p) {
  background: red;
}
```

Although it’s not supported in any browser today, this line of CSS would change the background of a div only if it has a paragraph within it. So, if there’s a div with no paragraphs in it, then these styles would not apply.
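The selector inside :has() is matched relative to the element being styled, so the usual combinators still apply. Here is a minimal sketch, following the syntax in the spec, of the difference between "contains a paragraph anywhere" and "has a paragraph as a direct child" (the colors are arbitrary):

```css
/* Matches a div with a <p> anywhere inside it, at any depth */
div:has(p) {
  background: red;
}

/* Matches only a div that has a <p> as a direct child */
div:has(> p) {
  background: orange;
}
```

Given `<div><section><p>Hi</p></section></div>`, the outer div matches the first selector but not the second, because the paragraph is a grandchild rather than a child.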

That’s pretty handy and yet exceptionally weird, right? Here’s another example:

```css
div:has(+ div) {
  color: blue;
}
```

This CSS would only apply to a div that is immediately followed by another div.
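The + combinator only looks at the very next sibling. For comparison, here is a sketch using the general sibling combinator ~, which under the same relative-selector rules would match a div that has another div anywhere after it among its siblings:

```css
/* Only a div whose very next sibling element is a div */
div:has(+ div) {
  color: blue;
}

/* Any div with at least one later sibling div, adjacent or not */
div:has(~ div) {
  color: green;
}
```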

The way I think about :has is this: it’s a parent selector pseudo-class. That is CSS-speak for “it lets you style an element if it contains a particular child or is followed by a particular element.” This is so utterly strange to me because it breaks my mental model of how CSS works. This is how I’m used to thinking about CSS:

```css
/* Not valid CSS, just an illustration */
.parent {
  .child {
    color: red;
  }
}
```

You can only style down, from parent to child, but never back up the tree. :has completely changes this. Up until now there have been no parent selectors in CSS, and there are some good reasons why: because of the way browsers parse HTML and CSS, selecting a parent based on what it contains means a change deep inside an element could force the browser to re-check and restyle its ancestors, which could lead to all sorts of performance concerns.
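For contrast, here is the illustration above written as real CSS, followed by the "upward" version that :has would allow. This is a sketch; `.parent` and `.child` are placeholder class names:

```css
/* Styling down: any .child nested inside a .parent turns red */
.parent .child {
  color: red;
}

/* Styling up: the .parent itself changes when it contains a .child */
.parent:has(.child) {
  border: 1px solid red;
}
```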

Putting those concerns aside, though, if I just sit down and think about all the ways I might use :has today, I sort of get a headache. It would open up a Pandora’s box of things that have never been possible with CSS alone.

Okay, one last example: let’s say we want to only apply styles to links that have images in them:

```css
a:has(> img) {
  border: 20px solid white;
}
```

Because of the > combinator, this only matches links that have an image as a direct child. This would be helpful from time to time. I can also see :has being used for conditionally adding margin and padding to elements depending on their content. That would be neat.
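A minimal sketch of that conditional-spacing idea, assuming a hypothetical `.card` component whose top padding should disappear when it holds an image as a direct child (for example, a cover image placed first in the card):

```css
/* Hypothetical .card component: default padding all around */
.card {
  padding: 20px;
}

/* If the card has an <img> as a direct child, drop the top padding
   so an image placed first can sit flush against the card's top edge.
   .card:has(> img) is more specific than .card, so it wins. */
.card:has(> img) {
  padding-top: 0;
}
```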

Although :has isn’t supported in browsers right now (probably for those performance reasons), it is part of the CSS Selectors Level 4 specification, which also defines the extremely useful :not pseudo-class. Unlike :has, :not does have pretty decent browser support, and I used it for the first time the other day:

```css
ul li:not(:first-of-type) {
  color: red;
}
```

That’s great. I also love how gosh darn readable it is: you don’t ever have to have seen this line of code to understand what it does.
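Selectors Level 4 also lets :not() take a comma-separated list, where the older Level 3 form only accepted a single simple selector. A sketch, with the caveat that browser support for the list form arrived later than support for the basic form:

```css
/* Every list item except the first and the last one */
ul li:not(:first-of-type, :last-of-type) {
  color: red;
}
```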

Another way you can use :not is for margins:

```css
ul li:not(:last-of-type) {
  margin-bottom: 20px;
}
```

So every list item except the last one gets a bottom margin. This is useful if you have a bunch of elements stacked in a card and only want space between them, not after the final one.
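A sketch of the card case, assuming a hypothetical `.card` element whose direct children are stacked vertically. It uses :last-child rather than :last-of-type, because a card’s children are usually different element types, and :last-of-type would exempt the last element of each type instead of only the final child:

```css
/* Hypothetical card markup:
   <div class="card"><h2>Title</h2><p>Text</p><a href="#">Link</a></div> */
.card > *:not(:last-child) {
  margin-bottom: 20px;
}
```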

CSS Selectors Level 4 is also the same spec that has the :is pseudo-class, which can already be used like this in a lot of browsers:

```css
:is(section, article, aside, nav) :is(h1, h2, h3, h4, h5, h6) {
  color: #BADA55;
}

/* ... which would be the equivalent of: */
section h1, section h2, section h3, section h4, section h5, section h6,
article h1, article h2, article h3, article h4, article h5, article h6,
aside h1, aside h2, aside h3, aside h4, aside h5, aside h6,
nav h1, nav h2, nav h3, nav h4, nav h5, nav h6 {
  color: #BADA55;
}
```

So that’s it! :has might not be useful today, but its cousins :is and :not can be fabulously helpful already, and these three pseudo-classes are only a tiny glimpse of what’s available in this new spec.

The post Did You Know About the :has CSS Selector? appeared first on CSS-Tricks.