The irony doesn’t escape me that I’m currently writing a how-to guide on … how-to guides.

But I've had my fair share of experiences writing them for HubSpot, like How to Give a Persuasive Presentation, How to Develop a Content Strategy: A Start-to-Finish Guide, and How to Write a Request for Proposal. So, it came naturally.

If you’ve never written a how-to guide, however, they can be pretty intimidating. They’re meant to be tactical and usually must be very detailed and specific to be effective. But this doesn’t mean they have to be hard to create.

In this article, we’ll explore how to structure and write a comprehensive how-to guide. We’ll also look at some impressive examples of how-to guides for inspiration and tips to take yours to the next level. Let's dive in.

Great how-to guides come with many benefits for businesses. You can use them internally to train staff and standardize operations or as part of your content marketing. In this article, we’re focusing on the latter.

Why Creating a How-to Guide Is Important

Today, people have more access to information than ever before.

They expect to find everything they need with a few clicks on their smartphones, and businesses are no exception to this expectation.

Here are some of the biggest benefits how-to guides offer businesses.

Benefits of How-to Guides

They support and empower customers.

People want to be able to do things on their own (make a purchase, set up a product, learn a skill, etc.) without asking for guidance. In fact, 44% of B2B customers opt for self-service channels, according to a report by Heretto.

This is one area where a well-crafted how-to guide can help.

How-to guides can help customers troubleshoot issues and answer common questions on their own. For example, I recently found this guide from Apple super helpful when trading in an old iPhone.

When you provide these resources, customers don’t have to wait in line for a live chat or phone call or sit in frustration. They can simply visit your website and access the information they need when they need it.

They offer 24/7 access to help.

Your customer service and tech support staff can’t be available 24/7, especially if you’re a small business. Thankfully, how-to guides can provide help even when a person can’t. Customers don’t have to wait around and can solve their issues faster.

They save your support team time.

In my experience, having how-to guides available also helps free up your support team to focus on issues that really require their attention.

Rather than answering simple questions like “How do I set up my new computer?” or “How do I use the automation tool?” they can send along a guide to hopefully help and then move on to solving more difficult, user-specific problems.

Some of these guides can even be used for sales enablement, but that’s another topic to cover. Learn more about it here.

They educate your audience.

How-to guides are also valuable opportunities to reach new audiences with applicable, high-quality content. Consider how many people search “How to…” on Google each day.

These search queries alone demonstrate just how vast the opportunities to reach an audience with “how-tos” are.

If your business can reach your consumers with informative, relevant answers to their questions, those users will begin to see your brand as an authority on the topic.

You’ll offer them valuable knowledge and help build their trust in your brand in the process. Down the road, those readers could become customers and loyal brand advocates who spread the word about your products or services.

Challenges of How-to Guides

Clearly, how-to guides offer brands a wealth of opportunity, but they are not without their challenges. Here are some potential drawbacks to consider.

They need to be detailed and specific.

One glance at the bookshelf by my desk and I have angry flashbacks to how vague and incomplete the assembly instructions were. I’m sure many of us have had similar experiences, so don’t let your brand be the one to cause them.

When creating a how-to guide, be as specific and detailed as possible. Include visual descriptions or, better yet, images or examples for people to follow. You may even want to opt for a video over text, depending on the topic.

The more precise you can be with your instructions in a how-to guide, the more effective it will likely be. This is all part of having a good customer experience.

They can be time-consuming to create.

Because great how-to guides need to be so thorough, they can be extremely time-consuming to create. If your team is going to create one, make sure they allocate enough time and resources.

They need to be easily accessible.

A how-to guide is only valuable if it can be easily found and consumed by your audience when they need it. That means you have to publish and promote it effectively.

Will you include a link or QR code in related materials? Will you have a section on your website dedicated to how-to guides? Have a plan in place for how you will get your how-to guides in front of the people who need them.

Popular ways to share your guide include:

They can become dated quickly.

Lastly, how-to guides can quickly become dated, especially if they are related to tech or software. I can’t tell you how many social media how-to guides I’ve written that have become obsolete in months as the apps made updates and best practices evolved.

If you invest in how-to guides, ensure your team is ready to make updates as needed.

Now that you know what you’re getting into when creating a how-to guide, let’s break down the steps involved.

Note: If you still need assistance, HubSpot’s Guide Creator is here. It’s a new tool providing a quick, easy-to-use solution for creating how-to guides. The Guide Creator is excellent for documenting your business's products, systems, and processes — and it’s free.

1. Identify your topic.

Start with online community forums like Quora or feedback from your community to figure out the top concerns or challenges your target audience might have.

That information will help you determine what content to include in your guide.

For instance, if you're writing “How to Create a Content Marketing Strategy,” you could follow these steps:

- Start by looking at responses to "What is content marketing?" on Quora and other online forums. These user-generated responses can help you identify common themes, misconceptions, or confusion around content marketing.

- Contact your network for common pain points about content marketing. For example, you might find that most of your audience says content marketing is their priority — but they don't know how to do it on a budget.

This research will give you the information you need to create a how-to guide that addresses relevant concerns about your topic. The video below is an example of a how-to guide for content marketing that addresses specific audience pain points.

2. Understand your target audience.

Who’s reading this guide? Are they a new customer or user? Are they a beginner in the subject matter? Your audience and what they want to accomplish will inform everything else about your how-to guide, so make sure you fully understand them. It’s their behavior you need to replicate and influence.

Reviewing your buyer persona with your topic in mind is a good place to start.

3. Research your topic thoroughly.

Even if you know a topic incredibly well, do your external research. Understanding a topic well can make writing a how-to guide on the subject more difficult, as you might make assumptions about what to cover. Challenge your bias.

Follow these tips for your research:

- Look at your competitors to see how they write their guides.

- Conduct keyword research to see the words people who want to learn this skill use to search for instructions.

- Use Ahrefs, SEMrush, or another SEO tool to find more similar keywords and queries. This can help you create a well-rounded piece that will answer all your readers' questions and help you rank on Google.

- Seek out expert opinions, popular books, and other resources that can give you details to make your how-to guide stand out.

To illustrate, say you're writing a blog post on “How to Make an Omelette.” Upon researching, you may find Simply Recipes' post at the top of your Google search results.

Diving into the post, you'll see Simply Recipes has sections including:

- French Vs. American Omelettes

- The Best Pan for Making Omelettes

- Ideas for Omelette Fillings

If you want your how-to guide on omelettes to be the best, you'll want to cover as much as, if not more than, what Simply Recipes has in its post. This may mean including more omelette types, approaches to making them, or expert tips from well-known chefs.

As you research, remember to fact-check your sources. You want to ensure that your guide is trustworthy and will not cause you legal or other challenges later.

4. Create a step-by-step outline.

Now that you’ve researched, it’s time to organize your ideas.

If you know the process well, write down all the steps you would take from memory. Then, combine this with any new ideas you learned during research to create a step-by-step outline for your guide.

Remember: Many readers will use your how-to guide as a list of instructions. So, you may need to revise your outline several times to ensure that each step in your strategy is straightforward.

But what do you do if things aren’t so clear-cut?

For example, according to this how-to guide from CNET, there are four different ways to take a screenshot on a Mac computer.

The proper instructions for the user will depend on their computer and operating system. In situations like this, you need to decide which approach you’ll focus on and make it clear to the reader, or be prepared to cover all of the options.

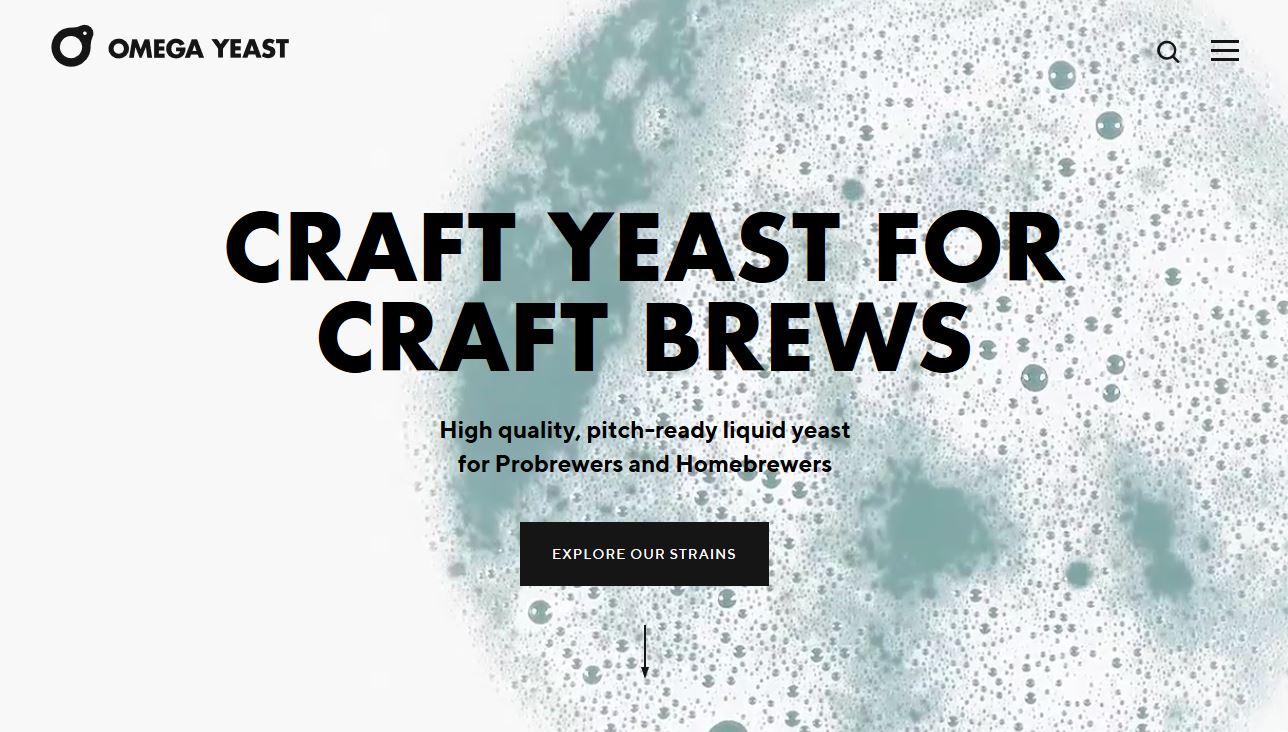

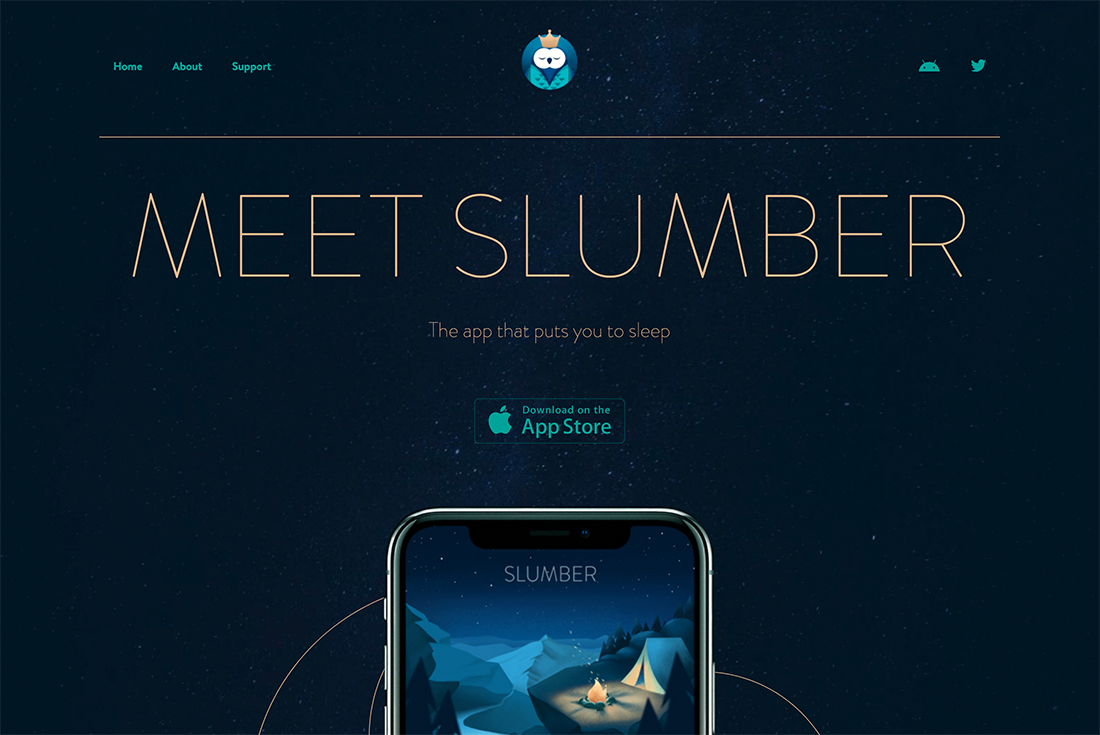

5. Add valuable images, videos, and descriptions.

Use visuals like GIFs, images, screenshots, and videos to supplement your instructions.

The best visuals make your instructions more straightforward and quicker to understand.

For example, in the Great British Bake Off technical competitions, bakers are often asked to bake recipes they’ve never seen before.

If a baker isn’t sure what the final product should look like, they rely on instructions to get it right.

Written instructions can be interpreted differently, and if bakers get something wrong, they have to start over, which takes up more of their time.

This is an excellent example of what can happen when users can’t picture what they should do. Images and videos can help avoid this confusion.

This is even easier if you opt for a video like Purple does here:

Pro tip: Save screenshots as you go.

You can save yourself a lot of time and trouble by taking screenshots of your steps as you test them out, rather than trying to go back and do it later.

You can save even more time and trouble by automating the process. Tools like HubSpot's free Guide Creator can automatically capture both the steps and the images as you complete the task.

Make sure you include a screenshot for each step, and think about what image would most accurately represent that step. Remember that a minor task that may be obvious to you, a seasoned user, may not be obvious to everyone.

6. Review your outline and research from the reader's point of view.

Ask yourself: “Why do my readers need, or want to know, this?”

Understanding the high-level purpose behind a topic can encourage you to write with empathy. Additionally, it will help you create content that accurately meets your reader's expectations and needs.

For instance, when writing "How to Create a Facebook Group for Your Business," I took some time to learn that readers might search this topic if:

- They are seeking out new ways to connect with customers

- They want to create a stronger sense of brand community

- They want to raise awareness about their products or services

As a result, I wrote:

“A group is a good idea if you’re interested in connecting your customers or leads to one another, you want to facilitate a sense of community surrounding your brand, or you’re hoping to showcase your brand as a thought leader in the industry.

However, a group is not a good idea if you want to use it to raise awareness about your products or services or simply use it to post company announcements.”

In the example above, I targeted a few different segments of readers with diverse purposes to help them determine whether this how-to guide would even help them meet their goals.

Ultimately, understanding the purpose behind your how-to guide is critical for ensuring you target all the various components or angles of the topic.

7. Test the process.

Once you’ve finished writing your guide, it’s time to test it out. Follow the instructions exactly as written and look for opportunities to add more clarity.

Pro tip: If you can, have someone else test the process out for you. You never know how a word choice or set of instructions will impact another person, so it’s wise to test it with a small group of friends or colleagues.

Clearly state what you’re looking for, expect questions and critical feedback, and connect with a diverse group of people for the most valuable insights.

8. Link to other resources.

It's also a good idea to point readers to other valuable resources if they want to learn more. Link to other relevant blog posts, pillar pages, or ebooks so readers can find follow-up information on topics mentioned in your how-to guide.

They can be by you or from other creators, so long as you cite your source. This is another way to build trust and authority with your audience.

How-to Guide Template

If you’re unsure how to structure your how-to guide, here is a simple template you can follow.

Section 1: Overview

This is a summary/introduction to what your guide will cover. Include the purpose of the guide, why the information matters, and what the reader can accomplish after reading it.

You can also use this part of the guide to go into background information like I did with pros and cons.

Section 2: Materials Needed

List any materials or tools that will be needed to complete the process outlined in your guide. They could be digital or tangible.

Section 3: Step-by-Step Instructions

Break down the process into clear, concise steps. Include formatting to make it easy to follow and images or videos to help you explain further.

Optional Section 4: Tips

Know some extra tips, tricks, or hacks that can help someone get the most out of your process? Share them here for added value.

Optional Section 5: Troubleshooting/FAQs

What are some common questions or issues people run into when going through this process? Address them here.

Optional Section 6: Additional Resources

Are there any other educational resources someone going through this guide would find valuable? Share them here.

Section 7: Conclusion

Sum up the guide and reiterate the benefits of following the steps outlined. Use this time to build your audience’s confidence in their ability to act after completing this guide and also give them next steps or a call-to-action, if relevant.
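If you draft in a text editor or script your content workflow, the template above can be sketched as a small data structure. This is only an illustration: the section names come from the template, while `TEMPLATE` and `build_outline` are hypothetical names, and the sketch renumbers sections when you skip optional ones.

```python
# Section names come from the template above; the True/False flag marks
# whether a section is required. Everything else here is illustrative.
TEMPLATE = [
    ("Overview", True),
    ("Materials Needed", True),
    ("Step-by-Step Instructions", True),
    ("Tips", False),
    ("Troubleshooting/FAQs", False),
    ("Additional Resources", False),
    ("Conclusion", True),
]


def build_outline(title, include_optional=()):
    """Return outline lines, skipping optional sections that were not requested."""
    lines = [title]
    number = 1
    for name, required in TEMPLATE:
        if required or name in include_optional:
            lines.append(f"Section {number}: {name}")
            number += 1
    return lines


if __name__ == "__main__":
    # Example: a short guide that only adds the optional "Tips" section.
    for line in build_outline("How to Make an Omelette", include_optional={"Tips"}):
        print(line)
```

Starting from a skeleton like this makes it harder to forget a required section, and you can still reorder or rename sections once you begin writing.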

How-to Guide Creator

If the process you’re covering is web-based, HubSpot’s Guide Creator takes all the busy work out of creating guides.

Whether your guides are for customers, prospects, or colleagues, all you have to do is install the free Chrome extension, click “start capturing,” and go through your process. The tool will automatically take screenshots and document the steps you take.

When you hit “finish capturing,” you will be taken to a page where you can edit the instructions and publish the guide.

Once you're ready to start writing your how-to guide, you might wonder if your tone or style should differ from other kinds of writing.

In short: Yes, it should.

Here are some more tips and best practices to keep in mind when writing a how-to guide:

1. Open with an overview.

A great how-to guide begins with a clear overview or description of what the audience can expect from it. This overview should include:

- A quick summary of your guide

- What your audience will need to use it

- What they'll achieve once they follow the directions

- Why it's worth doing

Pro tip: It's usually easier to write the first paragraph of any piece of content last — including how-to guides. This way, all the details are complete and you just need a few quick attention-grabbing sentences to attract your readers.

Check out this post if you need help writing introductions.

2. Start each step with a verb.

Verbs put your instructions in motion. These active words can help your readers visualize themselves doing the task as you teach them how to do it. They are also direct and require less thought by the reader.

Consider, for example, “Write a company background” versus “Your RFP should start with a brief background on your company.”

The first is very clear about what you need to do. The second takes a little longer to digest.

As you write, avoid passive constructions like the examples below:

- “Flour and water were stirred together.”

- “The page tab has been opened, so we can click the box at the top.”

Rather, go for active alternatives:

- “Stir together flour and water.”

- “Open the page tab and click the box at the top.”

If you often slip into passive voice as you write, use a grammar-checking tool to catch and fix your errors.

3. Show examples all the way.

You can make your instructions clearer if you include images or examples.

Of course, you want to use sensory details and action to create a picture in your readers’ heads as you write your how-to guide. But from there, pair images with the written text so your audience doesn’t have to guess or interpret what you meant.

We regularly include examples in articles here at HubSpot. You can even find several in this article.

4. Keep the steps simple and concise.

Simplicity will make your guide easier to understand and execute, but how do you ensure you’re not overcomplicating things?

A good practice is to think of your process in stages (the beginning, the middle, and the end) and then define the steps within those. This will help you get specific and be wary of combining multiple actions into one step.

Pro tip: Don’t hold back in your first draft. Write out every detail, then go back and scan for extra content that could be distracting and can be removed.

Also, aim to remove extra words. Writing concise copy takes a lot of practice. If your guide is longer than it needs to be and you're not sure how to cut the extra text, these tips can help:

- Cut “the” and “that” when it makes sense

- Limit adverbs and adjectives like very, really, and literally

- Replace three- and four-syllable words with shorter alternatives
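If you'd rather not hunt for filler words by eye, a few lines of code can flag them for you. The sketch below is a minimal illustration, not a full editing tool: it only looks for the three words named in the tips above, and `FILLER_WORDS` and `flag_filler_words` are hypothetical names you can change or extend.

```python
import re

# The three filler words named in the tips above; extend this set to
# match your own style guide.
FILLER_WORDS = {"very", "really", "literally"}


def flag_filler_words(text):
    """Return (word, position) pairs for each filler word, in reading order."""
    hits = []
    for match in re.finditer(r"[A-Za-z']+", text):
        word = match.group().lower()
        if word in FILLER_WORDS:
            hits.append((word, match.start()))
    return hits


if __name__ == "__main__":
    draft = "This step is very easy, and it really helps."
    for word, position in flag_filler_words(draft):
        print(f"character {position}: {word}")
```

A flagged word isn't always wrong, so treat the output as a list of places to double-check rather than a list of required cuts.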

5. Make your how-to guide easy to skim.

In my experience, many people skim guides. This means they quickly skip text that includes stories, data, or more extended details and look for the actions.

Catering to this habit can create a more delightful user experience.

People tend to pay attention to introductions, summaries, or a paragraph’s first and last sentences, so focus on these areas. Also, use bolded, underlined, or highlighted text to guide their eye to key information.

Numbered lists, headers, and bullet points can also help summarize text, making your guide easier and faster to digest.

6. Write for a specific skill level.

If you're a subject expert, you might combine beginner and advanced terms in your writing without realizing it. This creates inconsistency that can confuse your audience and make your guide tough to understand.

If this concerns you, scan your how-to guide for jargon as you proofread. You can also ask proofreaders from other niches and industries to check that your vocabulary is appropriate for the target audience throughout.

7. Tell an exciting story.

The best how-to guides are more than just practical; they are fun to read. To keep your guide engaging, tell a story. Ensure you have a clear message throughout the guide, add personal experiences, and use conflict to add interest.

For example, say you’re writing a how-to guide about adding software to a computer. A potential point of conflict could be not having enough disk space to finish installing.

That doesn’t sound like a big deal, but if you’ve ever experienced it, you know how frustrating it can be. If you can paint that picture vividly for your audience, they'll be more likely to follow your directions.

8. Write with empathy.

People who turn to how-to guides are looking for help. So, be sure to acknowledge that you understand where they are coming from. Doing this will help you resonate with your audience and build trust.

You’re saying you know it can be frustrating when learning or refining a new skill and you are there to help.

For example, in the how-to guide “How to Plan Your Facebook Ads Budget (And Make The Biggest Impact),” HubSpot Principal Marketing Manager Ramona Sukhraj immediately connects with her audience, saying, “If budgeting stresses you out, we have a lot in common, my friend.”

9. Stay positive.

Anyone seeking a how-to guide is trying to expand their knowledge about the world. That seems straightforward, but it’s also a considerable risk.

Many people stop trying new things because they don’t want to look foolish. So, as you write, remember that learning is exciting, but it's often uncomfortable, too.

Keeping your writing positive can help make your readers feel more at ease as they venture into the unknown. Uplifting stories, word choices, and tone can make complex instructions seem more manageable. They can inspire and motivate.

You can also add some positivity by softening negative information. For example, instead of saying, “You’ll probably fail at this the first time,” try saying something like, “This skill may take some practice before you’re an expert.”

How-to Guide Examples

B2B How-to Guide Examples

“How to Write the Perfect 90-Day Plan”

Why I like this example: This B2B how-to guide offers important contextual details to the 90-day plan, including “What is a 90-day plan?” and “What should be included in a 90-day plan?” The piece is well-researched and written with empathy.

The guide provides a downloadable 90-day plan PDF so readers can immediately use Atlassian's program with their team.

Takeaway: Consider what ebooks, PDFs, charts, Canva designs, or Google Sheets you can create internally and offer to readers to download. Readers will appreciate the option to apply what they've learned immediately.

Why I like this example: Partnering with complementary businesses and services can add depth and perspective to your how-to guide. This example is comprehensive and packed with valuable resources from HubSpot and Brandfolder. It also uses relevant data to highlight key sections.

Takeaway: Look for creative ways to add value to your how-to guide, especially when writing about a topic your audience already knows.

“How To Drive More High-Quality Leads With Google Ads”

Why I like this example: When creating guides for more advanced topics, creating a foundation for your readers is essential.

This guide begins with a detailed introduction to the case that cites current statistics and trends. Then, it covers relevant topics at each stage in the buyer journey. Next, it offers a helpful checklist, links, and resources to implement this learning.

Takeaway: A clear structure makes challenging topics easier to understand. So, consider the ideal start and end points for your expert readers when writing about specific and advanced topics.

B2C How-to Guide Examples

“How to Master Lead Generation in HubSpot”

Why I like this example: This video takes a popular topic relevant to our buyers, lead generation, and delivers all of the foundational knowledge someone needs to get started with it.

It then uses the video format to show viewers exactly how to complete common lead generation tasks, like creating landing pages, within the HubSpot tool.

Takeaway: Software tutorials are one of the best use cases for video how-to guides.

“How to Become a Freelancer”

Why I like this example: This guide does an excellent job of providing relevant links and data to create a comprehensive overview of what freelancing is. Additionally, the post uses action verbs to inspire the reader.

Under “How to Start a Freelance Business,” you'll see tips such as “Do Your Homework,” “Create a Brand,” and “Plan Ahead.” The language used in this post goes a long way toward encouraging readers to get started quickly.

Takeaway: Use action verbs and concise language to keep a reader engaged. Start with a verb instead of a noun when listing out steps.

“How to Start a Successful Blog”

Why I like this example: This is an excellent guide for beginners because it includes resources that offer multiple ways to take in the material. This guide is packed with checklists, links to courses, templates, and tools that can help anyone start a blog.

Takeaway: There are many different learning styles. The more choices you give your students to take in the information you share, the more likely they will get value from your guide.

“How to Be a Leader”

Why I like this example: This example offers a personal perspective on leadership that goes beyond typical advice.

It also uses creative headers like “Beyond the Paycheck: What We Wish For,” “Doubtliers: Dangers Learning From the Exceptional,” and “Great companies don’t always make great decisions” to engage the reader in the content.

Takeaway: Teach broad how-to topics from a unique perspective and add interest with relevant stories.

Lifestyle How-to Guide Examples

“11 Ways to Quickly Stop Stress in Your Life”

Why I like this example: I clicked on this post expecting a few quick, easy tips for stopping stress. Instead, I was engrossed in the first section of the post, “The Effects of Stress in Your Life.”

While I previously mentioned the importance of starting with a quick answer to the searcher's how-to question, there are exceptions to that rule. In this case, readers must understand the why before the how.

Takeaway: Play around with structure. Consider what your readers need to know for the rest of the post to matter to them.

For instance, you might start with sections like “What is XYZ?” and “Why XYZ matters” before diving into “How to do XYZ.” This way, your readers are fully invested in discovering how these tips can improve their lives in some small (or big) way.

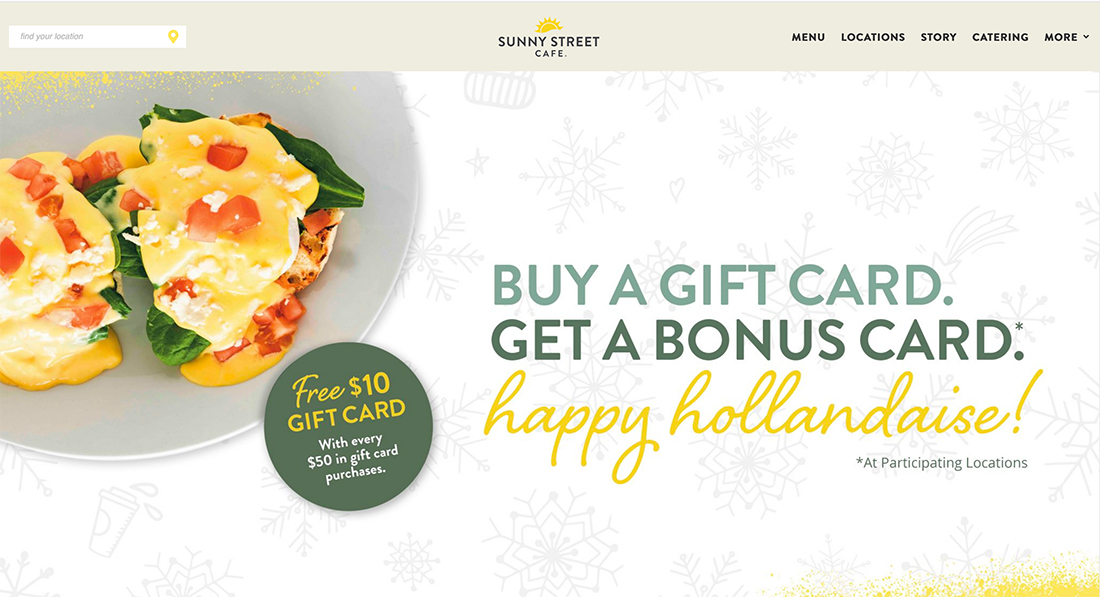

“How To Make Honey Pie”

Why I like this example: This guide is neatly organized so readers can quickly determine a) what makes this recipe unique, b) the ingredients they'll need, and c) how to make it.

If a reader already knows the ingredients necessary for honey pie, they can click “Jump to Recipe” immediately.

Takeaway: As you're structuring your how-to guide, consider how best to organize it so readers can jump straight to what they need.

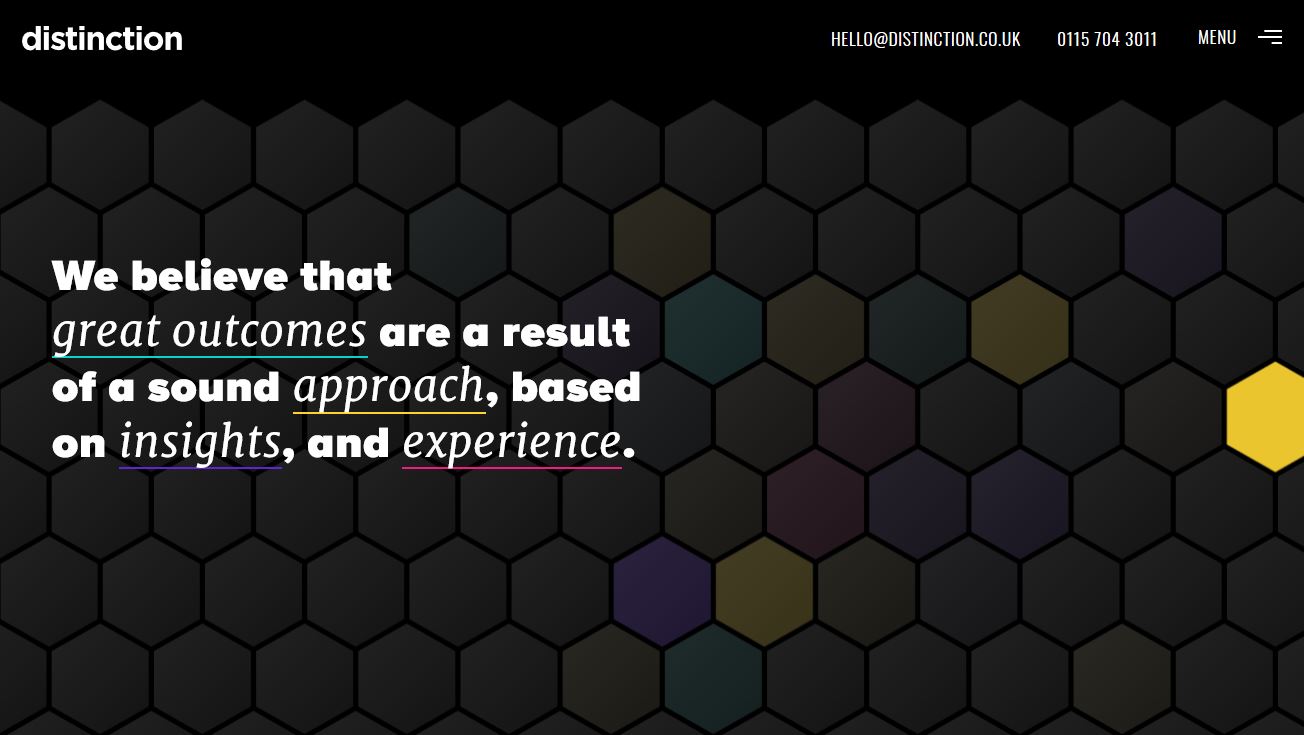

“How to Be More Productive”

Why I like this example: How-to guides are more than written instructions. Useful images, break-out pages highlighting external links, and infographics are potent additions to this example.

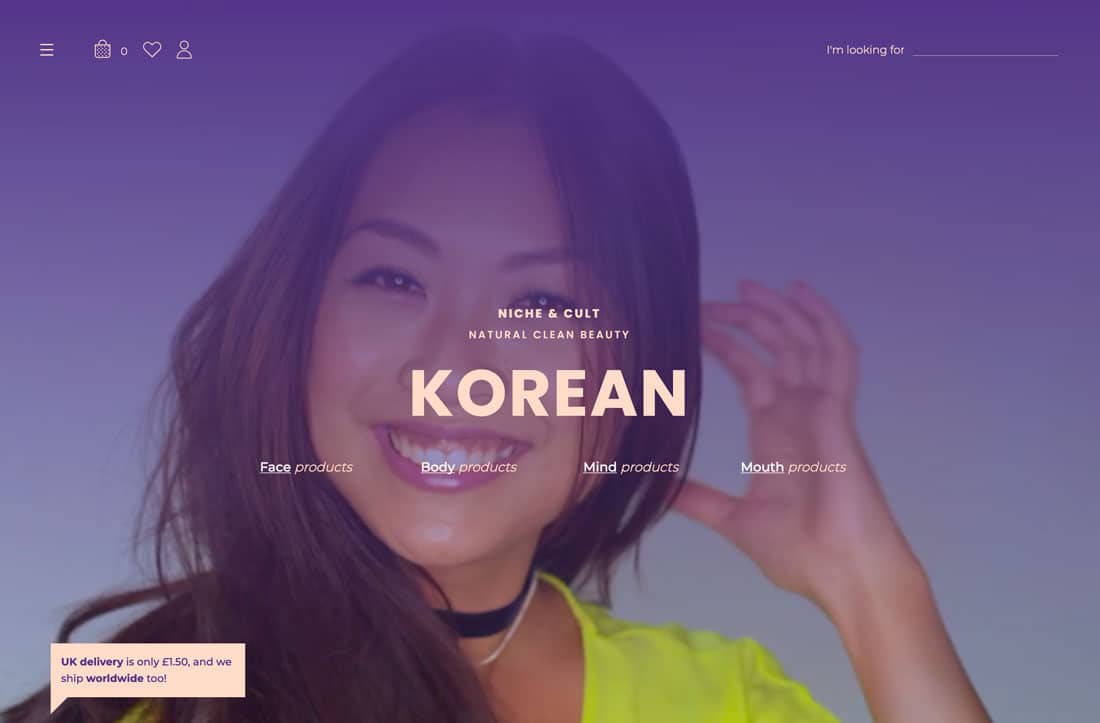

Its outstanding balance of features and white space makes this guide easy on the eyes and quick to skim.

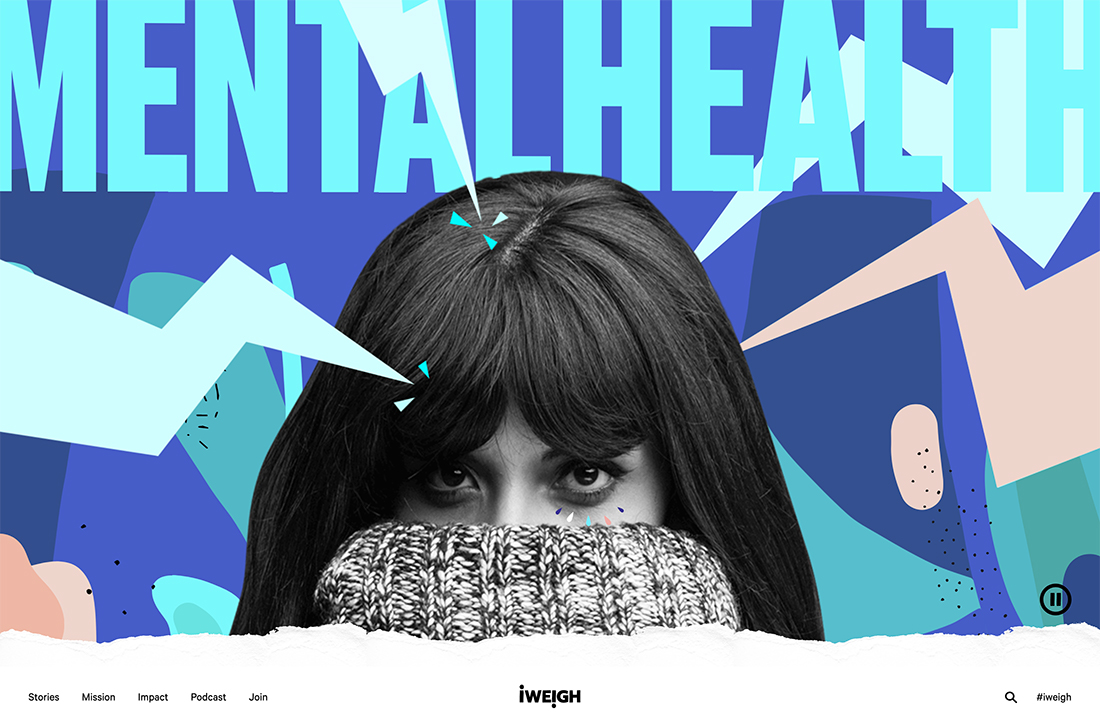

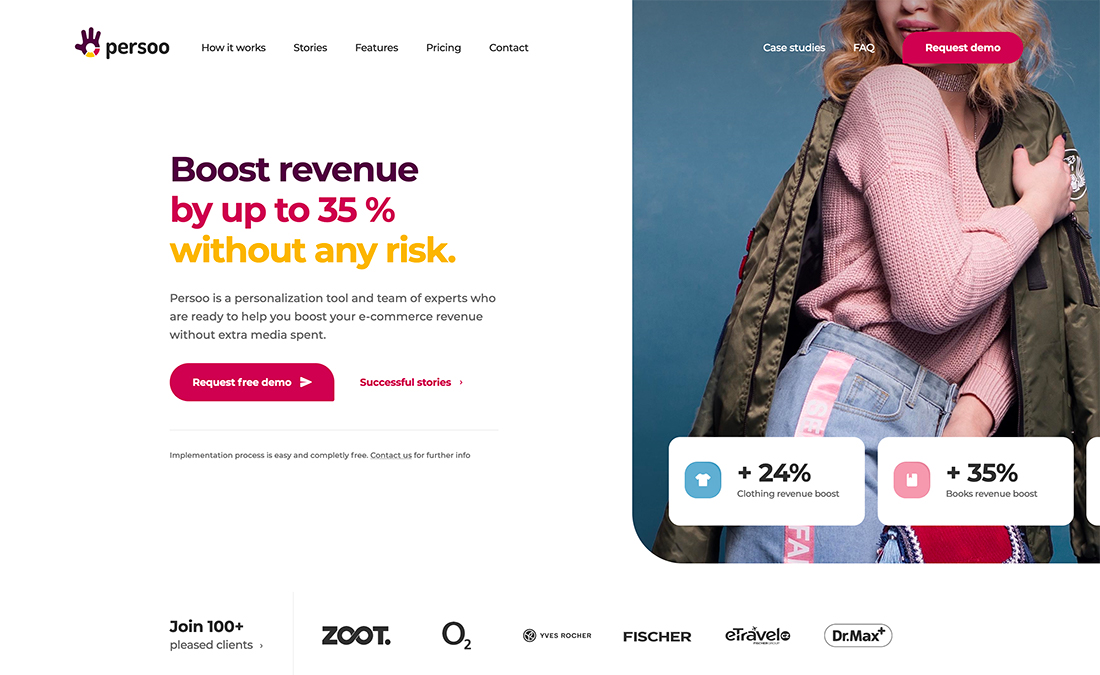

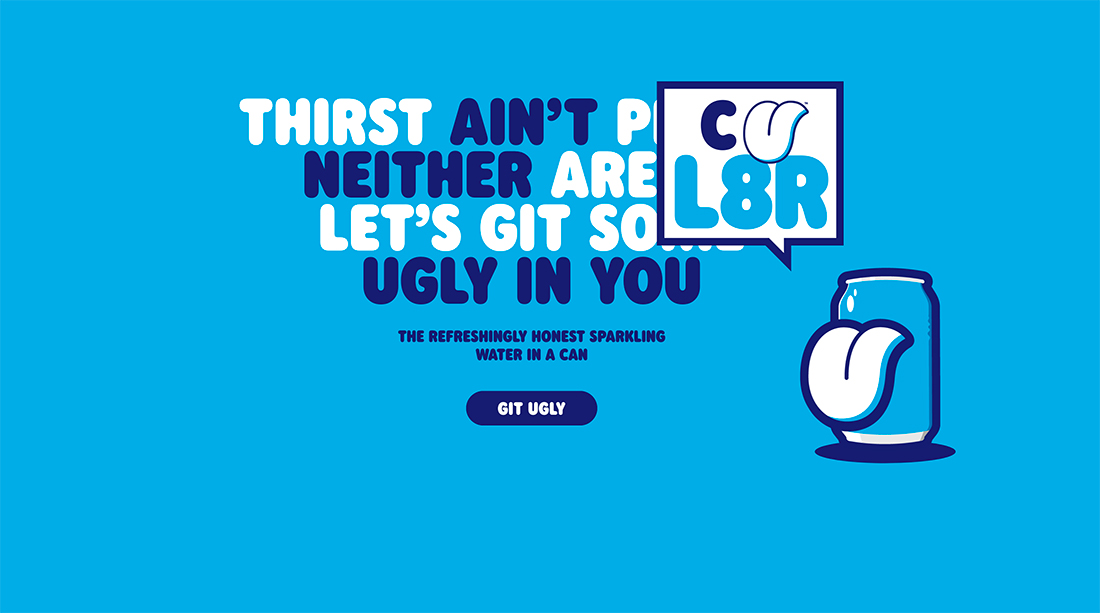

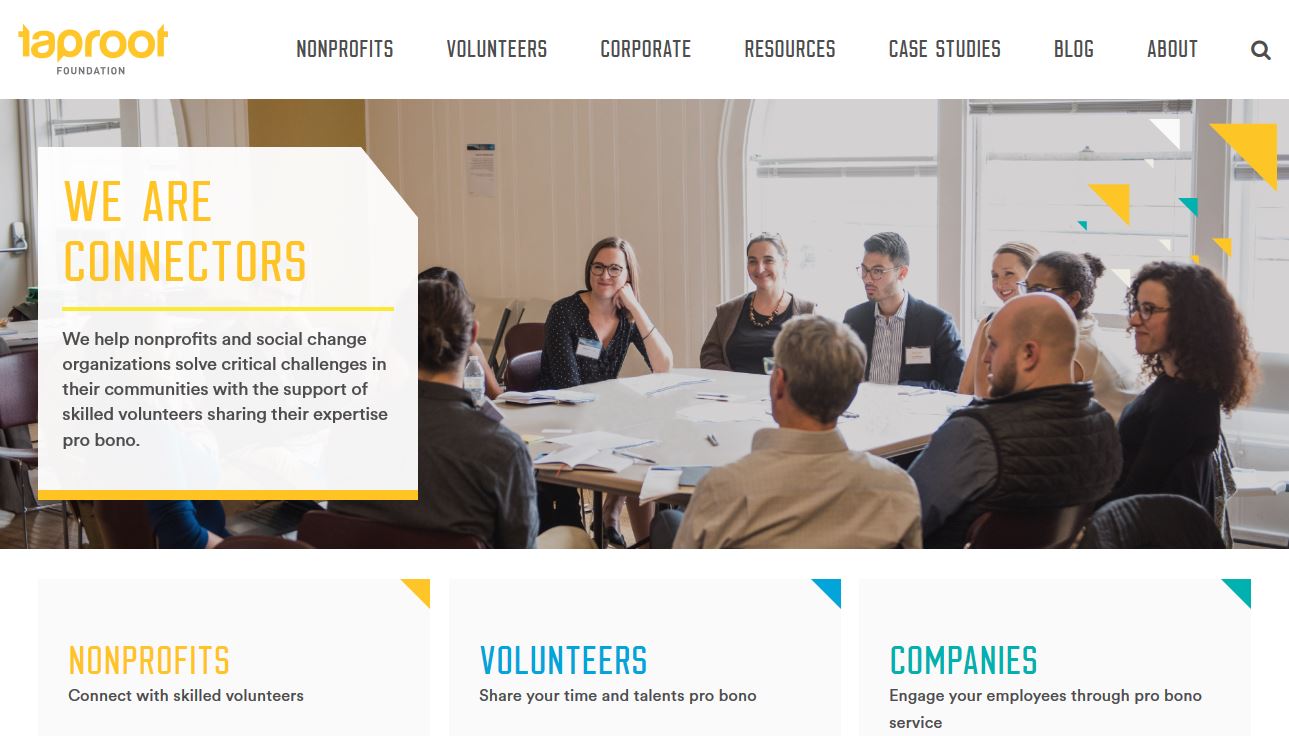

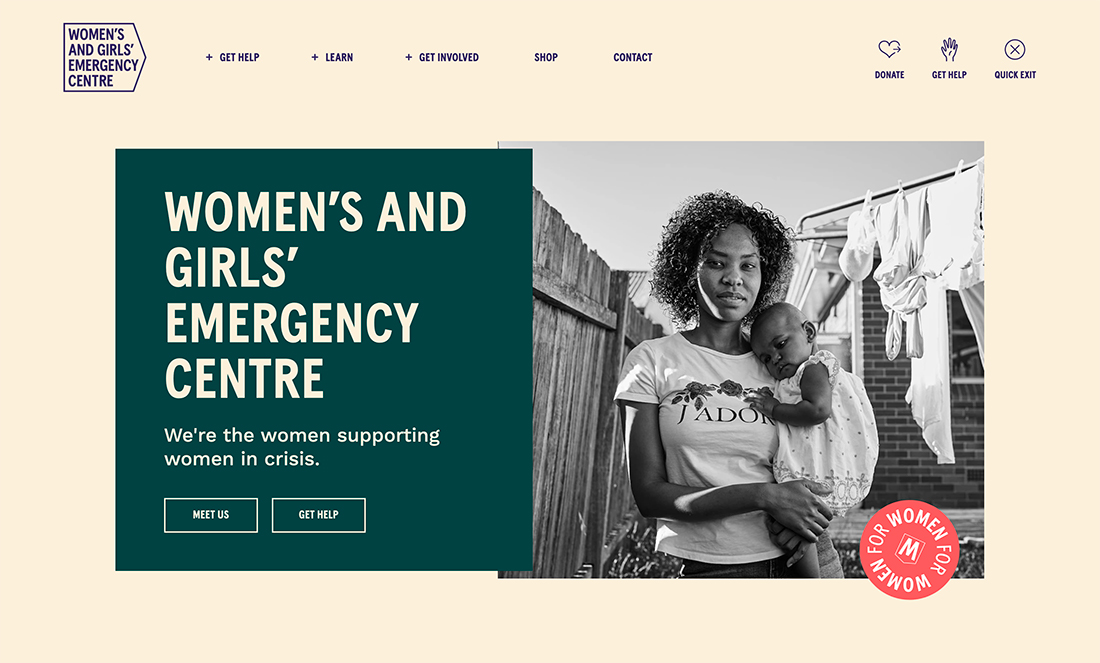

Takeaway: It doesn't matter how great your how-to guide writing is if it isn't well-designed. Take a look at how-to guide examples to get inspiration for the look and feel of your guide design.

Create a how-to guide worth sharing.

The right how-to guide can change someone's life. It can also significantly boost your business, improve the customer experience, and more.

Creating a great step-by-step guide takes some planning, research, and know-how. Your experience can help someone make a difference; just create directions that make your knowledge worth sharing.

Editor's note: This post was originally published in March 2021. It has been updated for freshness and accuracy.
