
RAG with LangChain and Hugging Face Serverless Inference API

This article explains how to create a retrieval augmented generation (RAG) chatbot in LangChain using open-source models from Hugging Face serverless inference API.

You will see how to call large language models (LLMs) and embedding models from Hugging Face serverless inference API using LangChain. You will also see how to employ these LLMs and embedding models to create LangChain chatbots with and without memory.

So, let's begin without further ado.

We will first install the Python libraries required to run the code in this article.

```
!pip install langchain
!pip install langchain_community
!pip install pypdf
!pip install faiss-gpu
!pip install langchain-huggingface
!pip install --upgrade --quiet huggingface_hub
```

The script below imports the required libraries into your Python application.

Since we will be accessing the Hugging Face serverless inference API, you need a Hugging Face access token.

Note: The code in this article was run in Google Colab, so I used the userdata.get() method to read the token from Colab's secrets. Use the method that is appropriate for your environment.

```python
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.output_parsers import StrOutputParser
from langchain_community.document_loaders import PyPDFLoader
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_huggingface import HuggingFaceEndpoint, ChatHuggingFace
from langchain_community.embeddings import (
    HuggingFaceInferenceAPIEmbeddings,
)
from langchain_community.vectorstores import FAISS
from langchain.chains.combine_documents import create_stuff_documents_chain
from langchain.chains import create_retrieval_chain
from langchain_core.documents import Document
from langchain.chains import create_history_aware_retriever
from langchain_core.prompts import MessagesPlaceholder
from langchain_core.messages import HumanMessage, AIMessage
from langchain_core.runnables.history import RunnableWithMessageHistory
from langchain.memory import ChatMessageHistory
import os
from google.colab import userdata

# Read the Hugging Face access token stored as a Colab secret
hf_token = userdata.get('HF_API_TOKEN')
```
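If you are not running in Colab, a common alternative is to read the token from an environment variable. The sketch below is one way to cover both cases. The helper name get_hf_token is hypothetical, and it assumes the token is stored under HF_API_TOKEN, the same name used for the Colab secret above.

```python
import os


def get_hf_token(var_name: str = "HF_API_TOKEN") -> str:
    """Hypothetical helper: try Colab secrets first, then fall back to os.environ."""
    try:
        from google.colab import userdata  # only importable inside Colab
        token = userdata.get(var_name)
        if token:
            return token
    except Exception:
        # Not running in Colab (ImportError), or the secret is missing / not shared
        pass

    token = os.environ.get(var_name)
    if not token:
        raise RuntimeError(f"Set the {var_name} environment variable to your Hugging Face token.")
    return token
```

Outside Colab you would then write hf_token = get_hf_token() after exporting the variable in your shell.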

Let's first see a basic example of how to call a text generation model from Hugging Face serverless inference API.

Create a HuggingFaceEndpoint object and pass it the model's repo ID and your Hugging Face access token. The temperature and max_new_tokens parameters are optional.
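For intuition, these parameters end up in an HTTP request to the serverless inference API. The sketch below only builds such a request with the standard library and never sends it. It assumes the classic https://api-inference.huggingface.co/models/{repo_id} endpoint and its text-generation JSON payload (inputs plus parameters); HuggingFaceEndpoint builds and sends the real request for you, and the exact URL and payload it uses can differ between library versions. The function name build_generation_request is hypothetical.

```python
import json
import urllib.request

# Assumed URL format of the classic serverless inference API
API_URL_TEMPLATE = "https://api-inference.huggingface.co/models/{repo_id}"


def build_generation_request(repo_id, token, prompt, temperature=0.5, max_new_tokens=1024):
    """Build (but do not send) a text-generation request for the serverless API."""
    payload = {
        "inputs": prompt,
        "parameters": {"temperature": temperature, "max_new_tokens": max_new_tokens},
    }
    return urllib.request.Request(
        API_URL_TEMPLATE.format(repo_id=repo_id),
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # the access token travels as a bearer token
            "Content-Type": "application/json",
        },
        method="POST",
    )


# "hf_xxx" is a placeholder, not a real token
req = build_generation_request("Qwen/Qwen2.5-72B-Instruct", "hf_xxx", "Hello!")
```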

We will use the Qwen2.5-72B-Instruct model for response generation.

Next, pass the HuggingFaceEndpoint object to the ChatHuggingFace constructor to create a chat model object.

```python
repo_id = "Qwen/Qwen2.5-72B-Instruct"

llm = HuggingFaceEndpoint(
    repo_id=repo_id,
    temperature=0.5,
    huggingfacehub_api_token=hf_token,
    max_new_tokens=1024
)

# Wrap the endpoint so it behaves like a LangChain chat model
llm = ChatHuggingFace(llm=llm)
```

You can use the LLM object defined in the above script like any other LangChain chat model.

Let's see an example. In the following script, we use ChatPromptTemplate to create a prompt that asks the model to answer a user's question.

Next, we chain the prompt with the LLM and the output parser object.

Finally, we invoke the chain with a question to generate a final LLM response.
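The | operator here is LangChain Expression Language (LCEL) syntax: each component's output becomes the next component's input. The toy sketch below mimics that idea in plain Python. Step, fake_prompt, fake_llm, and fake_parser are hypothetical stand-ins, not LangChain classes, and real LangChain runnables additionally support batching, streaming, and async calls.

```python
from typing import Any, Callable


class Step:
    """Hypothetical stand-in for a LangChain runnable: wraps one function."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # a | b  ->  a new step that runs a, then feeds its output to b
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x: Any) -> Any:
        return self.fn(x)


# Stand-ins for the prompt template, the chat model, and the output parser
fake_prompt = Step(lambda d: f"Answer the following question.\nQuestion: {d['question']}")
fake_llm = Step(lambda text: {"content": f"(model reply to: {text.splitlines()[-1]})"})
fake_parser = Step(lambda msg: msg["content"])

chain = fake_prompt | fake_llm | fake_parser
print(chain.invoke({"question": "Who won the 2019 Cricket World Cup?"}))
# prints: (model reply to: Question: Who won the 2019 Cricket World Cup?)
```

The parser step is what turns a message-like object into a plain string, which is the role StrOutputParser plays in the real chain.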

```python
question = "Who won the Cricket World Cup 2019, who was the captain?"

template = """Answer the following question to the best of your knowledge.

Question: {question}
"""

prompt = ChatPromptTemplate.from_template(template)
output_parser = StrOutputParser()

# prompt -> chat model -> plain string
llm_chain = prompt | llm | output_parser
llm_chain.invoke({"question": question})
```

Output:

The Cricket World Cup 2019 was won by England. The captain of the England team was Eoin Morgan.

You can see that once you have created an LLM using the HuggingFaceEndpoint and ChatHuggingFace classes, the rest is a standard LangChain response generation process.

Let's now create a simple chatbot that answers users' questions about a PDF document.

This section shows how to create a LangChain chatbot using LLMs and embedding models from the Hugging Face serverless inference API.

We will create a chatbot that answers questions related to Google's 2024 Q1 earnings report.

The following script imports the PDF document and splits it into document chunks.

```python
loader = PyPDFLoader("https://abc.xyz/assets/91/b3/3f9213d14ce3ae27e1038e01a0e0/2024q1-alphabet-earnings-release-pdf.pdf")
docs = loader.load_and_split()
```

Next, we will create vector embeddings for the document chunks and store them in a vector store. User queries will also be converted into vector embeddings.

Finally, the chunks whose embeddings are most similar to the query embedding are retrieved and passed to the LLM to generate the final response.
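The retrieval step boils down to a nearest-neighbour search. The sketch below ranks a few chunks by cosine similarity to a query vector using only the standard library. The three-dimensional vectors are made up for illustration (all-MiniLM-L6-v2 actually produces 384-dimensional embeddings), and FAISS uses optimized indexes rather than this brute-force loop; LangChain's FAISS store also ranks by Euclidean (L2) distance by default rather than by cosine similarity.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, chunk_vecs, k=2):
    """Return the indices of the k chunks most similar to the query."""
    scores = [(cosine_similarity(query_vec, v), i) for i, v in enumerate(chunk_vecs)]
    scores.sort(reverse=True)  # highest similarity first
    return [i for _, i in scores[:k]]


# Toy "embeddings" for three document chunks (made-up numbers)
chunks = ["YouTube ads revenue ...", "Google Cloud revenue ...", "Share repurchases ..."]
chunk_vecs = [[0.9, 0.1, 0.0], [0.2, 0.9, 0.1], [0.0, 0.1, 0.9]]
query_vec = [0.8, 0.2, 0.0]  # pretend embedding of a question about YouTube ads

print([chunks[i] for i in top_k(query_vec, chunk_vecs, k=1)])
```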

We will use the all-MiniLM-L6-v2 embedding model to generate vector embeddings. You can access this model for free from the Hugging Face serverless inference API using the HuggingFaceInferenceAPIEmbeddings class.

The following script splits the loaded pages into chunks and generates vector embeddings for them using the all-MiniLM-L6-v2 model. The embeddings are stored in a FAISS vector store.

```python
embeddings = HuggingFaceInferenceAPIEmbeddings(
    api_key=hf_token,
    model_name="sentence-transformers/all-MiniLM-l6-v2"
)

# Split the pages into chunks (default chunk size and overlap)
text_splitter = RecursiveCharacterTextSplitter()
documents = text_splitter.split_documents(docs)

# Embed every chunk and index the vectors in FAISS
vector = FAISS.from_documents(documents, embeddings)
```
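RecursiveCharacterTextSplitter() is called here with its defaults, a chunk_size of 4,000 characters and a chunk_overlap of 200. It tries to split on paragraph breaks first, then line breaks, then spaces, so chunks tend to end at natural boundaries. The sketch below shows only the simpler idea underneath: fixed-size windows that overlap, so content near a chunk boundary appears in both neighbouring chunks. split_with_overlap is a hypothetical, simplified stand-in, not LangChain's algorithm.

```python
def split_with_overlap(text, chunk_size=4000, chunk_overlap=200):
    """Split text into fixed-size character windows that overlap.

    Simplified stand-in for RecursiveCharacterTextSplitter: it ignores
    paragraph and sentence boundaries and cuts purely by character count.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=1))
# prints: ['abcd', 'defg', 'ghij']
```

Each chunk repeats the last character of the previous one, which is what chunk_overlap=1 means here.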

We are now ready to create our chatbot using LangChain.

We will first create a chatbot without memory. The first step is to create a prompt that uses context to answer user questions.

Next, we will create two chains: a stuff document chain and a retrieval chain. The stuff document chain will link the prompt with the LLM.

The retrieval chain will be responsible for retrieving vector store documents relevant to the user query and passing them to the context variable in the prompt.

```python
prompt = ChatPromptTemplate.from_template("""Answer the following question based only on the provided context:

Question: {input}

Context: {context}
""")

# Inserts the retrieved documents into {context} and calls the LLM
document_chain = create_stuff_documents_chain(llm, prompt)

# Fetches relevant chunks from FAISS and passes them to document_chain
retriever = vector.as_retriever()
retrieval_chain = create_retrieval_chain(retriever, document_chain)
```
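Conceptually, the retrieval chain fetches relevant chunks and the stuff documents chain pastes ("stuffs") all of them into the {context} slot of the prompt before calling the LLM. The plain-Python sketch below mirrors that data flow. fake_retriever and fake_llm are hypothetical stand-ins (the retriever does naive keyword matching instead of vector search), while the "\n\n" document separator and the input, context, and answer keys follow LangChain's defaults as we understand them.

```python
PROMPT_TEMPLATE = (
    "Answer the following question based only on the provided context:\n\n"
    "Question: {input}\n\n"
    "Context: {context}\n"
)


def fake_retriever(query):
    """Hypothetical stand-in for vector.as_retriever(): naive keyword overlap."""
    corpus = [
        "YouTube ads revenue was $8,090 million in Q1 2024.",
        "YouTube ads revenue was $6,693 million in Q1 2023.",
        "Unrelated chunk about another topic.",
    ]
    keywords = set(query.lower().split())
    return [c for c in corpus if keywords & set(c.lower().split())]


def fake_llm(prompt_text):
    """Hypothetical stand-in for the chat model: just reports the prompt size."""
    return f"(answer generated from a {len(prompt_text)}-character prompt)"


def stuff_documents(docs, separator="\n\n"):
    # The "stuff" strategy: concatenate every retrieved chunk into one string
    return separator.join(docs)


def retrieval_chain_invoke(inputs):
    docs = fake_retriever(inputs["input"])
    prompt_text = PROMPT_TEMPLATE.format(input=inputs["input"], context=stuff_documents(docs))
    # Same keys as the dictionary returned by create_retrieval_chain
    return {"input": inputs["input"], "context": docs, "answer": fake_llm(prompt_text)}


result = retrieval_chain_invoke({"input": "How much YouTube ads revenue"})
print(result["context"])
```

Because every retrieved chunk goes into a single prompt, the stuff strategy works best when the retrieved text fits comfortably in the model's context window.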

Finally, you can invoke the retrieval chain, ask it questions related to the Google earnings report, and get relevant responses.

For example, in the following script, we ask our chatbot about YouTube's ad revenue for the first quarters of 2023 and 2024. The model returns the correct figures, which you can verify against the PDF document.

```python
def generate_response(query):
    response = retrieval_chain.invoke({"input": query})
    # Keep only the text after the last "Assistant:" marker, if present
    print(response["answer"].rpartition("Assistant:")[-1].strip())

query = "What is the revenue from YouTube ads for the 1st Quarters of 2023 and 2024"
generate_response(query)
```

Output:

Based on the provided context, the revenue from YouTube ads for the first quarter of 2023 was $6,693 million, and for the first quarter of 2024, it was $8,090 million.

Next, we will add memory to our chatbot so it can remember previous interactions.

To create a LangChain chatbot with memory, we need a history-aware retriever chain in addition to the stuff documents and retrieval chains, as explained in the official LangChain documentation.

The following script defines the prompt for the history-aware retriever chain. This chain creates a standalone query using the new user query and the chat history. This new standalone query is then passed to the retrieval chain as we did before.
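In LangChain's implementation, create_history_aware_retriever only rewrites the question when there is history: if chat_history is empty, the raw input goes straight to the retriever; otherwise the LLM first produces a standalone question. The sketch below reproduces that branching in plain Python. Messages are represented as hypothetical (role, text) tuples instead of HumanMessage/AIMessage objects, and rewrite_question is a hypothetical stand-in for the LLM call.

```python
def rewrite_question(chat_history, question):
    """Hypothetical stand-in for the LLM rewrite step.

    A real model would write a fluent standalone question; this toy version
    just prefixes the previous user question so the retriever sees the topic.
    """
    previous = [text for role, text in chat_history if role == "user"]
    return f"{previous[-1]} {question}" if previous else question


def query_for_retriever(inputs):
    """Pick the text that will be embedded and searched in the vector store."""
    if not inputs.get("chat_history"):
        return inputs["input"]  # first turn: no rewrite needed
    return rewrite_question(inputs["chat_history"], inputs["input"])


history = [
    ("user", "What was YouTube ads revenue in Q1 2024?"),
    ("assistant", "It was $8,090 million."),
]
print(query_for_retriever({"chat_history": [], "input": "What about Q1 2023?"}))
print(query_for_retriever({"chat_history": history, "input": "What about Q1 2023?"}))
```

Without the rewrite, a follow-up such as "What about Q1 2023?" carries no mention of YouTube, so the vector search would have little to match on.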

```python
contextualize_q_system_prompt = (
    "Given a chat history and the latest user question "
    "which might reference context in the chat history, "
    "formulate a standalone question which can be understood "
    "without the chat history. Do NOT answer the question, "
    "just reformulate it if needed and otherwise return it as is."
)

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", contextualize_q_system_prompt),
        MessagesPlaceholder("chat_history"),
        ("user", "{input}"),
    ]
)

# Rewrites the query using the chat history, then retrieves documents
history_retriever_chain = create_history_aware_retriever(llm, retriever, prompt)
```

The prompt for the stuff documents chain now also includes a message placeholder for the chat history.

```python
prompt = ChatPromptTemplate.from_messages([
    ("system", "Answer the user's questions based on the below context:\n\n{context}"),
    MessagesPlaceholder(variable_name="chat_history"),
    ("user", "{input}")
])

document_chain = create_stuff_documents_chain(llm, prompt)
```

Finally, the retrieval chain will combine the history retriever chain and the stuff document chain to create the final response generation chain.

```python
retrieval_chain = create_retrieval_chain(history_retriever_chain, document_chain)
```

Next, we will initialize an empty list that stores our chat messages. After each call to the retrieval chain, we extend this history with the user query and the model response.

The script below defines the generate_response_with_memory() function that generates chatbot responses with memory.

```python
chat_history = []

def generate_response_with_memory(query):
    response = retrieval_chain.invoke({
        "chat_history": chat_history,
        "input": query
    })
    response = response["answer"].rpartition("Assistant:")[-1].strip()

    # Record this turn so later questions can refer back to it
    chat_history.extend([HumanMessage(content=query),
                         AIMessage(content=response)])
    return response
```
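Both helper functions post-process the answer with str.rpartition("Assistant:"), presumably because the raw answer can contain an echoed role label. rpartition splits on the last occurrence of the marker, and taking element [-1] keeps only the text after it, or the whole string when the marker is absent. The sketch below isolates that behaviour; clean_answer is a hypothetical name.

```python
def clean_answer(raw: str, marker: str = "Assistant:") -> str:
    """Keep only the text after the last marker; return the whole string if absent."""
    # rpartition returns (head, sep, tail); when the marker is missing,
    # head and sep are empty strings and tail is the original string.
    return raw.rpartition(marker)[-1].strip()


print(clean_answer("Human: Hi\nAssistant: Revenue was $8,090 million."))
print(clean_answer("  Revenue was $8,090 million.  "))
```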

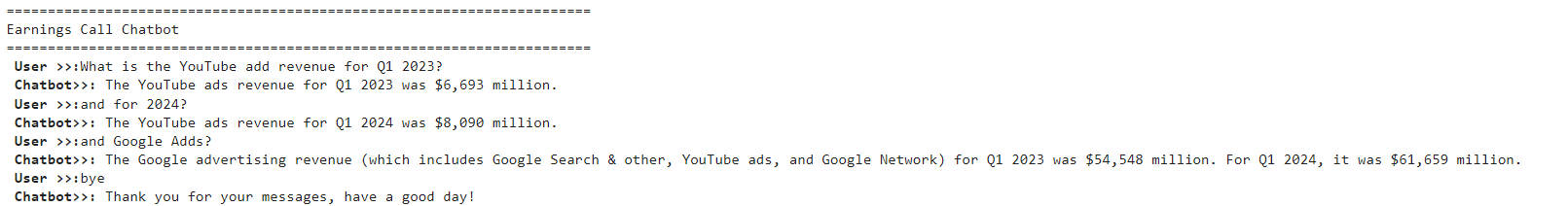

Finally, we can combine everything to create a simple console chatbot that answers user questions about Google's earnings report. The chatbot keeps answering questions until the user types "bye".

```python
print("=======================================================================")
print("Earnings Call Chatbot")
print("=======================================================================")

query = ""
while query != "bye":
    query = input("\033[1m User >>:\033[0m")

    if query == "bye":
        # Clear the conversation before exiting
        chat_history = []
        print("\033[1m Chatbot>>:\033[0m Thank you for your messages, have a good day!")
        break

    response = generate_response_with_memory(query)
    print(f"\033[1m Chatbot>>:\033[0m {response}")
```

Output:

From the above output, you can see that the model generates responses based on the chat history.

In this article, you saw how to use LangChain and the Hugging Face serverless inference API to create a document question-answering chatbot.

With Hugging Face's serverless inference API, you can call modern LLMs and embedding models without downloading or hosting them yourself. This eliminates the need for expensive GPUs and other hardware to run advanced LLMs.

I encourage you to use Hugging Face serverless inference API to develop your chatbot and LLM applications.