On July 18th, 2024, OpenAI released GPT-4o mini, their most cost-efficient small model. GPT-4o mini is around 60% cheaper than GPT-3.5 Turbo and around 97% cheaper than GPT-4o. As per OpenAI, GPT-4o mini outperforms GPT-3.5 Turbo on almost all benchmarks while being cheaper.

In this article, we will compare the cost, performance, and latency of GPT-4o mini with GPT-3.5 turbo and GPT-4o. We will perform a zero-shot tweet sentiment classification task to compare the models. By the end of this article, you will find out which of the three models is better for your use cases. So, let's begin without ado.

As a first step, we will install and import the required libraries.

Run the following script to install the OpenAI library.

!pip install openai

The following script imports the required libraries into your application.

import os

import time

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

from openai import OpenAI

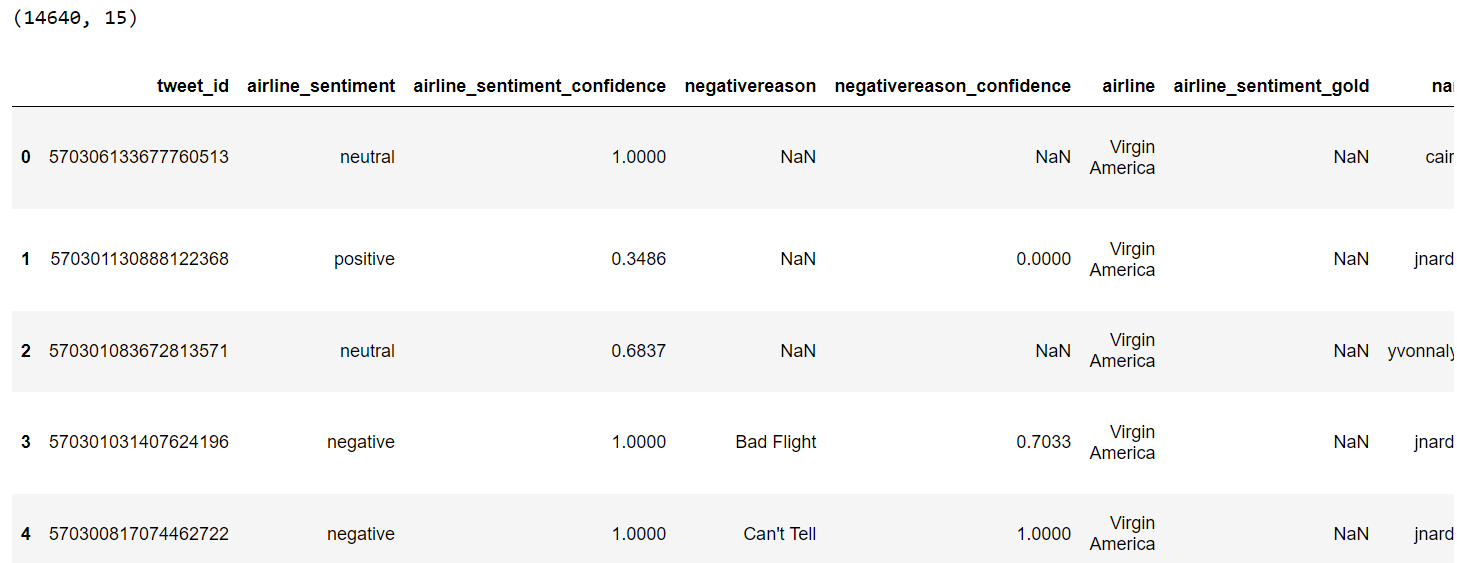

To compare the models, we will perform zero-shot classification on the Twitter US Airline Sentiment dataset, which you can download from kaggle.

The following script imports the dataset from a CSV file into a Pandas dataframe.

## Dataset download link

## https://www.kaggle.com/datasets/crowdflower/twitter-airline-sentiment?select=Tweets.csv

dataset = pd.read_csv(r"D:\Datasets\tweets.csv")

print(dataset.shape)

dataset.head()

Output:

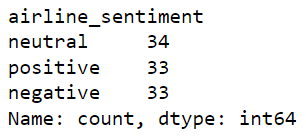

The dataset contains more than 14 thousand records. However, we will randomly select 100 records. Of these, 34, 33, and 33 will have neutral, positive, and negative sentiments, respectively.

The following script selects the 100 tweets.

# Remove rows where 'airline_sentiment' or 'text' are NaN

dataset = dataset.dropna(subset=['airline_sentiment', 'text'])

# Remove rows where 'airline_sentiment' or 'text' are empty strings

dataset = dataset[(dataset['airline_sentiment'].str.strip() != '') & (dataset['text'].str.strip() != '')]

# Filter the DataFrame for each sentiment

neutral_df = dataset[dataset['airline_sentiment'] == 'neutral']

positive_df = dataset[dataset['airline_sentiment'] == 'positive']

negative_df = dataset[dataset['airline_sentiment'] == 'negative']

# Randomly sample records from each sentiment

neutral_sample = neutral_df.sample(n=34)

positive_sample = positive_df.sample(n=33)

negative_sample = negative_df.sample(n=33)

# Concatenate the samples into one DataFrame

dataset = pd.concat([neutral_sample, positive_sample, negative_sample])

# Reset index if needed

dataset.reset_index(drop=True, inplace=True)

# print value counts

print(dataset["airline_sentiment"].value_counts())

Output:

Let's find out the average number of characters per tweet in these 100 tweets.

dataset['tweet_length'] = dataset['text'].apply(len)

average_length = dataset['tweet_length'].mean()

print(f"Average length of tweets: {average_length:.2f} characters")

Output:

Average length of tweets: 103.63 charactersNext, we will perform zero-shot classification of these tweets using GPT-4o mini, GPT-3.5 Turbo, and GPT-4o models.

We will define the find_sentiment() function, which takes the OpenAI client object, model name, prices per input and output token for the model, and the dataset.

The find_sentiment() function will iterate through all the tweets in the dataset and will perform the following task:

- predict their sentiment using the specified model.

- calculate the number of input and output tokens for the request

- calculate the total price to process all the tweets using the total input and output tokens.

- calculate the average latency of all API calls.

- calculate the model accuracy by comparing the actual and predicted sentiments.

Here is the code for the find_sentiment() function.

def find_sentiment(client, model, prompt_token_price, completion_token_price, dataset):

tweets_list = dataset["text"].tolist()

all_sentiments = []

prompt_tokens = 0

completion_tokens = 0

i = 0

exceptions = 0

total_latency = 0

while i < len(tweets_list):

try:

tweet = tweets_list[i]

content = """What is the sentiment expressed in the following tweet about an airline?

Select sentiment value from positive, negative, or neutral. Return only the sentiment value in small letters.

tweet: {}""".format(tweet)

# Record the start time before making the API call

start_time = time.time()

response = client.chat.completions.create(

model=model,

temperature=0,

max_tokens=10,

messages=[

{"role": "user", "content": content}

]

)

# Record the end time after receiving the response

end_time = time.time()

# Calculate the latency for this API call

latency = end_time - start_time

total_latency += latency

sentiment_value = response.choices[0].message.content

prompt_tokens += response.usage.prompt_tokens

completion_tokens += response.usage.completion_tokens

all_sentiments.append(sentiment_value)

i += 1

print(i, sentiment_value)

except Exception as e:

print("===================")

print("Exception occurred:", e)

exceptions += 1

total_price = (prompt_tokens * prompt_token_price) + (completion_tokens * completion_token_price)

average_latency = total_latency / len(tweets_list) if tweets_list else 0

print(f"Total exception count: {exceptions}")

print(f"Total price: ${total_price:.8f}")

print(f"Average API latency: {average_latency:.4f} seconds")

accuracy = accuracy_score(all_sentiments, dataset["airline_sentiment"])

print(f"Accuracy: {accuracy}")

First, Let's call the find_sentiment method using the GPT-4o mini model. You can see that the GPT-4o mini costs 15/60 cents to process a million input and output tokens, respectively.

client = OpenAI(

# This is the default and can be omitted

api_key = os.environ.get('OPENAI_API_KEY'),

)

model = "gpt-4o-mini"

input_token_price = 0.150/1_000_000

output_token_price = 0.600/1_000_000

find_sentiment(client, model, input_token_price, output_token_price, dataset)

Output:

Total exception count: 0

Total price: $0.00111945

Average API latency: 0.5097 seconds

Accuracy: 0.8

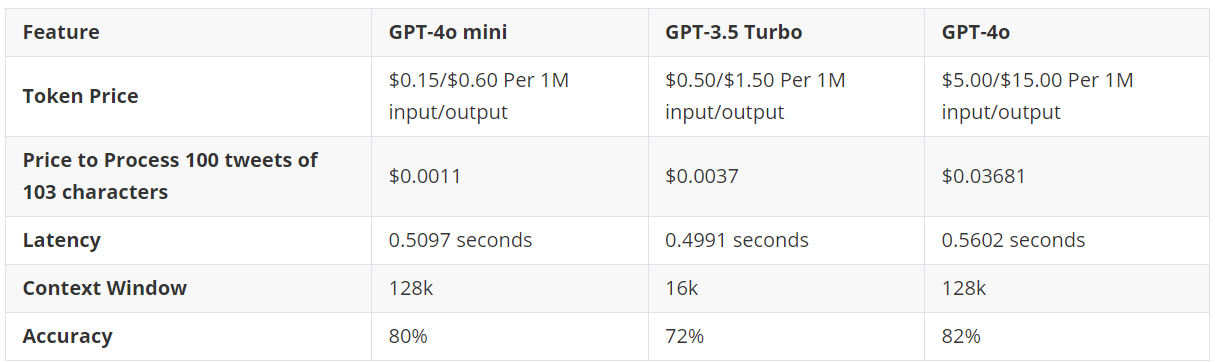

The above output shows that GPT-4o mini costs $0.0011 to process 100 tweets of around 103 characters. The average latency for an API call was 0.5097 seconds. Finally, the model achieved an accuracy of 80% in processing a 100 tweets.

Let's perform the same test with GPT-3.5 Turbo.

client = OpenAI(

# This is the default and can be omitted

api_key = os.environ.get('OPENAI_API_KEY'),

)

model = "gpt-3.5-turbo"

input_token_price = 0.50/1_000_000

output_token_price = 1.50/1_000_000

find_sentiment(client, model, input_token_price, output_token_price, dataset)

Output:

Total exception count: 0

Total price: $0.00370600

Average API latency: 0.4991 seconds

Accuracy: 0.72

The output showed that the GPT-3.5 turbo cost over three times as much as the GPT-4o mini for predicting the sentiment of 100 tweets. The latency is almost similar to that of the GPT-4o mini. Finally, the performance is much lower (72%) compared to that of the GPT-4o mini.

Finally, we can perform the zero-shot sentiment classification with the state-of-the-art GPT-4o.

client = OpenAI(

# This is the default and can be omitted

api_key = os.environ.get('OPENAI_API_KEY'),

)

model = "gpt-4o"

input_token_price = 5.00/1_000_000

output_token_price = 15/1_000_000

find_sentiment(client, model, input_token_price, output_token_price, dataset)

Output:

Total exception count: 0

Total price: $0.03681500

Average API latency: 0.5602 seconds

Accuracy: 0.82

The output shows GPT-4o has slightly slower latency than GPT-4o mini and GPT-3.5 turbo. In terms of performance, GPT-4o achieved 82% accuracy which is 2% higher than GPT-4o mini. However, GPT-4o is 36 times more expensive than GPT-4o mini.

Is a 2% performance gain worth the 36 times higher price? Let me know in the comments.

To conclude, the following table summarizes the tests performed in this article.

I recommend you always prefer the GPT-4o mini over the GPT-3.5 turbo model, as the former is cheaper and more accurate. I would go for the GPT-4o mini if you need to process huge volumes of texts that are not very sensitive and where you can compromise a bit on accuracy. This can save you tons of money.

Finally, I would still go for GPT-4o for the best accuracy, though it costs 36 times more than GPT-4o mini.

Let me know what you think of these results and which model you plan to use.