In my previous article, I compared the Anthropic Claude 3.5 Sonnet and OpenAI GPT-4o models on zero-shot text classification. In that comparison, Claude 3.5 Sonnet significantly outperformed GPT-4o.

These results motivated me to develop a simple retrieval-augmented generation (RAG) system with LangChain that enables the Claude 3.5 Sonnet model to answer questions about custom documents.

By the end of this article, you will know how to develop a chatbot that uses the Claude 3.5 Sonnet LLM to answer questions on custom documents.

So, let's begin without further ado.

The following script installs the libraries required to run scripts in this article.

!pip install -U langchain

!pip install -U langchain-anthropic

!pip install langchain-openai

!pip install pypdf

!pip install faiss-cpu

Subsequently, the script below imports the required libraries into your Python application.

from langchain_anthropic import ChatAnthropic

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

from langchain_community.document_loaders import PyPDFLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain_openai import OpenAIEmbeddings

from langchain_community.vectorstores import FAISS

from langchain.chains.combine_documents import create_stuff_documents_chain

from langchain.chains import create_retrieval_chain

from langchain_core.documents import Document

from langchain.chains import create_history_aware_retriever

from langchain_core.prompts import MessagesPlaceholder

from langchain_core.messages import HumanMessage, AIMessage

import os

Let's first generate a default response using the Claude 3.5 Sonnet LLM in LangChain.

You will need an Anthropic API key, which you can get from the Anthropic Console.

Next, create an object of the ChatAnthropic class and pass the Anthropic API key, the model ID, and the temperature value to its constructor. The temperature controls how random the model's output is: lower values make responses more focused and predictable, while higher values make them more varied and creative.
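To build some intuition for the temperature parameter, here is a minimal sketch that assumes the textbook formulation: the model's raw token scores are divided by the temperature before a softmax turns them into probabilities. The scores and the function name `softmax_with_temperature` are made up for illustration, and the sketch does not describe how Anthropic samples tokens internally.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    # Subtract the maximum for numerical stability before exponentiating.
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
low = softmax_with_temperature(logits, 0.3)
high = softmax_with_temperature(logits, 1.5)

print([round(p, 3) for p in low])   # probability mass concentrates on the top token
print([round(p, 3) for p in high])  # probability mass spreads across the tokens
```

With the lower temperature, almost all of the probability goes to the highest-scoring token, whereas the higher temperature gives the less likely tokens a better chance of being picked.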

Finally, pass the prompt to the invoke() method of the ChatAnthropic object to generate the model response.

anthropic_api_key = os.environ.get('ANTHROPIC_API_KEY')

llm = ChatAnthropic(model="claude-3-5-sonnet-20240620",

                    anthropic_api_key=anthropic_api_key,

                    temperature=0.3)

result = llm.invoke("Write a funny poem for an ice cream shop on a beach.")

print(result.content)

Output:

The LangChain ChatPromptTemplate class lets you build a chatbot prompt from a sequence of chat messages. Its from_messages() method accepts a list of (role, template) pairs, so the conversation is expressed in message format. In this setup, you must provide the user message, while the system message is optional.
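Conceptually, a message template is a list of (role, text) pairs whose placeholders are filled in at call time. The following sketch mimics that idea with plain Python string formatting. The helper `fill_messages` is hypothetical and is not part of LangChain; it only illustrates what happens to the `{assistant}` and `{input}` placeholders.

```python
def fill_messages(templates, values):
    """Fill {placeholders} in (role, template) pairs with the given values."""
    return [(role, text.format(**values)) for role, text in templates]

templates = [
    ("system", "{assistant}"),
    ("user", "{input}"),
]

messages = fill_messages(
    templates,
    {"assistant": "You are a comedian", "input": "Write a funny poem."},
)

for role, content in messages:
    print(f"{role}: {content}")
```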

You can use the StrOutputParser class to parse the model response in string format, as shown in the script below:

prompt = ChatPromptTemplate.from_messages([

    ("system", "{assistant}"),

    ("user", "{input}")

])

output_parser = StrOutputParser()

chain = prompt | llm | output_parser

result = chain.invoke(

    {"assistant": "You are a comedian",

     "input": "Write a funny poem for a music store on a beach."}

)

print(result)

Output:

Now you know how to call the Claude 3.5 Sonnet LLM in LangChain. In this section, we will augment the Claude 3.5 Sonnet model's knowledge so that it can answer questions about documents it did not see during training.

We start by loading and splitting the document using PyPDFLoader. In this case, we load "The English Constitution" by Walter Bagehot from a URL.

The load_and_split() method in the following script loads the PDF and divides it into manageable sections.

loader = PyPDFLoader("https://web.archive.org/web/20170809122528id_/http://global-settlement.org/pub/The%20English%20Constitution%20-%20by%20Walter%20Bagehot.pdf")

docs = loader.load_and_split()
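To picture what "dividing a document into manageable sections" means, here is a simplified sketch that cuts a string into fixed-size character chunks with a small overlap between neighbors. It is a stand-in for illustration only: the function `split_with_overlap` and its parameters are hypothetical, and LangChain's own splitters work differently (for example, by trying to split on paragraph and line boundaries first).

```python
def split_with_overlap(text, chunk_size, overlap):
    """Split text into fixed-size character chunks that share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current chunk reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks

sample = "The House of Commons is elected; the House of Lords is not."
chunks = split_with_overlap(sample, chunk_size=25, overlap=5)

for chunk in chunks:
    print(repr(chunk))
```

The overlap means a sentence that straddles a chunk boundary still appears, at least partly, in both neighboring chunks, which reduces the chance that a relevant passage is cut in half.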

Next, we create an embedding model using the OpenAIEmbeddings class, which calls the OpenAI API. We then split the text into smaller chunks with RecursiveCharacterTextSplitter and create a FAISS vector store from the split documents and their embeddings. This step transforms the text into vectors suitable for retrieval and similarity search.

openai_key = os.environ.get('OPENAI_API_KEY')

embeddings = OpenAIEmbeddings(openai_api_key = openai_key)

text_splitter = RecursiveCharacterTextSplitter()

documents = text_splitter.split_documents(docs)

vector = FAISS.from_documents(documents, embeddings)
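To make similarity search less abstract, the sketch below replaces the real embedding model with a toy bag-of-words vector and ranks chunks by cosine similarity to the query. Everything here is an assumption for illustration: the functions `embed`, `cosine_similarity`, and `search` are hypothetical, the sample chunks are invented, and real embeddings capture meaning far better than word counts do.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a real model)."""
    return Counter(text.lower().split())

def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def search(query, chunks, k=1):
    """Return the k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(query_vec, embed(chunk)),
        reverse=True,
    )
    return ranked[:k]

chunks = [
    "the house of commons is an elected chamber",
    "the monarch has a dignified role",
    "the cabinet links the legislature and the executive",
]

top = search("who sits in the house of commons", chunks, k=1)
print(top[0])
```

A vector store such as FAISS performs the same kind of nearest-neighbor lookup, but over dense embedding vectors and with indexing structures designed for large collections.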

The next step is to define a prompt template using the ChatPromptTemplate class. The template instructs the model to answer questions based solely on the provided context, which helps keep responses grounded in the document. The create_stuff_documents_chain function links this template with the language model, forming a document chain that will be used for generating responses.

prompt = ChatPromptTemplate.from_template("""Answer the following question based only on the provided context:

Question: {input}

Context: {context}

"""

)

document_chain = create_stuff_documents_chain(llm, prompt)
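The word "stuff" in the chain's name refers to a simple strategy: all supplied documents are concatenated and placed into a single prompt. The sketch below shows that idea with plain strings. The helper `stuff_documents` and the sample texts are hypothetical, and the separator is an assumption chosen for readability, so treat this as an illustration rather than LangChain's implementation.

```python
PROMPT_TEMPLATE = (
    "Answer the following question based only on the provided context:\n\n"
    "Question: {input}\n\n"
    "Context: {context}\n"
)

def stuff_documents(question, documents, separator="\n\n"):
    """Join all document texts into one context string and fill the prompt."""
    context = separator.join(documents)
    return PROMPT_TEMPLATE.format(input=question, context=context)

sample_docs = ["Chunk one about the Cabinet.", "Chunk two about the Monarchy."]
final_prompt = stuff_documents("What does the Cabinet do?", sample_docs)
print(final_prompt)
```

Because every document ends up in one prompt, this strategy works best when the retrieved chunks comfortably fit within the model's context window.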

We convert the vector store into a retriever object with the vector.as_retriever() method. The retriever, combined with the document chain, forms a retrieval_chain. This setup enables the system to fetch relevant document sections based on the user's query and use them as context for generating answers.

retriever = vector.as_retriever()

retrieval_chain = create_retrieval_chain(retriever, document_chain)
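The overall flow of a retrieval chain can be sketched in a few lines: retrieve context for the query, pass the query and context to an answering step, and return everything in a dictionary. The code below uses stand-ins throughout. `make_retrieval_chain`, `keyword_retriever`, and `fake_llm` are hypothetical names, the corpus is invented, and no real model is called.

```python
def make_retrieval_chain(retrieve, answer_from_context):
    """Compose a retriever and an answering step into one callable."""
    def run(inputs):
        context = retrieve(inputs["input"])
        answer = answer_from_context(inputs["input"], context)
        return {"input": inputs["input"], "context": context, "answer": answer}
    return run

def keyword_retriever(query):
    """Stand-in retriever: return corpus entries sharing a word with the query."""
    corpus = ["commons are elected", "lords are appointed"]
    words = set(query.lower().split())
    return [doc for doc in corpus if words & set(doc.split())]

def fake_llm(question, context):
    """Stand-in model: report how much context it received."""
    return f"Answer based on {len(context)} retrieved chunk(s)."

chain = make_retrieval_chain(keyword_retriever, fake_llm)
response = chain({"input": "Who sits in the commons"})
print(response["answer"])
```

Returning the retrieved context alongside the answer is useful for debugging, since you can inspect which passages the answer was based on.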

Finally, we define the generate_response() function that takes a query as input, invokes the retrieval chain, and prints the answer.

def generate_response(query):

    # Run the retrieval chain and print only the generated answer.
    response = retrieval_chain.invoke({"input": query})

    print(response["answer"])

To demonstrate the system in action, we run a few example queries as shown below:

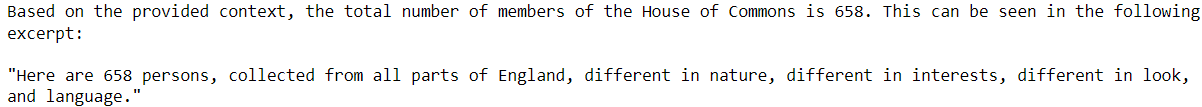

Query1:

query = "What is the total number of members of the house of commons?"

generate_response(query)

Output:

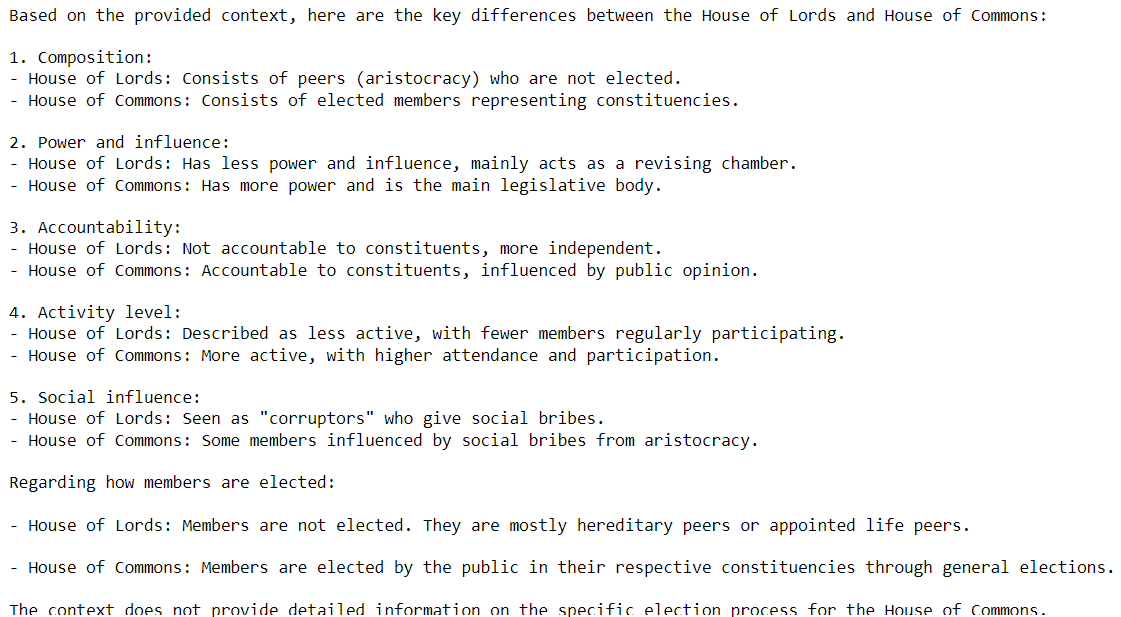

Query2:

query = "What is the difference between the house of lords and house of commons? How members are elected for both?"

generate_response(query)

Output:

Query3:

query = "How many players participate in a football game?"

generate_response(query)

Output:

You can see that the model correctly answered the queries related to the custom document and declined to answer the question that is unrelated to the document.

The retrieval-augmented generation (RAG) technique has made it much easier to develop customized chatbots for various data sources. In this article, we demonstrated how to build a chatbot using the Claude 3.5 Sonnet model to answer questions based on previously unseen documents. This method can be applied to create chatbots capable of querying diverse data sources such as PDFs, websites, and text documents.

I encourage you to leverage Claude 3.5 Sonnet to develop your custom chatbots, explore its powerful capabilities, and share your experience and feedback in the comments section.